Team,

Could someone experienced with DCAN and the RX FIFO please comment on the following?

Work is currently being done on the DCAN driver for the AM335x to fix bugs and improve performance. Some patches appear to have been merged already into the current 3.14 kernel tree, which is comparable to what we have in TI SDK 7.0 (based on 3.12) but with additional patches:

https://git.kernel.org/cgit/linux/kernel/git/torvalds/linux.git/tree/drivers/net/can/c_can/c_can.c?id=refs/tags/v3.14

Right now there are some issues when using the RX FIFO mechanism. The complete description is below.

From the description, the driver's use of the RX FIFO seems to conform to the DCAN section of the TRM (SPRUH73I) and to the Bosch IP specification.

Still, I would like to check the following:

The section "23.3.15.12 Reading From a FIFO Buffer" says:

Reading from a FIFO buffer message object and resetting its NewDat bit is handled the same way as reading from a single message object.

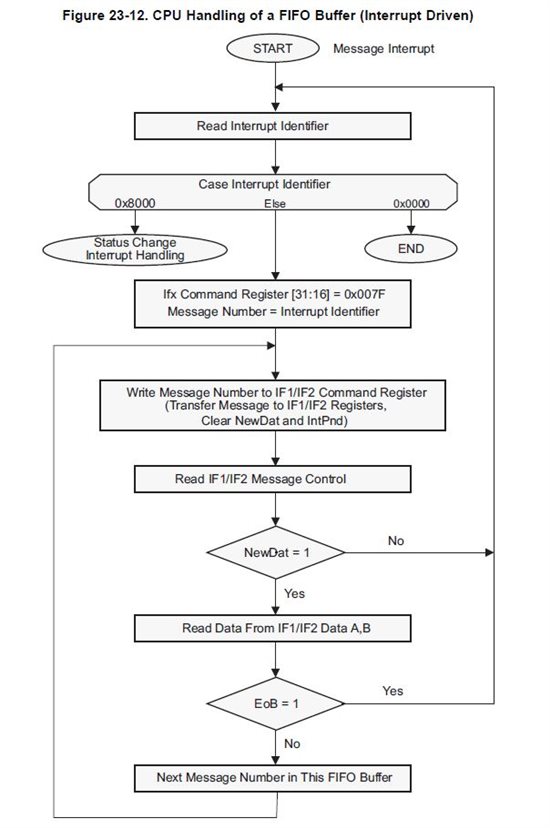

and the flowchart in Figure 23-12 shows that the operation is sequential: read one message, clear its NewDat bit, and so on, then start again with the next message.
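To make the question concrete, the sequential flow can be sketched against a simple software model of the message RAM. This is only a sketch under stated assumptions: `struct msg_obj`, `ram[]`, `deliver()` and `read_fifo_sequential()` are hypothetical names, the FIFO is assumed to span message objects 1-16, and the real driver accesses objects through the IF1/IF2 interface registers rather than a plain array.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define FIFO_FIRST 1  /* assumption: RX FIFO spans message objects 1..16 */
#define FIFO_LAST 16

/* Hypothetical model of one RX message object (not the DCAN register layout). */
struct msg_obj {
    bool newdat;
    uint32_t id;
};

/* Simulated message RAM, indexed 1..16 (index 0 unused). */
static struct msg_obj ram[FIFO_LAST + 1];

/* Hypothetical stand-in for handing a frame to the network stack. */
static uint32_t delivered[64];
static int n_delivered;

static void deliver(uint32_t id)
{
    assert(n_delivered < 64);
    delivered[n_delivered++] = id;
}

/* Sequential handling as drawn in the flowchart: for each object in order,
 * read it and release it (clear NewDat) before moving on to the next one.
 * Simplification: stops at the first object without new data.
 * Returns the number of frames read. */
static int read_fifo_sequential(void)
{
    int n = 0;
    for (int obj = FIFO_FIRST; obj <= FIFO_LAST; obj++) {
        if (!ram[obj].newdat)
            break;                /* no more pending messages */
        deliver(ram[obj].id);     /* 1. read the message */
        ram[obj].newdat = false;  /* 2. release this object immediately */
        n++;
    }
    return n;
}
```

In this scheme each object is released as soon as it has been read, so the message handler can reuse it right away.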

Q: Is it a requirement to do this sequentially? Or can it be parallelized, e.g. read n messages, then clear n NewDat bits, and so on?

The current Linux driver linked above appears to parallelize access to the FIFO buffer in this way. This seems to work most of the time, but some messages are still dropped from time to time (see the problem description).

------------------ Description of the issue and test case, from the driver developer:

Configure the CAN interface and use two other CAN nodes to send packets in bursts. Each burst is triggered by a sync packet sent by one of the nodes.

In our case, the following CAN IDs appear on the bus:

0x080 <- Sync

0x18e <- Answer frames expected in that order

0x18d

0x28e

0x28d

0x38e

0x38d

0x48e

0x48d

0x1ce

0x1cd

0x2ce

0x2cd

0x3ce

0x3cd

0x4ce

0x4cd

0x080 <- Sync

....

The sync is sent every 10 ms. Every 41 ms the nodes also send a heartbeat packet (IDs 0x70e and 0x70d).

We use candump with timestamps to read the packets from the CAN socket and forward them over the network to a PC, which checks the packets for ordering and timing correctness.

This shows a dropped packet about once per second, and we can prove that the packet is silently lost inside the D_CAN IP core.
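For scale, a rough estimate of the frame rate, assuming each 10 ms cycle consists of exactly the sync plus the 16 answer frames listed above, and that each heartbeat period carries one frame per node (0x70e and 0x70d):

$$
\frac{1 + 16\ \text{frames}}{10\ \text{ms}} + \frac{2\ \text{frames}}{41\ \text{ms}} \approx 1700 + 48.8 \approx 1749\ \text{frames/s}
$$

So one drop per second corresponds to roughly 1 lost frame in 1750 (about 0.06 %), which fits a narrow race window rather than a systematic failure.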

We instrumented the bug-fixed driver and found the following issue.

The driver uses 16 message buffers as the RX FIFO to avoid potential reordering issues. The receive routine reads the first 8 packets from message buffers 1-8 but leaves their NewDat bits set, so the hardware message handler queues any new packets in the upper buffers.

After reading message buffer 8, it clears the NewDat bits of message buffers 1-8, so the hardware message handler starts queuing from the beginning of the FIFO again.

This works most of the time, but we can correlate the packet loss with this way of handling the message buffers.
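The intended behaviour of this batched scheme can be sketched with an idealized model of the message handler, assuming (as the text states) that the hardware always stores a frame in the lowest-numbered object whose NewDat bit is clear. All names (`hw_receive`, `read_low_half_batched`, `ram`) are hypothetical, and the model deliberately does not reproduce the loss reported above; it only shows why leaving NewDat set should push new frames into objects 9-16.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define FIFO_LEN 16  /* objects 1..16 form the RX FIFO */
#define LOW_HALF 8   /* objects read before their NewDat bits are cleared */

/* Hypothetical model of a message object, not the real register layout. */
struct msg_obj {
    bool newdat;
    uint32_t id;
};

static struct msg_obj ram[FIFO_LEN + 1]; /* index 0 unused */

/* Idealized message handler: store the frame in the lowest-numbered object
 * whose NewDat bit is clear. Returns the object number, or 0 if every
 * object is pending (see the TRM for what the real IP does in that case;
 * this model just reports "full"). */
static int hw_receive(uint32_t id)
{
    for (int obj = 1; obj <= FIFO_LEN; obj++) {
        if (!ram[obj].newdat) {
            ram[obj].newdat = true;
            ram[obj].id = id;
            return obj;
        }
    }
    return 0;
}

/* Batched scheme: read objects 1..8 but leave NewDat set, so frames that
 * arrive meanwhile are stored in 9..16; afterwards clear NewDat of every
 * object that was read, one at a time. Returns the number of frames read. */
static int read_low_half_batched(uint32_t out[LOW_HALF])
{
    int n = 0;
    /* Pass 1: copy out pending frames; NewDat stays set. */
    while (n < LOW_HALF && ram[n + 1].newdat) {
        out[n] = ram[n + 1].id;
        n++;
    }
    /* Pass 2: release the objects that were read. On the AM335x this is
     * the window in which frames are reported to be lost. */
    for (int obj = 1; obj <= n; obj++)
        ram[obj].newdat = false;
    return n;
}
```

After the batch, the oldest unread frame sits in object 9 while the next arrival goes to object 1, so the driver has to drain 9-16 before returning to the lower half.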

RX Packet 1 --> message buffer 1 (NewDat bit is not cleared)

RX Packet 2 --> message buffer 2 (NewDat bit is not cleared)

RX Packet 3 --> message buffer 3 (NewDat bit is not cleared)

RX Packet 4 --> message buffer 4 (NewDat bit is not cleared)

RX Packet 5 --> message buffer 5 (NewDat bit is not cleared)

RX Packet 6 --> message buffer 6 (NewDat bit is not cleared)

RX Packet 7 --> message buffer 7 (NewDat bit is not cleared)

RX Packet 8 --> message buffer 8 (NewDat bit is not cleared)

Clear newdat bit in message buffer 1

Clear newdat bit in message buffer 2

Clear newdat bit in message buffer 3

Clear newdat bit in message buffer 4

Clear newdat bit in message buffer 5

Clear newdat bit in message buffer 6

Clear newdat bit in message buffer 7

Clear newdat bit in message buffer 8

If a new message arrives while the NewDat bits are being cleared, the hardware gets confused and drops it.

It does not matter how many of them are cleared. I put a delay between clearing buffer 1 and buffer 2 that was long enough for the message to be queued in either buffer 1 or buffer 9.

But it did not show up anywhere: the next packet after the dropped one ended up in buffer 1. So the hardware lost a packet without reporting it through any of its error mechanisms.

This does not happen on every NewDat clear, but the instrumentation clearly shows that the packet loss always occurs while the NewDat bits of the lower message buffers are being cleared.

If we clear the NewDat bit of each of the first 8 message buffers right away (immediately after reading it), the packet loss is no longer observed. However, this opens up the following behaviour, which is unlikely but observable under certain circumstances:

Three new messages are in buffers 1-3

Read Packet 1 from buffer 1

Hardware queues a new packet in buffer 1

Hardware queues a new packet in buffer 4

Read Packet 2 from buffer 2

Read Packet 3 from buffer 3

Now there are two new messages, in buffers 1 and 4, but no way to tell which one was queued first, because the events might have happened in this order instead:

Read Packet 1 from buffer 1

Hardware queues a new packet in buffer 4

Read Packet 2 from buffer 2

Hardware queues a new packet in buffer 1

Read Packet 3 from buffer 3

There might be a way to figure this out by polling the NewDat pending bits over and over, but we want to avoid that, and the polling would be asynchronous to the message handler anyway.

So we cannot really know what happens in the background.

The documentation does not forbid the way Linux uses the hardware, and there is no apparent reason why this should cause packet loss.

It only states that the hardware always stores incoming messages in the lowest-numbered available buffer.

So leaving the NewDat bits set to force the hardware to enqueue into the upper buffers is a reasonable design choice.

Thanks in advance and best regards,

Anthony