This is a continuation of my previous post. I'm approaching the problem in more detail now, so I thought it best to start a new post.

I am trying to stream audio to the AM335x EVM. I was having problems with network performance, especially over Wi-Fi. The TI employee who is helping me in my post in the Wi-Fi Forum said it might be a resource issue. However, this system should be able to handle the load, as its processor is faster than the one used in the Streaming Audio Reference Design.

I have now removed the daughterboard from the EVM and am working with the McASP1 port directly. I plan to hook up an external codec, but first I am debugging the issues using a logic analyzer and software-based I2S decoding.

I followed this example to create a new driver for my codec, and I am successfully decoding the I2S data, converting it into a .wav file, and playing it back on my laptop.
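For anyone wanting to reproduce the .wav step, here is a minimal sketch of writing decoded samples to a file with Python's standard `wave` module. It assumes signed 16-bit, interleaved stereo samples at 48 kHz, which is only a guess since the actual format is not stated here, and `write_wav` is a hypothetical helper name rather than anything from the SDK or the logic analyzer software.

```python
import struct
import wave


def write_wav(target, samples, sample_rate=48000, channels=2):
    """Write interleaved signed 16-bit samples to a PCM WAV file.

    target may be a file path or a writable, seekable file object.
    sample_rate and channels are assumptions; set them to match the
    real I2S configuration.
    """
    # Pack the integers as little-endian 16-bit values, as WAV expects.
    frames = struct.pack("<%dh" % len(samples), *samples)
    with wave.open(target, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(frames)
```

Calling `write_wav("capture.wav", decoded_samples)` with the list of decoded sample values should give a file that a normal audio player can open, provided the assumed format matches the capture.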

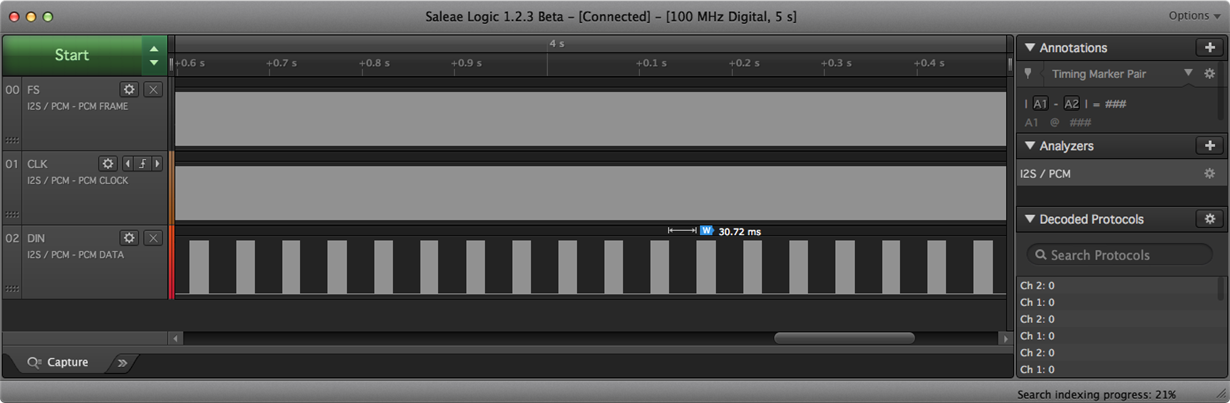

The problem is that whenever I try to stream across a network interface - even loopback via localhost - there are 30.72ms gaps in my I2S data stream. The clock and frame keep going, but the data line stays low during that time.

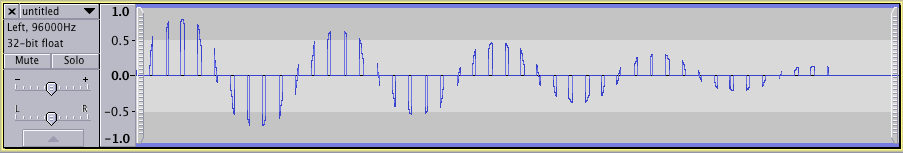

I streamed a sine wave and can see that the samples are being separated, not dropped. In other words, if I were to manually delete the 30.72ms gaps, the sound would be correct.
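The "separated, not dropped" check can be automated rather than done by hand. Below is a rough sketch, assuming the gaps decode as long runs of zero-valued samples (the data line stays low) and that the decoded samples are available as a list of integers. The function names and the `min_len` threshold are hypothetical and would need tuning for the actual sample rate and channel count.

```python
def find_zero_runs(samples, min_len):
    """Return (start, end) index pairs for runs of zeros of at least min_len.

    end is exclusive. Short runs are ignored so that the ordinary zero
    crossings of a sine wave are not mistaken for gaps.
    """
    runs = []
    start = None
    for i, value in enumerate(samples):
        if value == 0:
            if start is None:
                start = i  # a new run of zeros begins here
        else:
            if start is not None and i - start >= min_len:
                runs.append((start, i))
            start = None
    # Handle a run that extends to the end of the capture.
    if start is not None and len(samples) - start >= min_len:
        runs.append((start, len(samples)))
    return runs


def remove_runs(samples, runs):
    """Return a copy of samples with the given (start, end) runs cut out."""
    out = []
    prev = 0
    for start, end in runs:
        out.extend(samples[prev:start])
        prev = end
    out.extend(samples[prev:])
    return out
```

Dividing each run length by the number of samples per second gives the gap duration, which should come out near 30.72 ms if these runs are the same gaps seen on the logic analyzer. If the output of `remove_runs` is a clean sine wave, that supports the idea that samples are being delayed rather than lost.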

I've also tried streaming locally while exercising the Wi-Fi module using iperf to see if it was a contention or resource conflict issue, but that doesn't seem to be the case. So it seems to be something specific to using GStreamer from a network interface (even loopback) to the audio device.

Here is a picture of the issue on the logic analyzer:

Here is a picture of what a sine wave looked like after decoding the I2S:

All help is appreciated!

I'm using the latest version of the SDK (01.00.00.03), and the kernel is Linux am335x-evm 3.14.43-g875c69b.

Andrew