Hello!

While stepping through SPI driver code to find out why I was not getting any data from the McSPI peripheral, I ran into something that has me questioning my assumptions about register access. The correct, expected data from the remote chip is clearly coming in on the spi0_d1 pin, as shown on my scope (MCSPI_CH0CONF.IS == 1, .DPE1 == 1, .DPE0 == 0).

What I'm working with: Win7 64-bit

Dev Env: CCS 6.1.2

Platform: Custom board with MYIR brand MCC-AM335X-Y board with AM3358, 250MB RAM and other electronics that seem to be working perfectly.

Packages: SYS/BIOS 6.45.1.29, UIA 2.0.5.50, AM335x PDK 3.0 (installs C:\ti\pdk_am335x_1_0_3\...)

Relatively speaking, I'm a newbie in the AM335x world.

----

Up to this point, reading from and writing to registers in my AM3358 has (in my application) been going perfectly. I have also been assuming that, because of the way I set up the MMU, I should be able to read and write registers without having to worry about the L1 & L2 caches.

Pasted from my APP.CFG file:

// Near top:

var Mmu = xdc.useModule('ti.sysbios.family.arm.a8.Mmu');

.

.

.

// Later

/*---------------------------------

* MMU

*---------------------------------*/

Mmu.enableMMU = true;

/* Force peripheral section to be NON cacheable strongly-ordered memory */

var perAttrs = {

type : Mmu.FirstLevelDesc_SECTION, // SECTION descriptor (section = 1MB)

tex: 0,

bufferable : false, // bufferable

cacheable : false, // cacheable

shareable : false, // shareable

noexecute : true, // not executable

};

/* Base addresses in which needed peripherals reside. */

/* L4_WKUP domain:

* Clock Module, Power Reset Module, DT0, GPIO0, UART0, I2C0, ADC_TSC,

* Control Module, DDR2/3/PHY, DT1, WDT1, RTCSS. */

var perBaseAddr0 = 0x44e00000;

/* L4_PER (peripheral) domain:

* UART1&2, I2C1, McSPI0, McASP0&1_CFG, DMTimer2-7, GPIO1, MMCHS0, ELM, MBX0, Spinlock. */

var perBaseAddr1 = 0x48000000;

/* I2C2, McSPI1, UART3-5, GPIO2-3, DCAN0&1, MMC1. */

var perBaseAddr2 = 0x48100000;

/* Interrupt Controller. */

var perBaseAddr3 = 0x48200000;

/* PWM sub-systems, LCD Controller. */

var perBaseAddr4 = 0x48300000;

/* PRU_ICSS. */

var perBaseAddr5 = 0x4A300000;

/* Configure the corresponding MMU page descriptors. */

Mmu.setFirstLevelDescMeta(perBaseAddr0, perBaseAddr0, perAttrs);

Mmu.setFirstLevelDescMeta(perBaseAddr1, perBaseAddr1, perAttrs);

Mmu.setFirstLevelDescMeta(perBaseAddr2, perBaseAddr2, perAttrs);

Mmu.setFirstLevelDescMeta(perBaseAddr3, perBaseAddr3, perAttrs);

Mmu.setFirstLevelDescMeta(perBaseAddr4, perBaseAddr4, perAttrs);

Mmu.setFirstLevelDescMeta(perBaseAddr5, perBaseAddr5, perAttrs);
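For reference, here is a minimal C sketch of how I understand the TEX/C/B bits of an ARMv7-A section descriptor to select a memory type. It assumes TEX remap is disabled (I have not verified that SYS/BIOS leaves it disabled), and the enum and function names are my own, not from the PDK or SYS/BIOS.

```c
#include <stdint.h>

/* Memory types selectable by TEX/C/B in an ARMv7-A short-descriptor
 * section entry, assuming TEX remap is disabled (an assumption). */
typedef enum {
    MEM_STRONGLY_ORDERED,
    MEM_DEVICE_SHAREABLE,
    MEM_DEVICE_NON_SHAREABLE,
    MEM_NORMAL_NON_CACHEABLE,
    MEM_OTHER                 /* cacheable Normal memory or a reserved encoding */
} MemType;

/* Hypothetical helper: classify a section from its TEX, C and B settings. */
static MemType classify_section(uint8_t tex, int cacheable, int bufferable)
{
    if (tex == 0 && !cacheable && !bufferable) return MEM_STRONGLY_ORDERED;
    if (tex == 0 && !cacheable &&  bufferable) return MEM_DEVICE_SHAREABLE;
    if (tex == 2 && !cacheable && !bufferable) return MEM_DEVICE_NON_SHAREABLE;
    if (tex == 1 && !cacheable && !bufferable) return MEM_NORMAL_NON_CACHEABLE;
    return MEM_OTHER;
}
```

With the perAttrs above (tex 0, cacheable false, bufferable false), classify_section(0, 0, 0) yields MEM_STRONGLY_ORDERED, which matches the comment in my config.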

Simultaneously, I have been ASSUMING that accessing the registers via the methods demonstrated in the PDK drivers, via code like this:

HW_RD_REG32(baseAddr + MCSPI_CHSTAT(chNum))

was giving me (over and above the MMU settings) a data barrier instruction after every register WRITE (although I see that it is programmed into register reads as well).
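To make that assumption concrete, this is roughly the pattern I believed I was getting from those macros: a volatile access followed by a barrier. It is only a sketch with hypothetical names (reg_barrier, reg_rd32, reg_wr32), not the PDK's actual code, and it assumes a GCC-compatible compiler for the inline asm syntax.

```c
#include <stdint.h>

/* Hypothetical barrier helper: emits DSB when the compiler reports an
 * ARMv7-A target, otherwise only a compiler-level barrier. */
static inline void reg_barrier(void)
{
#if defined(__ARM_ARCH_7A__)
    __asm__ volatile ("dsb" ::: "memory");
#else
    __asm__ volatile ("" ::: "memory");
#endif
}

/* Read a 32-bit register, then issue the barrier. */
static inline uint32_t reg_rd32(uintptr_t addr)
{
    uint32_t value = *(volatile uint32_t *)addr;
    reg_barrier();
    return value;
}

/* Write a 32-bit register, then issue the barrier. */
static inline void reg_wr32(uintptr_t addr, uint32_t value)
{
    *(volatile uint32_t *)addr = value;
    reg_barrier();
}
```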

Specifically, without intending to do so, my code is calling the register operations in <pdk>\packages\ti\csl\hw_types.h, and I've seen various ways that things like

HW_RD_REG32_RAW()

are coded. For example, the <pdk>\packages\ti\starterware\include\hw\hw_types.h version of this file hard-codes an asm("dsb") in the actual functions, but in the <pdk>\packages\ti\csl\hw_types.h version of the file, it codes an

HW_MEM_BARRIER();

which is defined in the same file as:

static inline void HW_MEM_BARRIER(void)

{

#if defined(__ARMv7)

#ifndef MEM_BARRIER_DISABLE

asm(" dsb");

#endif

#endif

}

And I believe in other hw_types.h code I have seen (perhaps older versions of the PDK? since I can't find them now), these are all coded in macros.

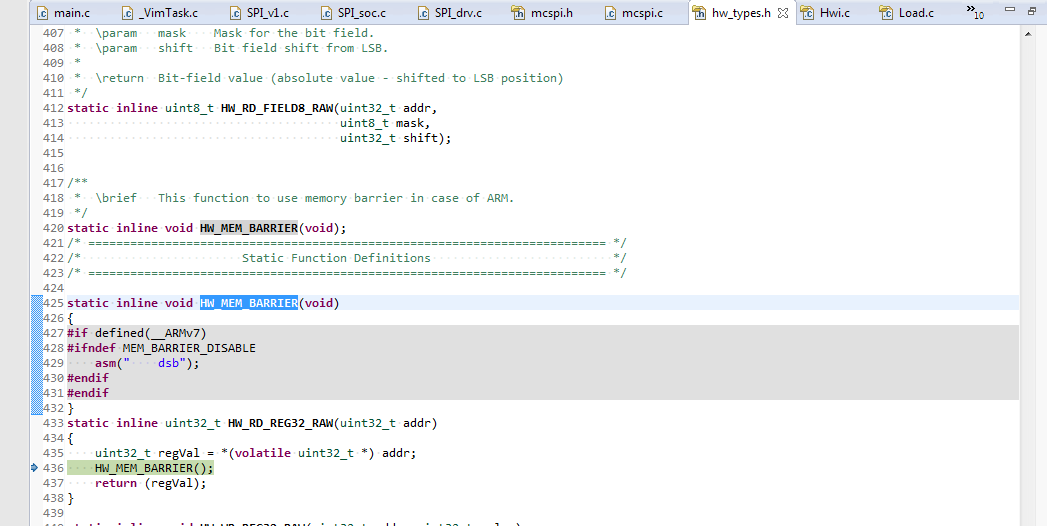

Since I know I'm not defining the symbol MEM_BARRIER_DISABLE, I have been happily assuming that this asm("dsb"); was executing. However, just while ago in stepping into this code, I saw this on my screen:

And suddenly realized that the symbol __ARMv7 was not being defined, though I understand that the Cortex-A8 in the AM3358 is an ARMv7 processor.
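A minimal sketch of how this could be checked without the debugger, by mirroring the same preprocessor guard in a small function. The function names are hypothetical. __ARM_ARCH_7A__ is what GCC predefines for an ARMv7-A target; __TI_ARM_V7__ is what I believe the TI ARM compiler predefines, so treat that one as an assumption.

```c
/* Mirrors the guard around asm(" dsb") in the CSL hw_types.h:
 * returns 1 only if the DSB would actually be compiled in. */
static int dsb_compiled_in(void)
{
#if defined(__ARMv7) && !defined(MEM_BARRIER_DISABLE)
    return 1;
#else
    return 0;
#endif
}

/* Reports whether the compiler's own predefined macros indicate an
 * ARMv7 target (macro names are assumptions, see the text above). */
static int compiler_targets_armv7(void)
{
#if defined(__ARM_ARCH_7A__) || defined(__TI_ARM_V7__)
    return 1;
#else
    return 0;
#endif
}
```

If dsb_compiled_in() returns 0 while compiler_targets_armv7() returns 1, that would suggest __ARMv7 is a symbol the project is expected to define itself (for example with a -D__ARMv7 compiler option) rather than one the compiler provides.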

4 questions:

Q1: Given the above (MMU register memory ranges set for bufferable == false combined with the fact that the DSB instruction isn't being executed), what is ACTUALLY happening inside my processor and the L1 & L2 cache?

Q2: Should I be defining the __ARMv7 symbol in my project?

Q3: Is the data barrier instruction only needed when the MMU is set to cache register address ranges?

Q4: Why in the hw_types.h code are there data barrier instructions on REGISTER READs? I thought (possibly erroneously) that any type of external memory read would read straight through the L1/L2 cache (regardless of MMU settings) and thus the L1/L2 cache would be properly synchronized on ALL reads, and only at risk of being out of sync on CPU writes to external memory (thus the need for the data barrier instruction). Am I missing some basic information about what the DSB instruction actually does and why it is needed?

Kind regards,

Vic