Hi,

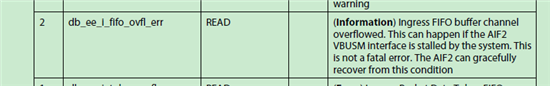

I am using the AIF2 module on the C6670 in CPRI mode with LTE 20 MHz. Occasionally the DB module reports the error db_ee_i_fifo_ovfl_err, and the AIF2 RX side then loses some symbol data.

I have already posted about this problem in:

http://e2e.ti.com/support/dsp/c6000_multi-core_dsps/f/639/t/315721.aspx

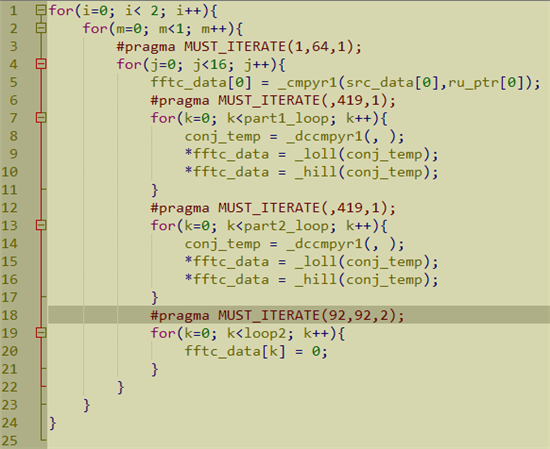

Normally, I receive the symbols in sequence, from 0 to 139 in LTE mode. But when the error happens, some symbols are lost.

I tried the following to optimize the usage of AIF2:
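For reference, the gaps can be detected on the receive side with a check along these lines. This is only a minimal sketch: it assumes the application already knows the index (0 to 139) of each received symbol, and `symbol_tracker_t`, `symbol_tracker_init` and `symbol_tracker_update` are hypothetical names, not part of the AIF2 LLD or CSL.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Symbols are numbered 0..139, as described above. */
#define SYMBOLS_PER_FRAME 140u

/* Hypothetical helper type: tracks the next expected symbol index. */
typedef struct {
    uint32_t expected;   /* next symbol index we expect to receive */
    uint32_t lost_total; /* running count of skipped symbols */
    int      synced;     /* 0 until the first symbol has been seen */
} symbol_tracker_t;

static void symbol_tracker_init(symbol_tracker_t *t)
{
    assert(t != NULL);
    t->expected = 0u;
    t->lost_total = 0u;
    t->synced = 0;
}

/*
 * Feed the index of each received symbol.
 * Returns how many symbols were skipped before this one
 * (0 when the symbol arrived in sequence).
 */
static uint32_t symbol_tracker_update(symbol_tracker_t *t, uint32_t symbol)
{
    uint32_t lost = 0u;

    assert(t != NULL);
    assert(symbol < SYMBOLS_PER_FRAME);

    if (t->synced) {
        /* Modular distance from the expected index to the received one. */
        lost = (symbol + SYMBOLS_PER_FRAME - t->expected) % SYMBOLS_PER_FRAME;
    }

    t->synced = 1;
    t->expected = (symbol + 1u) % SYMBOLS_PER_FRAME;
    t->lost_total += lost;
    return lost;
}
```

Calling `symbol_tracker_update()` once per received symbol and logging every non-zero return value shows how many symbols each overflow costs. Note that this cannot see a loss of exactly 140 symbols (or a multiple of it), because the index wraps around.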

1: Changed the PKTDMA priority of AIF2 to the highest level, 0, and the priority of all other PKTDMA modules to 1.

2: Placed the AIF2 RX descriptors in L2 SRAM and the TX descriptors in DDR.

3: Increased the DB buffer size to the maximum value, "CSL_AIF2_DB_FIFO_DEPTH_QW256".

These changes have some effect and the error is now rare, but it still occurs occasionally. Is there any way to solve this problem once and for all?

Thanks for your reply.

Regards,

ziyang