Hello,

My understanding is that DDR3 write access should be faster with the L2 cache enabled than with it disabled.

However, in my test case on the K2HEVM, the C66x is slower with the L2 cache enabled.

Question)

- Is it expected that the C66x is slower with the L2 cache enabled?

- Is there a workaround?

Our test case)

unsigned char* pDst = (unsigned char*)(0x10880000);

unsigned char* pSrc = (unsigned char*)(0x80000000);

memset(pSrc, value, 512*1024);

CACHE_wbInvL2Wait();

memcpy(pDst, pSrc, 512*1024);

CACHE_wbInvL2Wait();
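
The test case has two phases: the memset writes the source buffer, and the memcpy reads it back and writes the destination. To see which phase the cache setting affects, the two could be timed separately. Below is a minimal host-side sketch of that idea, not the code I ran on the EVM. The names `copy_timing` and `time_fill_and_copy` are hypothetical, ordinary buffers passed by the caller stand in for the fixed addresses 0x80000000 and 0x10880000, and the standard `clock()` stands in for a cycle counter. On the target, the `CACHE_wbInvL2Wait()` calls would sit where the comments indicate.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>
#include <time.h>

#define TEST_SIZE (512u * 1024u)  /* same transfer size as the test case */

/* Result of one timed run (hypothetical type, for illustration only). */
typedef struct {
    double fill_seconds;  /* time spent in memset on the source buffer */
    double copy_seconds;  /* time spent in memcpy from source to destination */
    int    verified;      /* 1 if every destination byte equals the fill value */
} copy_timing;

/* Hypothetical helper: times the fill phase and the copy phase separately. */
copy_timing time_fill_and_copy(unsigned char *dst, unsigned char *src,
                               size_t size, unsigned char value)
{
    copy_timing t;
    clock_t t0, t1, t2;
    size_t i;

    assert(dst != NULL && src != NULL);

    t0 = clock();
    memset(src, value, size);
    /* On the target, the first CACHE_wbInvL2Wait() call would go here. */
    t1 = clock();
    memcpy(dst, src, size);
    /* On the target, the second CACHE_wbInvL2Wait() call would go here. */
    t2 = clock();

    t.fill_seconds = (double)(t1 - t0) / CLOCKS_PER_SEC;
    t.copy_seconds = (double)(t2 - t1) / CLOCKS_PER_SEC;

    /* Check the copy so the timed work cannot be skipped silently. */
    t.verified = 1;
    for (i = 0; i < size; i++) {
        if (dst[i] != value) {
            t.verified = 0;
            break;
        }
    }
    return t;
}
```

Calling `time_fill_and_copy(dst, src, TEST_SIZE, value)` once per cache configuration and comparing `fill_seconds` and `copy_seconds` between runs would show whether the slowdown comes from the write phase or the copy phase. `clock()` is coarse, so repeating the call in a loop and averaging may be needed for a 512 KB transfer.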

Other information)

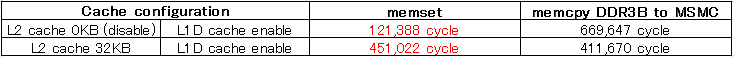

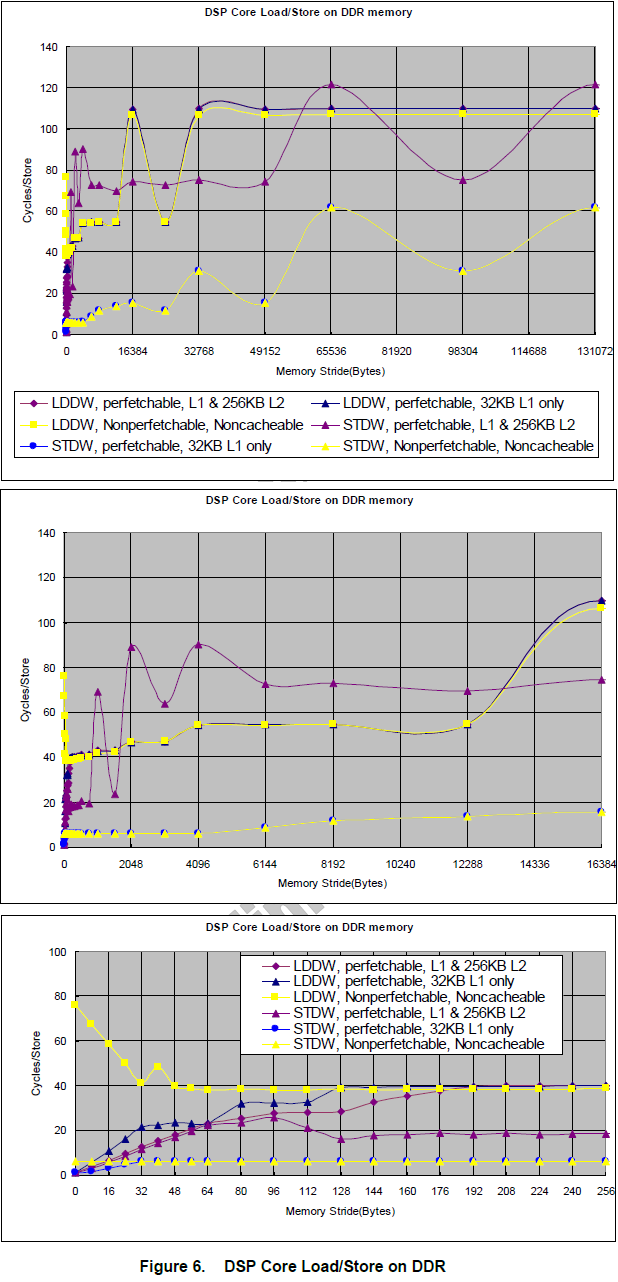

I am also referring to a document that describes C6678 memory access performance.

In Figure 6 (pp. 16-17), STDW access with the L2 cache enabled is slower than noncacheable access.

Best regards, RY