Hello,

I previously asked about 10GigE benchmark numbers in the following post, but unfortunately I could not get the numbers for the K2H.

https://e2e.ti.com/support/dsp/c6000_multi-core_dsps/f/639/t/423161

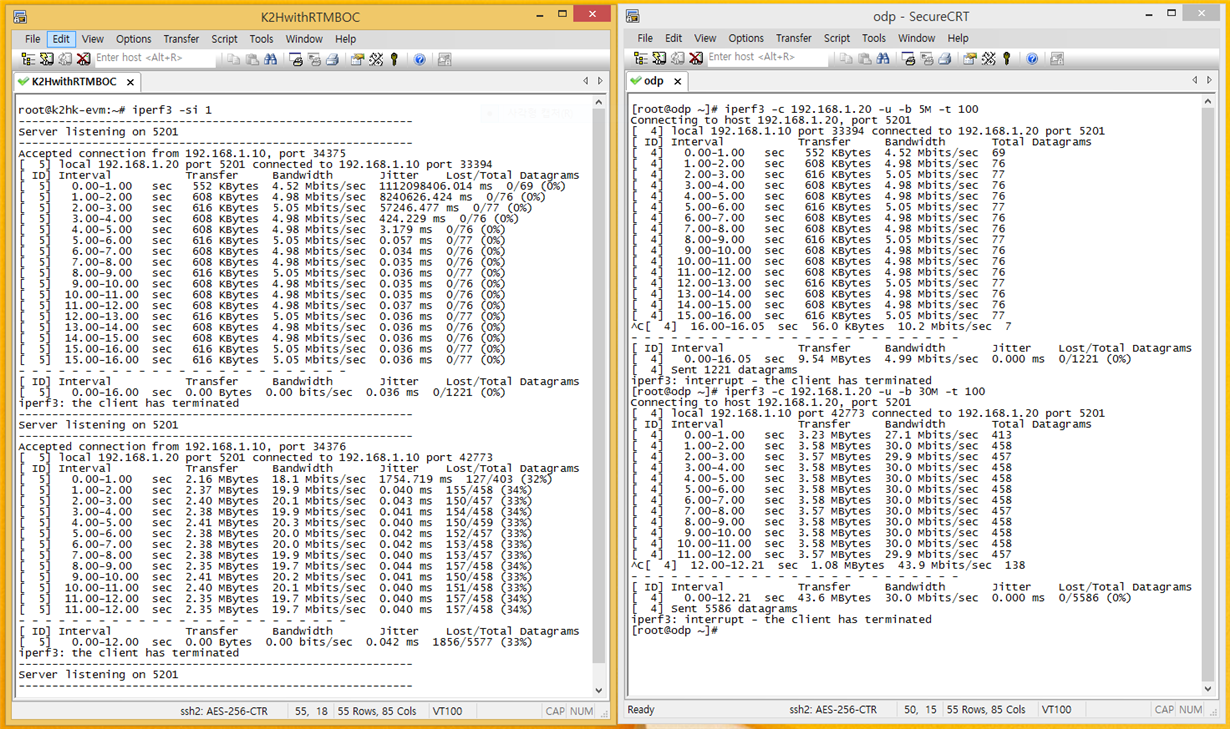

My customer now wants to know whether the K2H's 10GigE interface has been verified with the RTM-BOC, because the following link says it has not been verified yet.

My customer has a K2H EVM and is considering getting an RTM-BOC to check 10GigE performance. Before buying one, however, they are asking us to confirm that the K2H EVM's 10GigE interface really works with the RTM-BOC.

Do you have any information on this?

Best Regards,

Kawada