Hi!

I have been using the PA EMAC example as a base to transfer data between my PC and the K2E's ARM core.

My problem is simple: when I try to transfer UDP packets at a rate higher than 200-300 Mb/s, I get a lot of packet loss.

I believe the issue is not in the packet accelerator (PA) but in the queue management and/or interrupt handling, because I can see the packets leaving the PA at the higher bit rates.

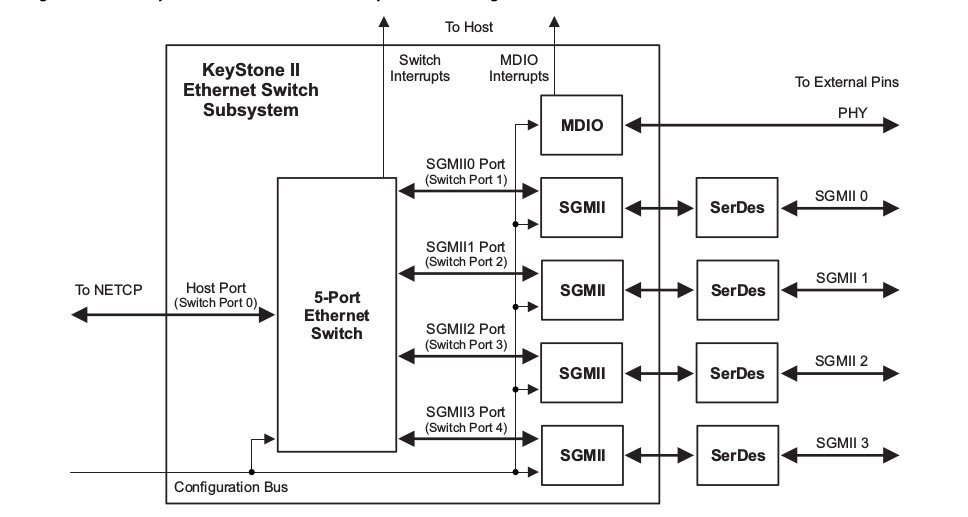

My system layout is shown below:

PA -> queue 704 -> accumulator -> interrupt (only frees descriptors etc., nothing heavy is done here)

I have tried multiple accumulator configurations (larger page entry counts, interrupt time delays, etc.), but I always end up with packet loss.
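To put numbers on the accumulator settings, here is a rough sketch in plain C (not PDK code; the function and macro names are made up for illustration). It assumes UDP over IPv4 with no VLAN tag and that the rate is measured on the wire, and it estimates how many packets per second arrive and how many can pile up during one interrupt delay window, which is the minimum an accumulator page would need to hold.

```c
#include <assert.h>
#include <stdint.h>

/* Per-frame bytes on the wire besides the L2 payload:
 * preamble + SFD (8) + Ethernet header (14) + FCS (4) + inter-frame gap (12). */
#define ETH_WIRE_OVERHEAD_BYTES  38u
#define IPV4_UDP_HEADER_BYTES    28u   /* 20-byte IPv4 header + 8-byte UDP header */
#define ETH_MIN_L2_PAYLOAD_BYTES 46u   /* shorter frames are padded up to this */
#define UDP_MAX_PAYLOAD_BYTES    65507u

/* Bytes one UDP datagram occupies on the wire (assumes no fragmentation). */
static uint32_t udp_wire_bytes(uint32_t udp_payload_bytes)
{
    assert(udp_payload_bytes <= UDP_MAX_PAYLOAD_BYTES);
    uint32_t l2_payload = udp_payload_bytes + IPV4_UDP_HEADER_BYTES;
    if (l2_payload < ETH_MIN_L2_PAYLOAD_BYTES) {
        l2_payload = ETH_MIN_L2_PAYLOAD_BYTES;
    }
    return l2_payload + ETH_WIRE_OVERHEAD_BYTES;
}

/* Whole packets per second at the given wire rate (rounded down). */
static uint64_t packets_per_second(uint64_t line_rate_bps,
                                   uint32_t udp_payload_bytes)
{
    return line_rate_bps / ((uint64_t)udp_wire_bytes(udp_payload_bytes) * 8u);
}

/* Packets that can arrive during one interrupt delay window (rounded up). */
static uint64_t packets_per_holdoff(uint64_t pps, uint32_t holdoff_us)
{
    return (pps * holdoff_us + 999999u) / 1000000u;
}
```

For example, `packets_per_second(1000000000ULL, 1472)` gives roughly 81 thousand packets per second, i.e. about 12.3 µs per packet, so the whole path from queue pop to descriptor recycle has to fit in that budget on average. With smaller payloads the budget shrinks quickly.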

So my questions are:

- Is the queue manager fast enough to achieve 1 Gb/s with just one queue?

- How can I check where the packet loss occurs after the packets leave the PA?

- If a single queue isn't fast enough, can I use multiple accumulator channels to create an alternating load-and-reset mechanism that uses 2 queues, 2 channels and 2 interrupts, i.e. half of the packets go to queue 1 and half to queue 2?

- Is there any other way to reach 1 Gb/s without using the NDK or Linux?
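Regarding the second question, one thing I could do on the ARM side is count gaps in a sequence number. The sketch below is plain C, not PDK code: it assumes the PC sender writes an incrementing 32-bit sequence number into each UDP payload, and `seq_tracker_t` / `seq_tracker_update` are hypothetical names. The idea is to call the update function once per packet from the receive handler and compare the result against the PA statistics.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: tracks gaps in a sender-supplied sequence number. */
typedef struct {
    uint32_t next_seq;      /* sequence number expected next */
    uint64_t received;      /* packets seen by the handler */
    uint64_t lost;          /* packets skipped (gaps in the sequence) */
    uint64_t out_of_order;  /* packets older than expected (late or duplicate) */
    int      started;       /* 0 until the first packet is seen */
} seq_tracker_t;

/* Call once per received packet with the sequence number from its payload. */
static void seq_tracker_update(seq_tracker_t *t, uint32_t seq)
{
    assert(t != NULL);
    t->received++;

    if (!t->started) {
        t->started = 1;
        t->next_seq = seq + 1u;
        return;
    }

    /* Signed difference handles 32-bit wrap-around of the counter. */
    int32_t delta = (int32_t)(seq - t->next_seq);
    if (delta >= 0) {
        t->lost += (uint32_t)delta;   /* delta packets were skipped */
        t->next_seq = seq + 1u;
    } else {
        t->out_of_order++;            /* arrived later than expected */
    }
}
```

If the PA counters say all packets were forwarded but `lost` keeps growing, the drops are happening somewhere between queue 704 and the interrupt handler rather than on the wire or in the PA.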

My system specs are as follows:

K2E, ARM core 0

PDK 4.0.2

My application is bare metal but uses about 90% of the PA EMAC example code.

Send and receive work perfectly at lower rates.

The original PA EMAC example has the same issue.

Regards