Hi,

I have a self-developed, XDAIS-compliant IUNIVERSAL algorithm (MYALG) running on the DSP of a DM6446 SoC.

I need real-time processing.

To achieve that, MYALG's process call must complete in less than 30 ms.

Processing time (the algorithm's performance) is measured with TSCL (the counter does not overflow during a measurement, so I don't need TSCH).

TSC is the DSP's own time-stamp counter, so it seems to be a good timer for measuring execution cycles.

In addition, the processing time is measured by MYALG itself, inside its function ALG_process(), so only the DSP running time is measured.
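For reference, the measurement described above can be sketched roughly as follows. This is only a minimal illustration, not the actual MYALG code: the helper names `read_tscl()`, `cycles_start()`, `cycles_elapsed()` and `tscl_wrap_seconds()` are hypothetical, and the host-side stand-in counter exists only so that the sketch also compiles off-target.

```c
#include <stdint.h>

#ifdef _TMS320C6X
#include <c6x.h>                 /* TI compiler header declaring TSCL/TSCH */
#else
/* Hypothetical host-side stand-in for the hardware counter. */
uint32_t fake_tscl = 0;
#endif

/* Start the free-running counter; on C64x+ any write to TSCL starts it. */
void cycles_start(void)
{
#ifdef _TMS320C6X
    TSCL = 0;
#endif
}

/* Sample the low 32 bits of the time-stamp counter. */
uint32_t read_tscl(void)
{
#ifdef _TMS320C6X
    return (uint32_t)TSCL;
#else
    return fake_tscl;
#endif
}

/* Elapsed cycles between two samples. Unsigned subtraction remains
 * correct across a single 32-bit wraparound of the counter. */
uint32_t cycles_elapsed(uint32_t start, uint32_t end)
{
    return end - start;
}

/* Seconds until a 32-bit cycle counter wraps at the given core clock. */
double tscl_wrap_seconds(double core_hz)
{
    return 4294967296.0 / core_hz;
}
```

Inside ALG_process() the pattern would be `t0 = read_tscl();`, then the processing, then `cycles = cycles_elapsed(t0, read_tscl());`. At a 594 MHz core clock the 32-bit counter wraps roughly every 7.2 s, far longer than any interval measured here, which is why TSCL alone is enough.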

After some digging (prompted by some performance issues), I found some strange processing times.

The timings from the simulator, the JTAG emulator, and real-time running (in a DVSDK environment) are quite different.

I could understand the differences between the simulator and the other two as a matter of simulation accuracy (although I tried to choose the best simulator settings).

But I don't understand the difference between running under the JTAG emulator and running in real time.

Processing times are roughly 2-3 times slower in the real-time environment than under the JTAG emulator (about 1.8x with the cache disabled and about 2.8x with the cache enabled, per the benchmarks in the details below).

Once again: these processing times cover only the DSP running inside the function ALG_process()!

So I need to do some optimization work, but I don't know where to start.

I would appreciate it if anybody could explain the reason for this performance drop.

Do I need to change some configuration, or do I have to accept these performance ratios?

Thanks,

Peter

Details:

A./ Algorithm development

CCS v5.3.0.00090

Texas Instruments Simulators: v5.3.3.0

JTAG Emulator: Blackhawk LAN560

Board's DSP core freq: 594 MHz

Board's DDR2 freq: 162 MHz

The measured benchmarks are:

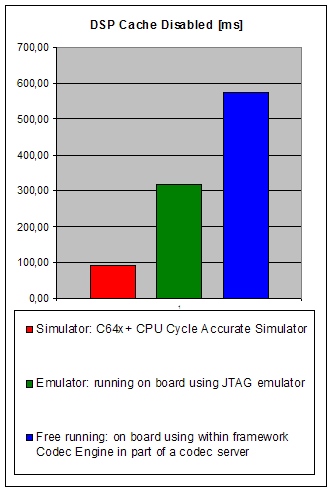

A.1./ When DSP cache is disabled:

92.67 ms ( 55,043,713 sysclk) - Simulator: C64x+ CPU Cycle Accurate Simulator

316.60 ms (188,061,064 sysclk) - Emulator: running on board using JTAG emulator

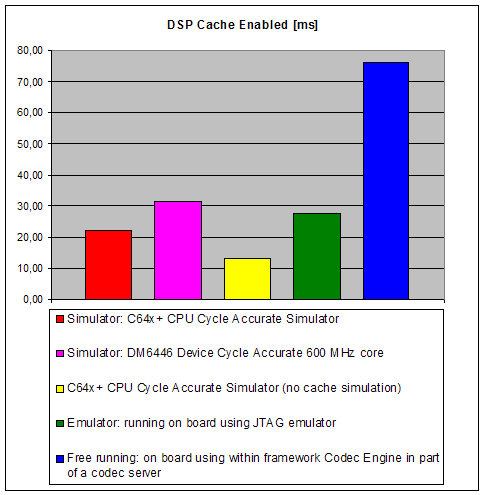

A.2./ When DSP cache is enabled (L1P=32K, L1D=32K, L2=64K):

22.07 ms ( 13,109,186 sysclk) - Simulator: C64x+ CPU Cycle Accurate Simulator

31.70 ms ( 18,829,160 sysclk) - Simulator: DM6446 Device Cycle Accurate (600 MHz core)

27.46 ms ( 16,310,579 sysclk) - Emulator: running on board using JTAG emulator

A.3./ Simulator without cache simulation:

13.07 ms ( 7,765,643 sysclk) - Simulator: C64x+ CPU Cycle Accurate Simulator (no cache simulation)

B./ Testing in final environment

DVSDK: dvsdk_wince_01_11_00_03_patch_01

DSP BIOS: 5_41_11_38

Code Generation Tools: C6000_7_3_3

XDCTOOLS: 3_23_00_32

Codec Engine: 2_26_01_09

Framework Components: 2_26_00_01

DMAI: 1_27_00_06

XDAIS: 6_26_01_03

WinCE Utils: 1_01_00_01

BIOS utils: 1_02_02

DSPLink: 1_65_00_03

CE utils: v1_07

Algorithms opened by the application (in the codec server): DM6446-MPEG4 encode, JPEG encode, MYALG

OS: WinCE 6 R3

Board's DSP core freq: 594 MHz

Board's DDR2 freq: 162 MHz

The measured benchmarks are:

B.1./ When DSP cache is disabled:

575.10 ms (341,609,968 sysclk) - Free running: on board within the framework (Codec Engine, as part of a codec server)

B.2./ When DSP cache is enabled (L1P=32K, L1D=16K, L2=64K):

75.97 ms ( 45,124,660 sysclk) - Free running: on board within the framework (Codec Engine, as part of a codec server)
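For anyone who wants to check the numbers, the millisecond values and the slowdown ratios follow directly from the cycle counts and the 594 MHz core clock. The small sketch below only reproduces that arithmetic; the function names are hypothetical and it is not part of MYALG.

```c
#include <stdint.h>

#define CORE_HZ 594000000.0      /* board's DSP core frequency (594 MHz) */

/* Convert a TSCL cycle count to milliseconds at the core clock. */
double cycles_to_ms(uint32_t cycles)
{
    return (double)cycles * 1000.0 / CORE_HZ;
}

/* Convert a time budget in milliseconds to core clock cycles. */
double ms_to_cycles(double ms)
{
    return ms * CORE_HZ / 1000.0;
}

/* Ratio of the in-framework cycle count to the JTAG emulator cycle count. */
double slowdown(uint32_t framework_cycles, uint32_t emulator_cycles)
{
    return (double)framework_cycles / (double)emulator_cycles;
}
```

With the figures above, `slowdown(45124660u, 16310579u)` is about 2.77 (cache enabled) and `slowdown(341609968u, 188061064u)` is about 1.82 (cache disabled). The 30 ms target corresponds to 17,820,000 cycles, so the JTAG emulator run (16,310,579 cycles) fits the budget while the in-framework run (45,124,660 cycles) exceeds it by roughly 2.5 times.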