Hi All,

Can anybody help me with the minimum ADC acquisition time for the CC2540/CC2541 BLE SoC? Also, what is the input sampling capacitance of the ADC SAR input?

Thanks,

Joemir


There's a lot going wrong here.

The real issue is that the "characteristic resistance" of 176kohms, while probably accurate for the conditions under which it was determined, is not very meaningful in terms of designing new ADC applications. The actual criteria that are required are the input RC sample-and-hold network values (RI and CI) below, as well as the percentage of the sample period that the switch is allowed to charge. The sample frequency is 4MHz so the sampling time should be well under 250ns.

An actual answer to the question posed by Joemir and myself would consist of three parameters: RI, CI, and Ts. The answers provided in this thread and elsewhere don't contain any of that information.

To attempt to partially answer this question based on the 176kohm characteristic resistance with a low-impedance 3V input, we can assume that the sampling capacitor is exposed to the 3V input voltage long enough to fully charge, and that it discharges completely every 250ns sample period.

If the apparent resistance into the pin is 176k at 3V, that means the average current into the pin over the 250ns sample period is about 17uA. Using the basic definition of a farad (F = A * s / V), we can calculate the capacitance of the sample-and-hold capacitor as C = 17uA * 250ns / 3V = 1.4pF. This is the same result as in the other thread, but the origin of the formula used there, 176kohm = 1 / (C * 4MHz), was not obvious or explained.
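For anyone who wants to check the arithmetic, here is a minimal Python sketch showing that the average-current calculation and the 176kohm = 1 / (C * 4MHz) formula are the same thing rearranged. The function names are mine and purely illustrative; the only inputs are the 176kohm, 4MHz and 3V figures quoted in this thread, and the full-charge/full-discharge assumption from above still applies.

```python
# Values quoted in the thread
R_EQ = 176e3   # ohms, "characteristic resistance"
F_S = 4e6      # Hz, sampling frequency
V_IN = 3.0     # volts, low-impedance input assumed above


def cap_from_average_current(r_eq, f_s, v_in):
    """C = I_avg * T / V, with I_avg = V / R_eq and T = 1 / f_s."""
    i_avg = v_in / r_eq      # average current into the pin
    period = 1.0 / f_s       # one sample period
    return i_avg * period / v_in


def cap_from_switched_cap_formula(r_eq, f_s):
    """R_eq = 1 / (C * f_s), rearranged for C."""
    return 1.0 / (r_eq * f_s)


c1 = cap_from_average_current(R_EQ, F_S, V_IN)
c2 = cap_from_switched_cap_formula(R_EQ, F_S)
print(f"{c1 * 1e12:.2f} pF, {c2 * 1e12:.2f} pF")  # prints "1.42 pF, 1.42 pF"
```

The input voltage cancels out of the first calculation, which is why the formula in the other thread does not need it.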

Assuming that the sampling capacitor is 1.4pF, we still do not know how quickly that capacitor can charge (governed by RI) or how much time the ADC allows the capacitor to charge (governed by Ts). Those two parameters are just as critical for ADC input design but the 176kohm/3V spec does not give any indication of their value.
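To show why RI and Ts matter so much, here is a rough sketch that treats the input as a single-pole RC and asks how much total resistance (source plus internal RI) can be tolerated if the capacitor has to settle to within 1/2 LSB during Ts. The Ts value of 125ns is purely a placeholder I picked (half the 250ns period); it is not from the datasheet, and the real input stage may not behave like a simple RC at all.

```python
import math


def time_constants_for_bits(n_bits):
    """Number of RC time constants needed to settle within 1/2 LSB of n_bits."""
    return math.log(2 ** (n_bits + 1))


def max_total_resistance(t_s, c_i, n_bits):
    """Largest series resistance that still settles within t_s seconds."""
    return t_s / (c_i * time_constants_for_bits(n_bits))


C_I = 1.4e-12   # farads, the estimate derived above
T_S = 125e-9    # seconds, HYPOTHETICAL: half of the 250 ns sample period

for bits in (8, 10, 12):
    r_max = max_total_resistance(T_S, C_I, bits)
    print(f"{bits} bits: total R < {r_max / 1e3:.1f} kohm")
```

Change T_S by a factor of two either way and the allowable resistance moves by the same factor, which is exactly why the missing parameters are needed for a real design.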

Hello CJ,

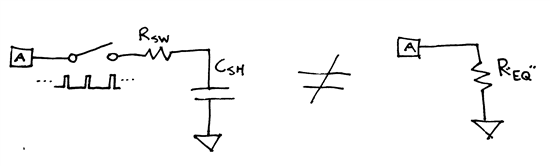

You are correct in stating that the input impedance (reactance X_c) of a capacitor is defined by 1 / (2pi * f * C), but for a switched-capacitor circuit the equivalent input resistance is based on the average current flowing into the ADC input and is given by 1 / (f * C), as can be seen in the picture.

The charging current of a capacitor asymptotically approaches zero as the capacitor becomes charged up to the connected source voltage. This means you are correct in stating that the input impedance is not constant during charge, but we are using the average to define the equivalent resistance here.
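As a rough illustration of the averaging, the sketch below integrates the exponential charging current over the switch-on time and converts the charge per cycle into an average-current equivalent resistance. It assumes a single capacitor that starts every cycle fully discharged and charges from an ideal source through a series resistance; `r_series` and `t_on` are illustrative parameters, not datasheet values, and this is not a model of the actual input stage.

```python
import math


def equivalent_resistance(f_s, c, r_series, t_on):
    """Average-current equivalent resistance of a switched capacitor.

    Assumes the capacitor is empty at the start of each cycle and charges
    through r_series for t_on seconds from an ideal voltage source.
    """
    # Fraction of the full charge C*V actually delivered in t_on
    settled_fraction = 1.0 - math.exp(-t_on / (r_series * c))
    # Charge per cycle is C * V * fraction, so I_avg = f_s * C * V * fraction
    return 1.0 / (f_s * c * settled_fraction)


F_S = 4e6        # Hz
C = 1.42e-12     # farads, from R_eq = 1 / (f * C) with R_eq = 176 kohm
T_ON = 125e-9    # seconds, HYPOTHETICAL switch-on time

for r_series in (1e3, 100e3):
    r_eq = equivalent_resistance(F_S, C, r_series, T_ON)
    print(f"r_series = {r_series / 1e3:.0f} kohm -> R_eq = {r_eq / 1e3:.0f} kohm")
```

When the capacitor settles completely, the result collapses to 1 / (f * C); when it does not, less charge is transferred per cycle and the apparent resistance rises.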

I understand the methodology used to arrive at the "equivalent input resistance model" specification, but my position is that the "equivalent input resistance" specification is not sufficient for anyone to properly design anything.

The only aspect that is actually equivalent if you model the switched-cap input as a resistance is the power delivered into the pin, but that isn't something that anyone really cares much about. Obviously lower power is better, but when you're designing an ADC front-end, conversion fidelity, not small amounts of power, is the primary concern. The "equivalent" input resistor model is only equivalent in terms of power consumption, not in terms of conversion fidelity. If the "equivalent input resistance model" were accurate with regard to conversion fidelity, then you would be able to add an external 176k resistor between the ADC input and the ADC reference and get ADC results that are exactly midscale. I guarantee that if you actually did that experiment, the ADC results wouldn't even be close to midscale. The ADC input just doesn't work that way.

Somewhere the actual, useful specifications I'm looking for are written down. They have to be, or the CC2541 (and every other IC containing this ADC) would not exist. Having engineers characterize it themselves or suggesting they all add the extra cost and complexity of an external buffer seems like a poor approach.

- "The formula for the impedance of a capacitor is 1 / (2pi * f * C), NOT 1 / (f * C)": This is wrong. See chapter 12.4 in "Design of Analog CMOS Integrated Circuits" (Razavi) or chapter 5 in "Analog MOS integrated circuits for signal processing" (Gregorian/ Temes). The equivalent resistance at the input of a SC integrator is 1/(f*C).

- The equivalent resistor model can be used to calculate how to drive the ADC input for a given ENOB. See the attached document (part of an unreleased app note) for how it can be used to calculate the component values for a resistor ladder. 5810.SOC_ADC.docx

- Since the ADC is a sigma-delta, I'm not sure how relevant it is to talk about "minimum ADC acquisition time", since the ADC output is an average of n "samples"/clock cycles.

Acquisition time is absolutely relevant. Regardless of the ADC topology (SAR, S-D, etc.), the very first stage is always a sampling of some analog input. The quality of the rest of the conversion process is contingent (among so many other things) on how well the sampled voltage on the sample-and-hold cap matches the real voltage on the pin, so it absolutely matters how that sampling is done.

It doesn't matter if you're sampling at 4MHz as you are with this sigma-delta ADC or if you're sampling at 1kHz with an old SAR ADC; the timing of the front-end sample-and-hold circuit is critical, especially if you're sampling a high-impedance signal. The only way to know the timing of that circuit is to have a known AC representation of that circuit, and a 176k resistor is definitely NOT an AC representation.

Go back to my little thought experiment. You put a discrete 176k resistor between the ADC input and the ADC reference. The datasheet says the ADC output should be midscale if you sample that pin. No one should believe that. What do you think the output would really be, and what parameters would you need to know to be sure?

The details of the ADC input stage do not match the earlier figure in three respects: first, the initial charge on the sampling capacitor is not necessarily zero; second, the ADC is differential; and third, the input is sampled in two different ways during one clock period. I will not go into the actual details here, but unfortunately a simple AC model would probably not be a good starting point, as the non-linearities and the changing impedance during the clock period would be difficult to capture. Rather, the given impedance is meant to give the user an idea of the required source impedance, i.e. how much current the source must be able to deliver.

We can model this as an ideal voltage source with a series output impedance called R0. The output of R0 is then connected to one of the IFADC inputs, which has an equivalent input resistance Rin (176k). If the voltage of the ideal voltage source is Vin, the voltage after R0 is V0, which is ideally the input voltage seen by the ADC.

Using Ohm's law, and assuming that the acceptable relative voltage error is much smaller than 1, make certain that R0 < Rin*e, where e is the acceptable relative error.

E.g. if you accept a voltage droop of 1/1000 from the ideal voltage to the ADC input (10 bits would be 1/1024), then e=1/1000 and Rin=176k, so R0 < 176 ohm. I would advise keeping a good margin here if accuracy is important, as there are also some internal switches which add non-linearity and some resistance to the equation.
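The rule of thumb above can be put into a short Python sketch. The helper names are illustrative only; the exact divider expression is plain Ohm's law for R0 in series with Rin, and no margin for the internal switches or process variation is included.

```python
def max_source_resistance(r_in, rel_error):
    """Approximate limit R0 < Rin * e, valid when e is much smaller than 1."""
    return r_in * rel_error


def divider_error(r0, r_in):
    """Exact relative error (Vin - V0) / Vin of the R0 / Rin divider."""
    return r0 / (r0 + r_in)


R_IN = 176e3  # ohms, equivalent input resistance quoted in the thread

for bits in (8, 10, 12):
    e = 1.0 / 2 ** bits
    r0 = max_source_resistance(R_IN, e)
    print(f"{bits} bits: R0 < {r0:.0f} ohm "
          f"(exact error at that R0: {divider_error(r0, R_IN):.2e})")
```

The exact error at the limit is always slightly below e, so the approximation errs on the safe side.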

If you don't have an ideal voltage source, you need to define one which is good enough. One way is to create a voltage divider where the resistor in parallel with the ADC is significantly smaller than the ADC input resistance. I would not recommend adding only a single series resistance to the ADC, though, as the ADC impedance also has significant process variation. Hence, even if you find a solution that works well with a particular device, you should expect very large process variation and a resulting large performance spread.