Hello Chris and InstaSPIN community,

I am using a custom low-inductance, 3 pole-pair, 9.8 V BLDC motor with the DRV8301-69M-KIT. After reading every piece of literature and every forum post I could find, I am still unable to spin the motor at its rated 20 krpm. Currently it tops out at around 14 krpm. I have varied USER_PWM_FREQ_kHz from 10 to 80 and have also tried increasing #define USER_MAX_VS_MAG_PU to 1.333, as in lab10.
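For reference, here is a minimal sketch of the electrical frequencies these speeds imply for a 3 pole-pair motor, and how many PWM periods fit into one electrical cycle. The function names are hypothetical helpers written for this post, not part of MotorWare or user.h.

```c
/* Hypothetical helpers for this post; not part of MotorWare. */

/* Electrical frequency (Hz) for a mechanical speed in rpm and a pole-pair count. */
static double electrical_freq_hz(double speed_rpm, unsigned pole_pairs)
{
    return speed_rpm * (double)pole_pairs / 60.0;
}

/* Number of PWM periods that fit into one electrical cycle. */
static double pwm_periods_per_elec_cycle(double pwm_freq_khz, double elec_freq_hz)
{
    return pwm_freq_khz * 1000.0 / elec_freq_hz;
}
```

With these, electrical_freq_hz(20000.0, 3) gives 1000 Hz at the rated speed and electrical_freq_hz(14000.0, 3) gives 700 Hz at the speed I currently reach. At USER_PWM_FREQ_kHz = 25, pwm_periods_per_elec_cycle(25.0, 1000.0) gives 25 PWM periods per electrical cycle at 20 krpm.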

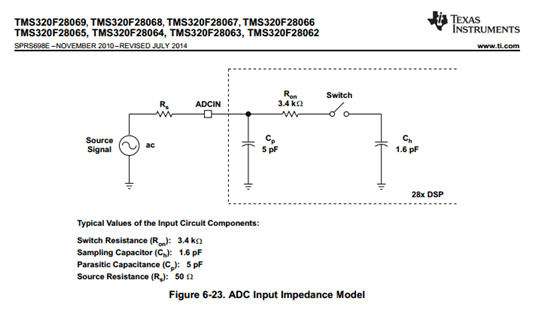

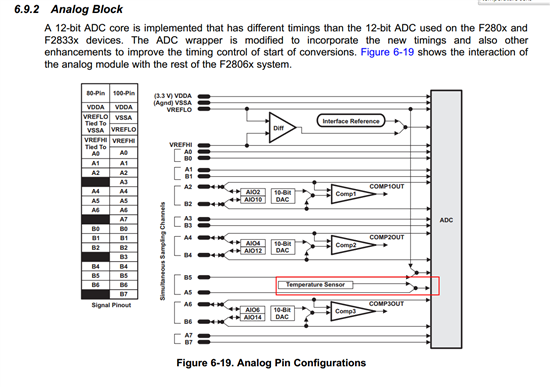

I read that I may get poor results at a bus voltage of 9.8 V due to ADC resolution. Is there a recommended way to modify the board to solve this issue?
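To put a rough number on the resolution concern, the sketch below estimates how much of the ADC range a 9.8 V bus uses. It assumes a 12-bit ADC and a bus-voltage full scale of 66.32 V, which is the value I believe USER_ADC_FULL_SCALE_VOLTAGE_V has for this kit. Both the constant and the helper names are assumptions to check against the actual user.h.

```c
/* Assumed values; verify against user.h for the board in use. */
#define ASSUMED_ADC_FULL_SCALE_V  (66.32)   /* assumed USER_ADC_FULL_SCALE_VOLTAGE_V */
#define ADC_COUNTS                (4096.0)  /* 12-bit converter: 2^12 codes */

/* Volts represented by one ADC count on the bus-voltage channel. */
static double adc_volts_per_count(void)
{
    return ASSUMED_ADC_FULL_SCALE_V / ADC_COUNTS;
}

/* Approximate number of ADC counts used by a given bus voltage. */
static double adc_counts_for_bus(double vbus_v)
{
    return vbus_v / adc_volts_per_count();
}
```

Under these assumptions one count is about 16 mV, and a 9.8 V bus uses roughly 600 of the 4096 available counts, so most of the measurement range goes unused.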

My goal is speed control from 300 rpm to 20 krpm.

The best results I have gotten so far are with the attached user.h file.

Thanks in advance!

USER_PWM_FREQ_kHz = 25

USER_MAX_VS_MAG_PU = 1.0