Other Parts Discussed in Thread: SYSBIOS

Tool/software: TI-RTOS

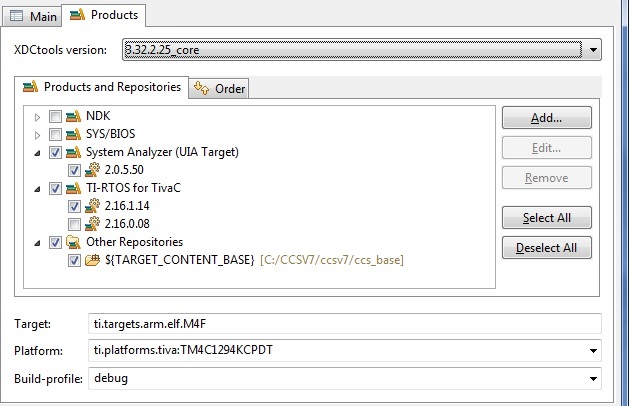

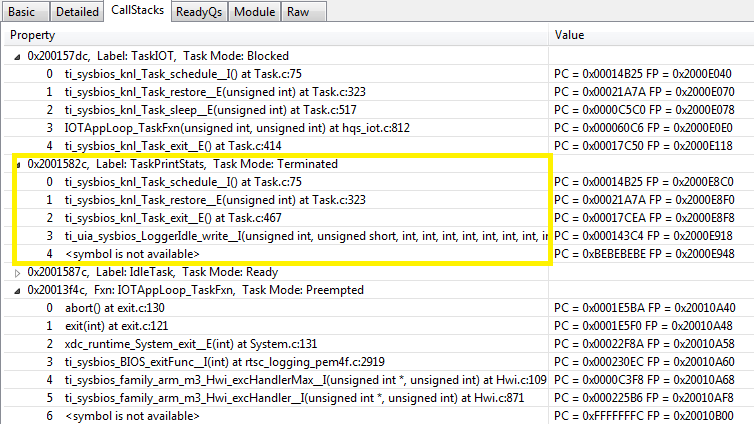

TI-RTOS 2.16.1.14 has several issues:

1. A Boolean switch toggled from a GUI Composer v1.0 button is not detected as a state change inside a running task. The Boolean value must already be set before the task is entered for it to be asserted inside the task.

2. Calling Task_sleep(2000) inside a task that is about to exit produces no Sleep indication in the RTOS Analyzer Details or Live events views; the time column shows 0000 instead. Ending the task with Task_exit() or a plain return produces the same result.

3. Reverting the target from an MCU with 1 MB of flash to one with 512 KB of flash fails to place the TivaWare driver library interrupt vector table (.vector) at the correct location, and the application then fails to build with M3 Hwi module support.

4. When the project is imported and debugged in CCS 7, the RTOS Analyzer graphs (Load and Execution) show no live data, yet switching to the Details view shows values being posted.
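Regarding item 1, one plausible cause (an assumption, not confirmed here) is that the variable bound to the GUI Composer button is not declared `volatile`. GUI Composer typically writes target memory through the debug connection while the CPU runs, so if the optimizer keeps the flag in a register for the life of the task loop, the task never re-reads it and only ever sees the value set before it started. The sketch below uses a hypothetical global `guiSwitch` and a hypothetical helper `switchChanged()` that reports each transition exactly once; in the real task the loop would call `Task_sleep()` between polls.

```c
#include <stdbool.h>

/* Hypothetical flag bound to the GUI Composer button. It is a global so
 * GUI Composer can find it by symbol name, and volatile so every read goes
 * to memory instead of a register copy cached by the optimizer. */
volatile bool guiSwitch = false;

/* Last value observed by the task, used to detect transitions. */
static bool lastSwitch = false;

/* Returns true exactly once for each change of guiSwitch since the
 * previous call, and false otherwise. */
bool switchChanged(void)
{
    bool now = guiSwitch;              /* one fresh read of the shared flag */
    bool changed = (now != lastSwitch);
    lastSwitch = now;
    return changed;
}

/* Inside the TI-RTOS task function the poll would look roughly like:
 *
 *     while (1) {
 *         if (switchChanged()) {
 *             // react to the new value of guiSwitch
 *         }
 *         Task_sleep(10);  // yield so lower-priority tasks can run
 *     }
 */
```

Polling for a change, rather than reading the flag once at task entry, is what lets a button press made after the task starts be seen at all.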
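Regarding item 2, two SYS/BIOS behaviors are worth keeping in mind. First, the `Task_sleep()` argument is a count of Clock ticks, not milliseconds, so `Task_sleep(2000)` is a 2 s delay only when `Clock_tickPeriod` is 1000 microseconds (the default). Second, returning from a task's top-level function calls `Task_exit()` implicitly, which is consistent with both endings behaving the same. The `msToTicks()` helper below is a hypothetical convenience, not part of the TI-RTOS API; on the target its second argument would be `Clock_tickPeriod` from `<ti/sysbios/knl/Clock.h>`.

```c
#include <stdint.h>

/* Hypothetical helper: convert a delay in milliseconds to SYS/BIOS Clock
 * ticks. tickPeriodUs is the Clock tick period in microseconds (on the
 * target, pass Clock_tickPeriod). Rounds up so a nonzero delay never
 * becomes zero ticks. */
uint32_t msToTicks(uint32_t ms, uint32_t tickPeriodUs)
{
    uint64_t us = (uint64_t)ms * 1000u;
    return (uint32_t)((us + tickPeriodUs - 1u) / tickPeriodUs);
}

/* Example task body (target only):
 *
 *     Void sleeperFxn(UArg a0, UArg a1)
 *     {
 *         Task_sleep(msToTicks(2000, Clock_tickPeriod));  // about 2 s
 *         // Returning here has the same effect as calling Task_exit().
 *     }
 */
```

Rounding up keeps very short delays from collapsing to `Task_sleep(0)` when the tick period is coarse.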