Tool/software: TI-RTOS

Is the TI-RTOS kernel multitasking, or only multithreading?

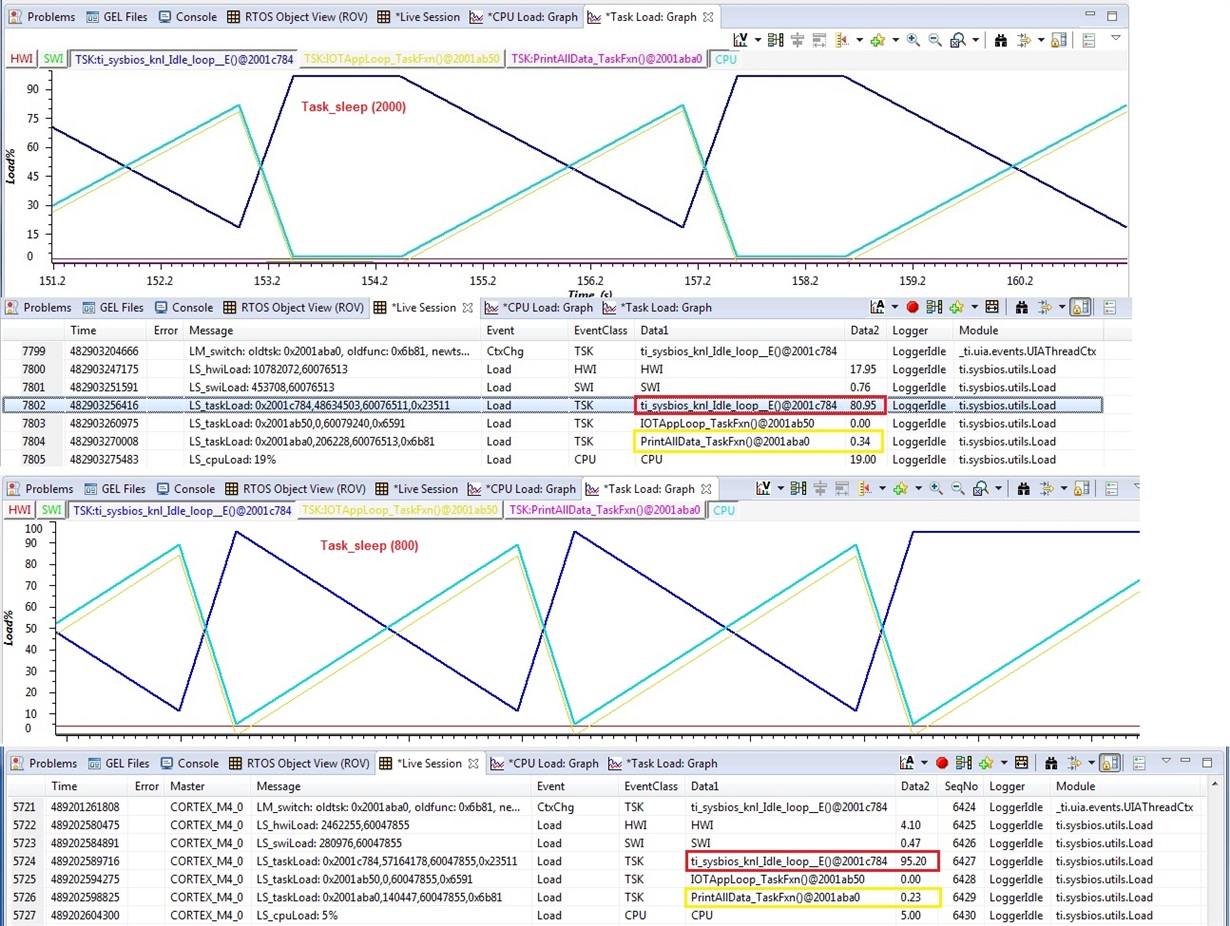

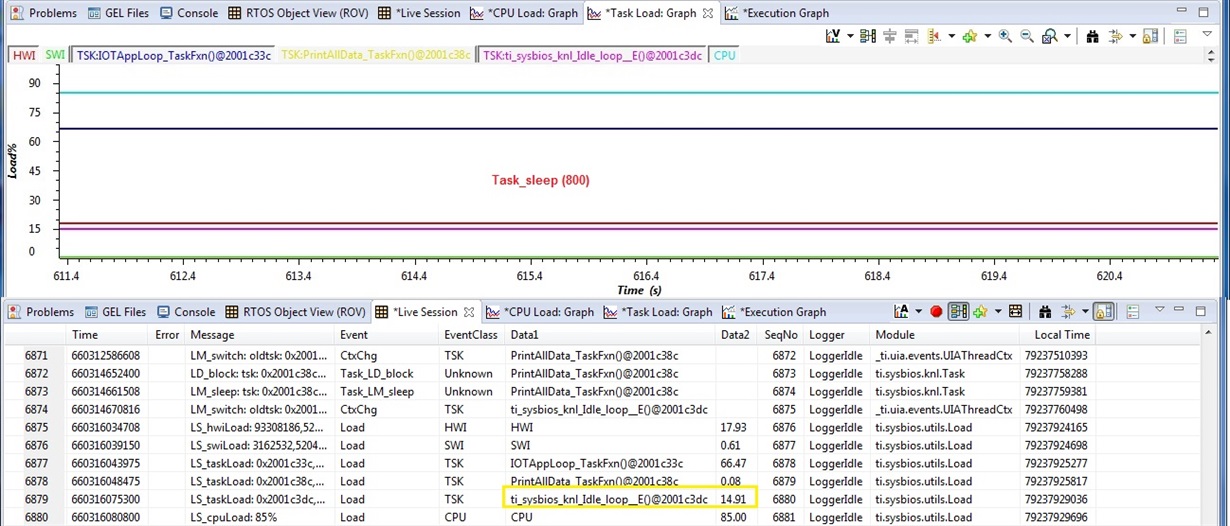

The sleep duration of one constructed task seems to affect when another constructed task (or several of them) starts executing. It may not be the entire sleep duration, but the kernel's CPU load rises steeply, seemingly exponentially, with the total sleep duration configured across all constructed tasks. Typically the kernel holds a steady CPU load of about 15% when a task's sleep duration is 400. Yet when the same task's sleep duration is set to 2000, the kernel's CPU load momentarily peaks above 90% as the sleeping task wakes up, and that task's own load also peaks above 90%.

The point is that this behavior does not seem characteristic of a preemptive multitasking kernel. The Windows desktop GUI relies on a preemptive multitasking kernel to schedule background program threads seamlessly, without the user being aware that it is happening. We can't expect exactly the same from a 150 DMIPS CPU, but a TI-RTOS program is arguably much smaller in scale by comparison.