Part Number: TDA4VMXEVM

Hi,

I am trying to use the TIDL model import utility (tidl_model_import.out) to generate the .bin file for TDA4X.

SDK details: psdk_rtos_auto_j7_06_00_01_00

TIDL details: tidl_j7_00_09_01_00

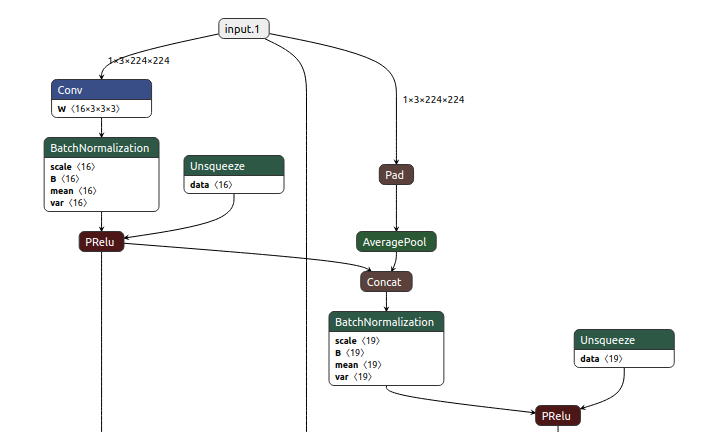

I converted a PyTorch model (.pth) to ONNX format (.onnx) in order to use it. However, the import utility fails with a segmentation fault. Please find the log below:

*******************************************************************************************************************************************************

~/psdk_rtos_auto_j7_06_00_01_00/tidl_j7_00_09_01_00/ti_dl/utils/tidlModelImport$ ./out/tidl_model_import.out ../../test/testvecs/config/import/public/onnx/tidl_import_espnet.txt

TF Model (Proto) File : ../../test/testvecs/models/public/onnx/espnet/espnet_p_2_q_3.onnx

TIDL Network File : ../../test/testvecs/config/tidl_models/onnx/espnet/espnet_p_2_q_3.bin

TIDL IO Info File : ../../test/testvecs/config/tidl_models/onnx/espnet/espnet_p_2_q_3_

Segmentation fault (core dumped)

******************************************************************************************************************************************************

Kindly let me know how to proceed in this case.

Thanks in advance,

Prajakta