Dear TI support team,

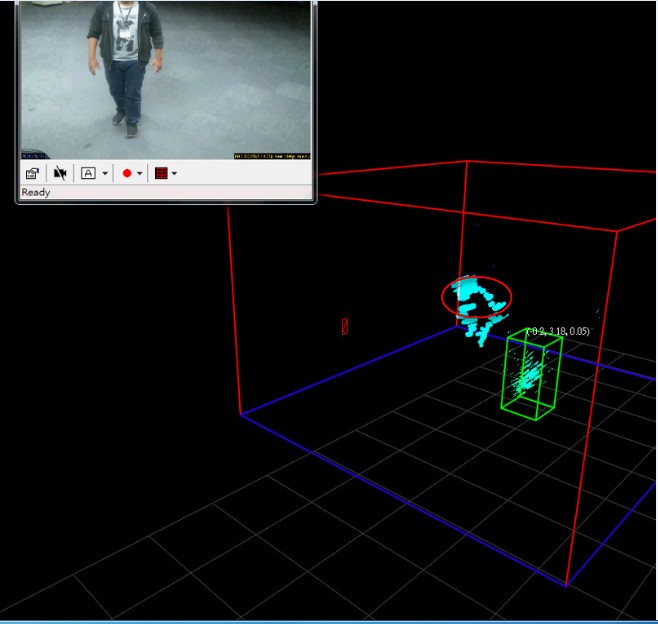

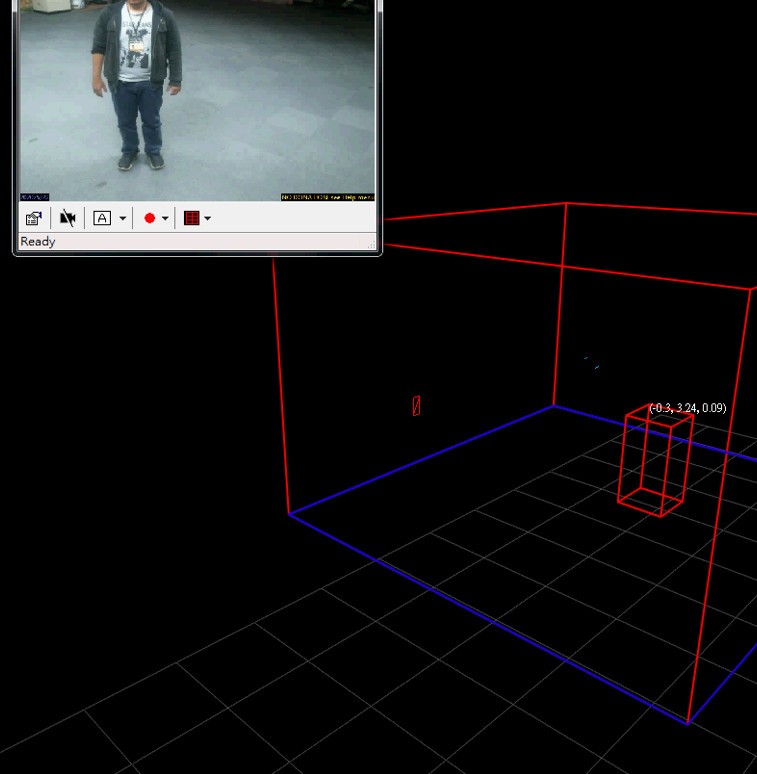

1) Which project (lab) reference source is the better starting point for practicing and implementing a "Detecting Human Falls and Stance" algorithm? Should I refer to the 3D people demo or the scan area demo?

2) I am just getting started and am tracing the code of the 3D people demo, and I would like to try a simple exercise. Could you please point me to where in the code the point cloud data is output, and to the related function where I could calculate the following for a person:

- height of each target

- dimensions of each target
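To make clear what I mean, here is a rough sketch of the calculation I have in mind. It is not taken from the demo source. It assumes each point has already been associated with a target ID, that coordinates are in metres with z pointing up, and that the floor is at z = 0. The function name `target_dimensions` and the tuple layout are hypothetical.

```python
from collections import defaultdict


def target_dimensions(points):
    """Estimate per-target size from a labelled point cloud.

    points: iterable of (target_id, x, y, z) tuples.
    Assumptions (not from the demo): metres, z up, floor at z = 0.
    """
    # Group the points by the target they belong to.
    by_target = defaultdict(list)
    for target_id, x, y, z in points:
        by_target[target_id].append((x, y, z))

    result = {}
    for target_id, pts in by_target.items():
        xs, ys, zs = zip(*pts)
        result[target_id] = {
            # Axis-aligned bounding-box extents in the horizontal plane.
            "width": max(xs) - min(xs),
            "depth": max(ys) - min(ys),
            # Highest point above the assumed floor at z = 0.
            "height": max(zs),
        }
    return result


if __name__ == "__main__":
    sample = [
        (0, -0.2, 1.0, 0.3),
        (0, 0.2, 1.3, 1.7),
        (1, 2.0, 3.0, 0.9),
    ]
    for tid, dims in target_dimensions(sample).items():
        print(tid, dims)
```

What I need to know is where in the demo I can get the points and their target association so that I could plug in something like this.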

Thanks

Ben.