Other Parts Discussed in Thread: CC2540

Tool/software: TI-RTOS

Hi!

We are working with the Texas Instruments CC2640R2F SimpleLink Bluetooth low energy wireless MCU, running a modified version of the SPP_BLE client/server example code.

We use the blestack (BLE 4.2) and SimpleLink CC2640R2 SDK version 1.40.00.45.

My questions concern why we can’t get even close to the nominal 1 Mbit/s PHY transfer speed, as the total roundtrip time is too high due to

Our current setup consists of one (1) Central and four (4) Peripherals running the following parameter settings:

Each transfer consists of one 10-byte request C→P and one 80 – 1030-byte response P→C.

Each transfer is sent to all peripherals every 200 ms.

All tests always send 100 transfers.

The tests use the maximum payload we could achieve with a normal PDU size of 27 and a connection interval of 26.5 ms, in a 1-peripheral and a 4-peripheral setup.

Below are the maximum possible settings we can use until we start getting peripheral pending issues due to scheduling latency and transfers not completing fast enough.

| Parameters | Test 1 P | Test 4 P |
|---|---|---|
| Number of peripherals | 1 | 4 |
| Max number of PDU | 30 | 30 |
| Max PDU size | 27 | 27 |
| MTU size | 23 | 23 |
| Packet payload size | 20 | 20 |
| Total payload size | 530 | 80 |
| Number of packets per interval | 27 | 4 |
| Total number of transfers | 100 | 100 |
| Total number of packets | 2650 | 400 |
| Min connection interval | 26.5 ms | 26.5 ms |
| Max connection interval | 26.5 ms | 26.5 ms |
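
For reference, the packet counts in the table follow from the MTU. This is a minimal sketch, assuming the response is sent as GATT notifications with a 3-byte ATT header (opcode plus handle), which leaves 20 bytes of payload per packet at MTU 23. The function name is only for illustration.

```python
import math

ATT_HEADER_BYTES = 3  # assumed: 1-byte ATT opcode + 2-byte attribute handle


def packets_for_payload(total_payload: int, mtu: int = 23) -> int:
    """Number of notification packets needed to carry total_payload bytes."""
    per_packet = mtu - ATT_HEADER_BYTES  # 20 bytes at MTU 23
    return math.ceil(total_payload / per_packet)


for label, payload in (("Test 1 P", 530), ("Test 4 P", 80)):
    print(label, packets_for_payload(payload))
```

With these numbers the 530-byte response needs 27 packets (the last one carrying only 10 bytes) and the 80-byte response needs 4.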

Results running with Connection Interval 26.5 ms:

| | Test 1 P | Test 4 P |
|---|---|---|
| Number of successful packets | 100 | 100 |
| Number of CRC error packets | 0 | 0 |
| Average roundtrip C↔P | 124 ms | 178-220 ms |
| Min roundtrip C↔P | 108 ms | 156-172 ms |
| Max roundtrip C↔P | 141 ms | 188-235 ms |

As soon as the roundtrip time exceeds 200 ms we start to see issues.

So I believe that, due to the low overall throughput, PDUs pile up in the system buffers, we start to see pending issues, and in the end nothing gets through.
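To put a number on "low overall throughput", here is a rough sketch that converts the measured roundtrip times into an effective application data rate. It assumes the roundtrip time covers the whole response payload for one peripheral and ignores the 10-byte request; the function name is made up for this post.

```python
def throughput_kbps(payload_bytes: int, roundtrip_ms: float) -> float:
    """Effective application throughput; bits per millisecond equals kbit/s."""
    return payload_bytes * 8 / roundtrip_ms


# Test 1 P: 530-byte response, 124 ms average roundtrip
print(f"1 P: {throughput_kbps(530, 124):.1f} kbit/s")

# Test 4 P: 80-byte response per peripheral, best average roundtrip of 178 ms
print(f"4 P: {throughput_kbps(80, 178):.1f} kbit/s per peripheral")
```

For the single-peripheral test this gives roughly 34 kbit/s, which is a small fraction of the 1 Mbit/s PHY rate.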

Problems we are facing:

- When using a BLE sniffer we notice that during the connection event (P → C) the connection interval is not filled to its limit. Why?

We would like to see the CC2640R2F fill the air with as many payload packets as possible within the boundaries of the connection interval settings.

- A higher connection interval setting lowers the number of PDUs that can be transferred within the connection interval. Why?

For example, a data payload of 530 bytes works with a 26.5 ms connection interval (100% of packets transferred). To transfer all packets with a 100 ms connection interval, the data payload has to be lowered to 40 bytes.

- How are the connection events for multiple peripherals scheduled? In sequence (C→P1, P1→C; C→P2, P2→C; C→P3, P3→C; C→P4, P4→C) or something similar? I'm guessing that it requires at least two connection intervals (one in each direction) times the number of peripherals.

- To be able to connect to all four peripherals we need to set the min/max connection interval as high as 32.5 ms; otherwise not all peripherals are discovered by the central.

How much of the airtime is taken up once discovery is started? Is it valid to assume that the connection interval follows the formula 12.5 ms + (5 ms × number of peripherals)?

- Is there a tool for calculating the interdependence of the radio parameters, or a diagram explaining the scheduling scheme that is executed?

Anything that makes it easier to understand and optimize parameter settings.
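
To make the guessed formula concrete, here it is written out as a small sketch. The 12.5 ms base and 5 ms per peripheral are only our assumption from observation, not a documented scheduling rule of the stack.

```python
def guessed_min_interval_ms(num_peripherals: int,
                            base_ms: float = 12.5,
                            per_peripheral_ms: float = 5.0) -> float:
    """Our guessed minimum connection interval (an assumption, not a TI rule)."""
    return base_ms + per_peripheral_ms * num_peripherals


for n in range(1, 5):
    print(n, "peripheral(s):", guessed_min_interval_ms(n), "ms")
```

For four peripherals this gives 32.5 ms, which matches the lowest interval at which all four were discovered.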

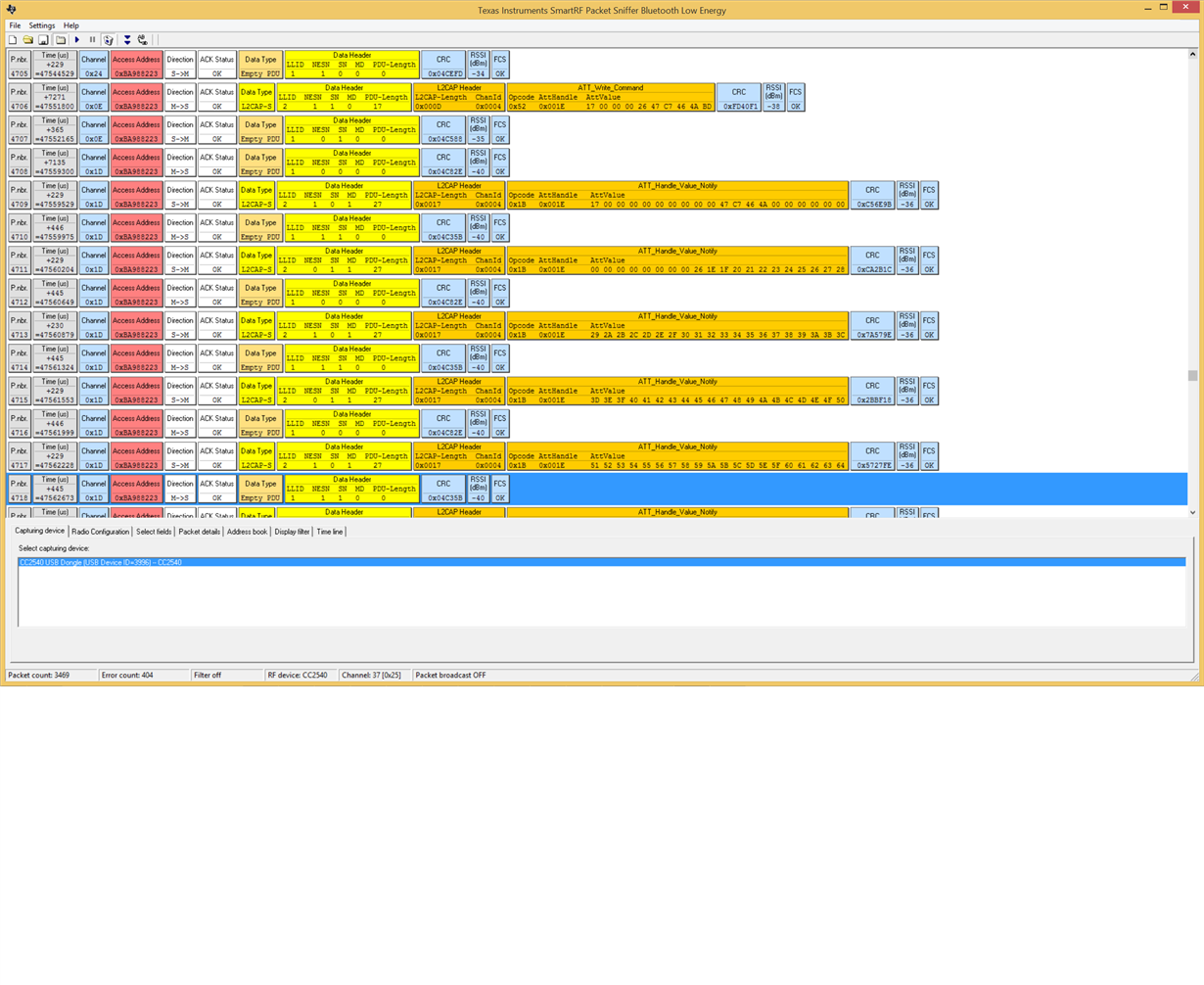

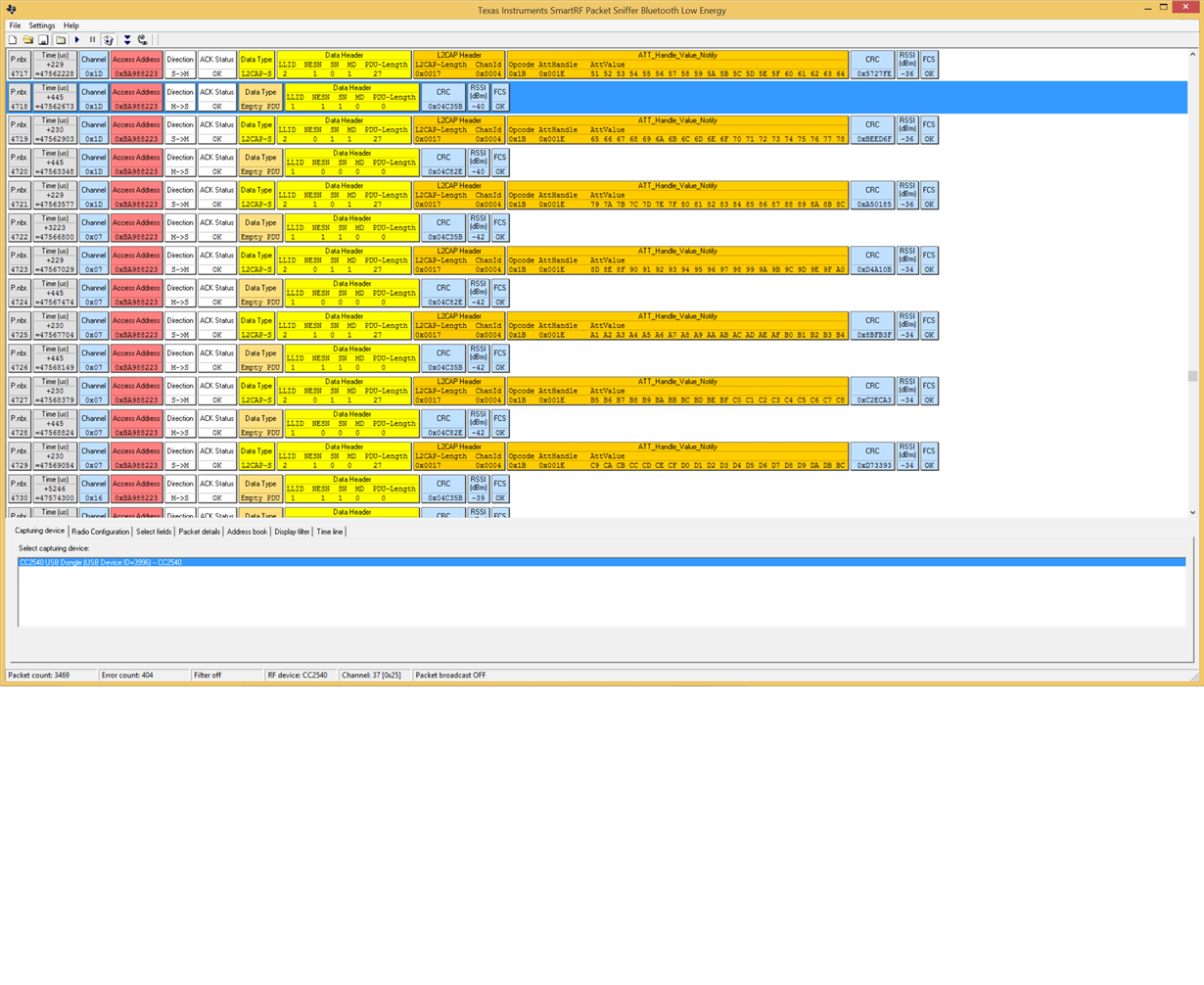

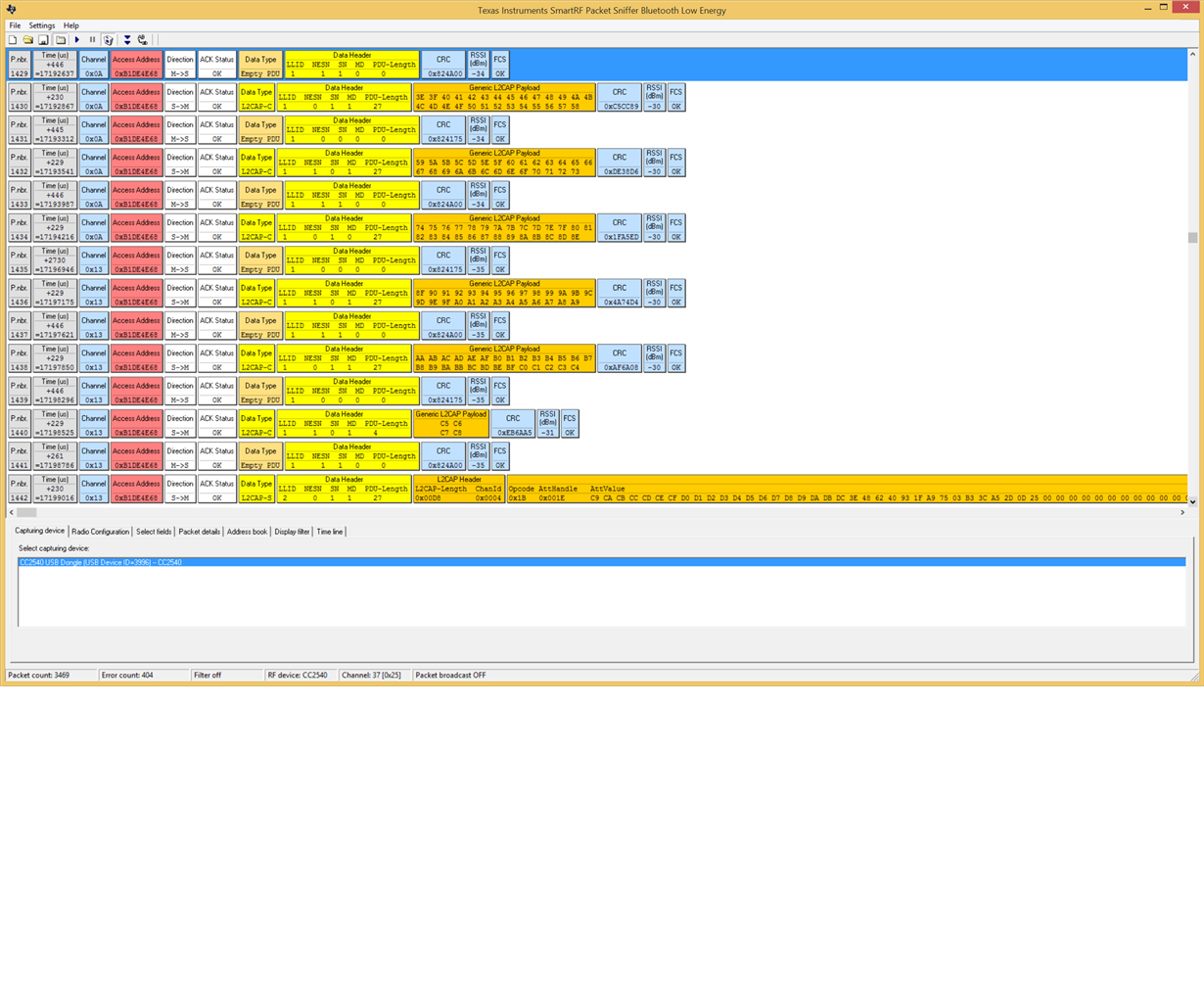

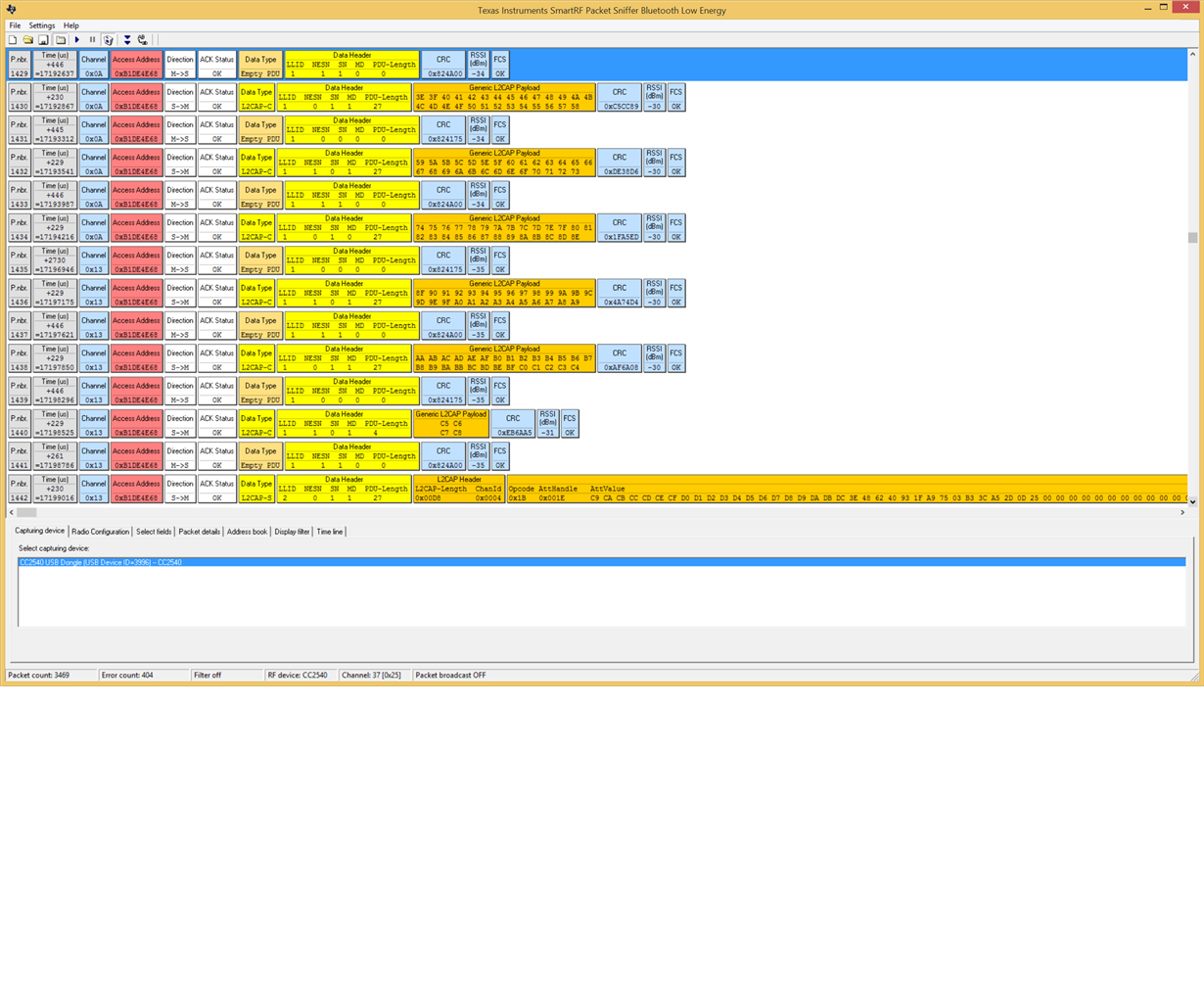

In a different test we changed the PDU size. For this test we captured the traffic with a CC2540 USB Dongle running the SmartRF sniffer.

In this setup we use a connection interval of 7.5 ms and a PDU size of 27, and the notification adds enough data to fill 11 PDUs.

We see the PDU going out in the SmartRF sniffer. In the following connection interval the peripheral responds with x PDUs and not the expected 11 PDUs (660 µs × 11 = 7260 µs), which should fit within a connection interval of 7.5 ms. After the next anchor point the remaining PDUs are sent. Note that all data is successfully received at the central.

What might be the reason that the CC2640R2F chooses to send only 7 PDUs and not 11, as the Bluetooth specification suggests should be possible?

Here is a SmartRF sniffer capture of the ping and response. Notice the long silence at P.nbr. 4722.

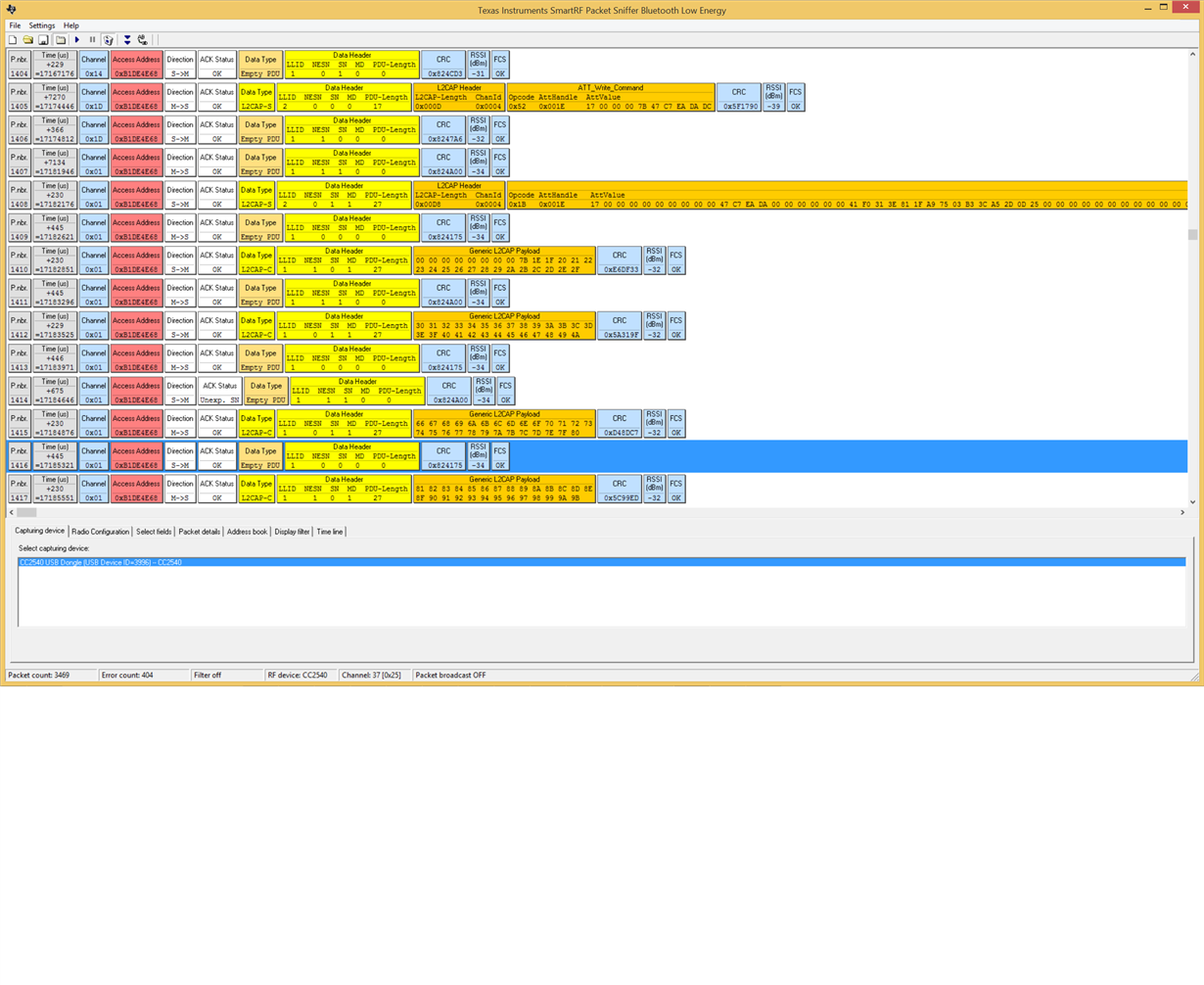

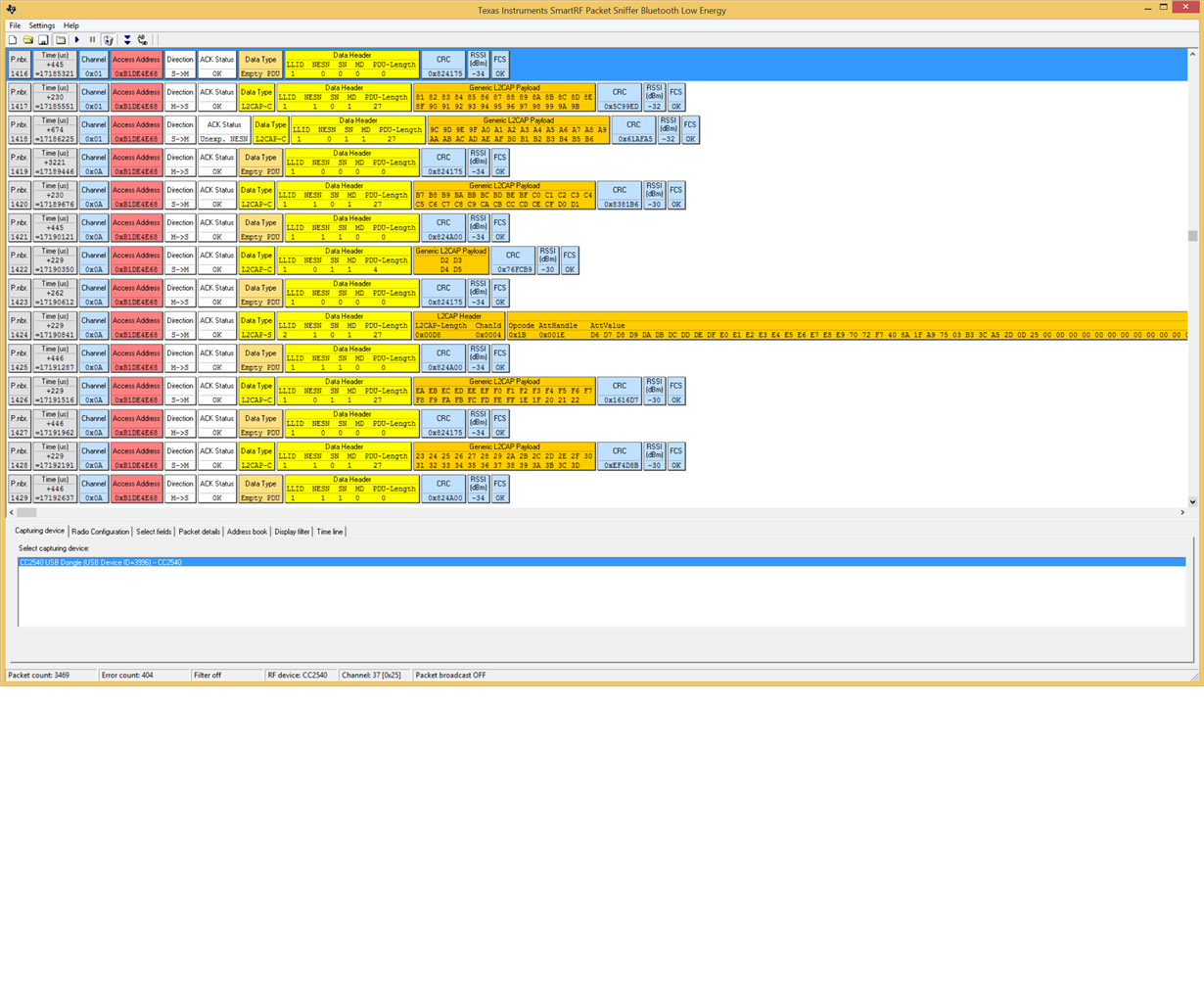

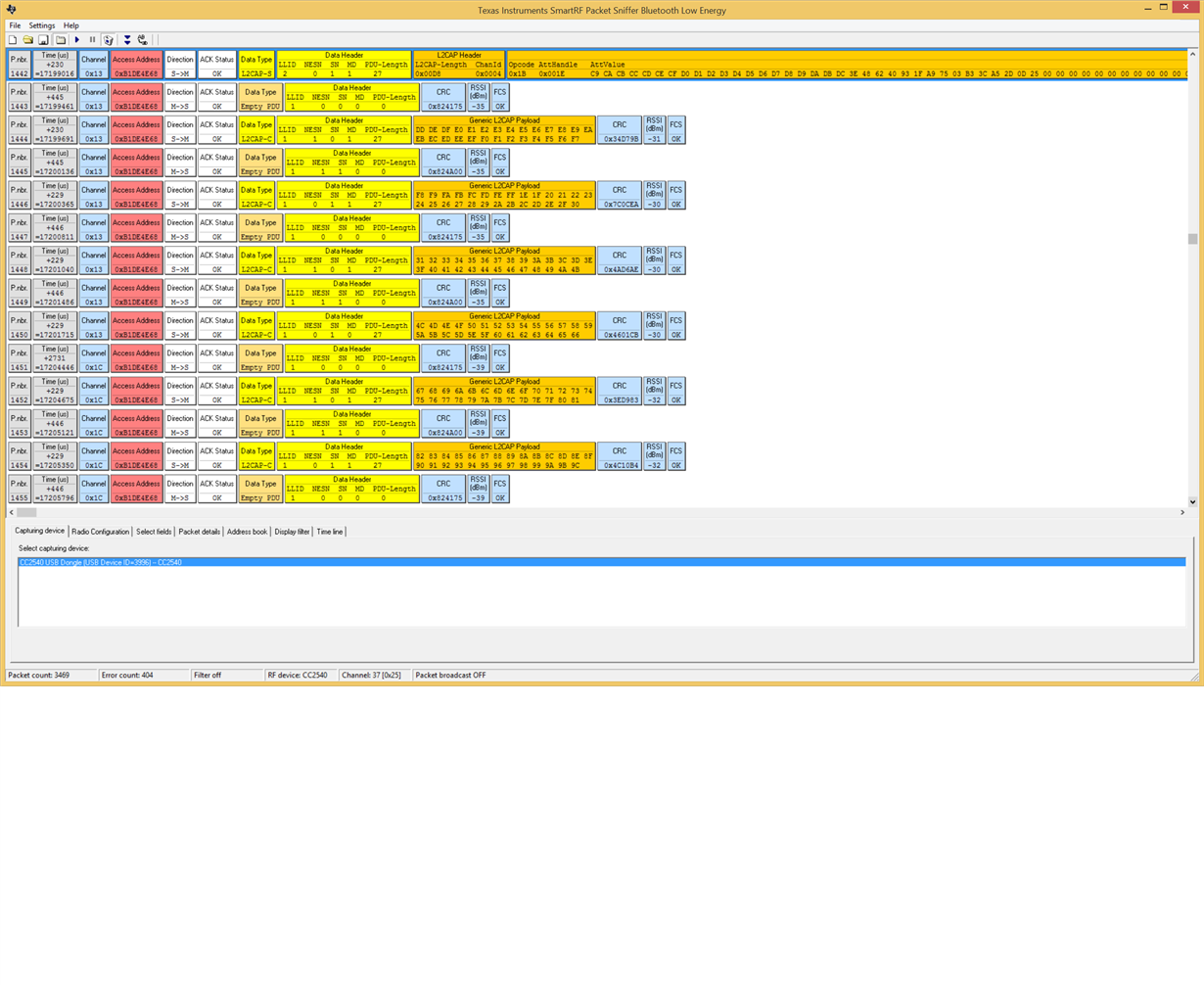

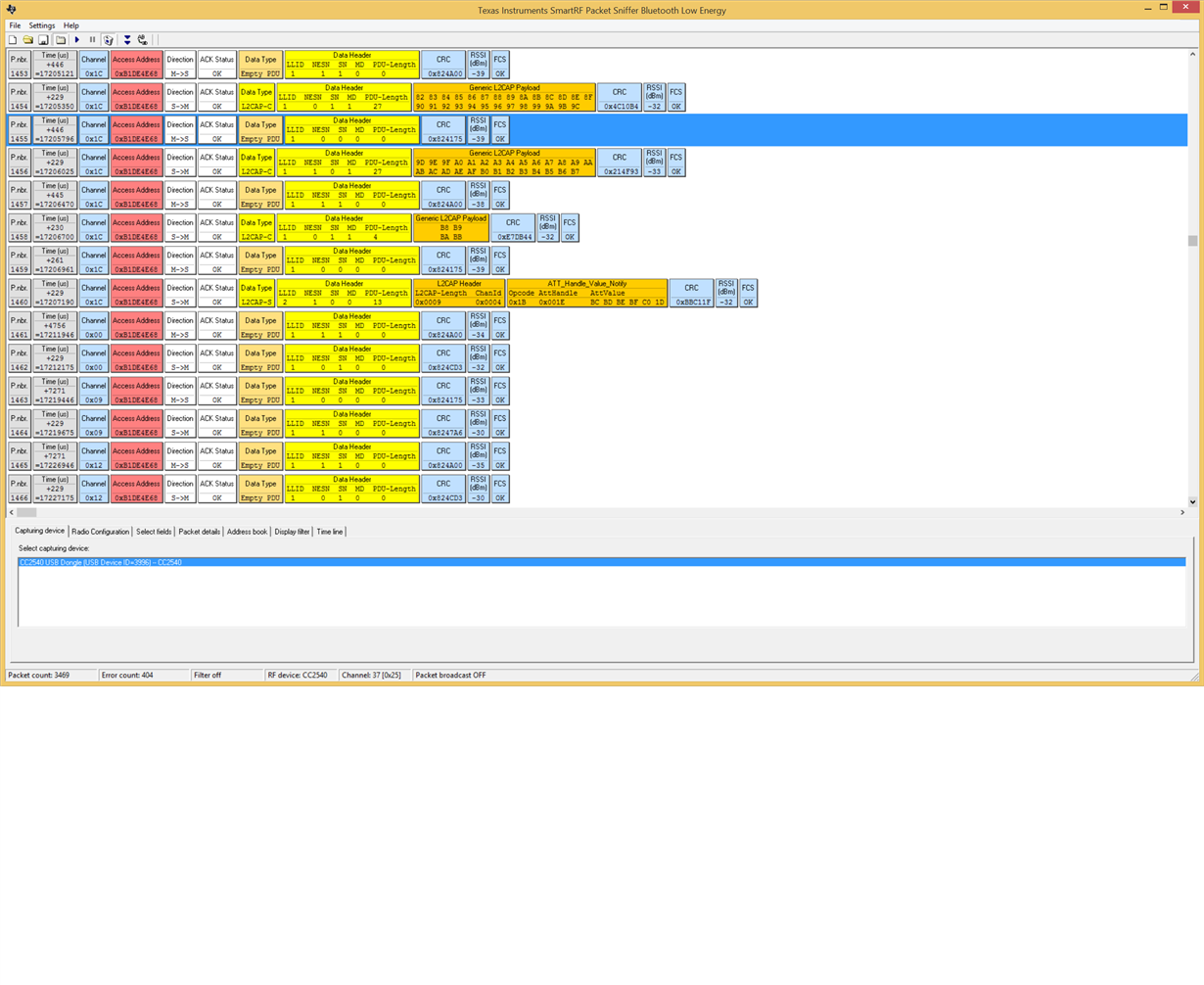

Then we changed the PDU size to 220:

In this setup we use a connection interval of 7.5 ms and a PDU size of 220, and the notification adds enough data to fill 3 PDUs.

We see the PDU going out in the SmartRF sniffer on the CC2540 USB Dongle. In the following connection interval the peripheral responds with 2 PDUs (size 220) and not the expected 3 PDUs, which should fit within a connection interval of 7.5 ms. After the next anchor point the remaining PDU is sent. Note that all data is successfully received at the central.

What might be the reason that the CC2640R2F seems to send only 1 PDU in each connection interval and not 3, as the Bluetooth specification suggests should be possible? Our time measurements on the PC also confirm that 3 connection intervals are needed for the notification response instead of the expected 1.
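
Both expectations (11 PDUs of 27 bytes, or 3 PDUs of 220 bytes, per 7.5 ms interval) can be sanity-checked with a simple airtime estimate. This is only a sketch under stated assumptions: 1 Mbit/s PHY (8 µs per byte), an unencrypted link (no MIC), every data packet answered by an empty packet from the other side, and no allowance for scheduler guard time or stack processing. All names are made up for this post.

```python
US_PER_BYTE = 8                  # 1 Mbit/s PHY: 8 µs per byte on air
T_IFS_US = 150                   # inter-frame space between packets
OVERHEAD_BYTES = 1 + 4 + 2 + 3   # preamble, access address, header, CRC


def packet_us(payload_bytes: int) -> int:
    """On-air time of one link-layer data packet (no MIC assumed)."""
    return (OVERHEAD_BYTES + payload_bytes) * US_PER_BYTE


def exchange_us(payload_bytes: int) -> int:
    """Data packet + T_IFS + empty reply packet + T_IFS."""
    return packet_us(payload_bytes) + T_IFS_US + packet_us(0) + T_IFS_US


def max_exchanges(interval_us: int, payload_bytes: int) -> int:
    """Upper bound on packet exchanges that fit in one connection interval."""
    return interval_us // exchange_us(payload_bytes)


for size in (27, 220):
    print(size, "byte PDU:", exchange_us(size), "µs per exchange,",
          max_exchanges(7500, size), "exchanges per 7.5 ms")
```

Under these assumptions one exchange takes 676 µs for a 27-byte PDU (close to the 660 µs used above) and 2220 µs for a 220-byte PDU, so 11 and 3 exchanges respectively would fit in 7.5 ms on paper.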

A lot of questions in one post, but I hope to get answers to some of them :)