Other Parts Discussed in Thread: ADS1263

I need to read some radiometric sensors working at UV wavelengths for irradiance measurement.

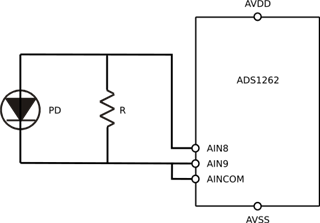

Each sensor is a photodiode shunted by a 1 kOhm resistor: the photocurrent generated when light strikes the photodiode is converted into a voltage that can be measured across the resistor. The sensors have a sensitivity of about 0.5 uV per uW/cm^2 and, in my application, they should produce a signal between 0 and 20 mV.
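As a quick sanity check on these numbers, here is a minimal Python sketch of the voltage-to-irradiance conversion. It assumes the sensor is linear over the whole range and uses the nominal 0.5 uV per uW/cm^2 figure; the function and constant names are only illustrative.

```python
# Nominal sensitivity quoted above: 0.5 uV of output per uW/cm^2 of irradiance.
# Assumption: the sensor response is linear over the whole 0-20 mV range.
SENSITIVITY_UV_PER_UW_CM2 = 0.5


def irradiance_uw_cm2(v_sensor_volts: float) -> float:
    """Convert the voltage across the 1 kOhm resistor into irradiance (uW/cm^2)."""
    v_sensor_uv = v_sensor_volts * 1e6
    return v_sensor_uv / SENSITIVITY_UV_PER_UW_CM2


if __name__ == "__main__":
    for v in (0.0, 1e-3, 20e-3):
        print(f"{v * 1e3:6.2f} mV -> {irradiance_uw_cm2(v):10.1f} uW/cm^2")
```

With these figures the 20 mV upper end corresponds to roughly 40 mW/cm^2, and a 20 mV offset is as large as the whole expected signal.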

I decided to use an ADS1262 for several reasons, among them the large number of analog inputs and the high sensitivity. I started testing the converter with just one sensor, using an Arduino to set the registers and read back the conversions.

In this basic test I'm working without amplification at a slow sample rate (10 sps), using an analog supply of 0 to 5 V DC and the internal reference of 2.5 V.
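For reference, this is a minimal sketch of how a raw reading maps to volts under these settings. It assumes the 32-bit two's-complement output format of ADC1 with a full-scale range of +/-VREF/gain, which should be checked against the datasheet; the function name and defaults are my own.

```python
V_REF = 2.5   # internal reference, volts
GAIN = 1      # no amplification


def raw_to_volts(raw_u32: int, v_ref: float = V_REF, gain: int = GAIN) -> float:
    """Convert an unsigned 32-bit ADC word (two's complement) to volts.

    Assumption: full scale is +/- v_ref / gain, so 1 LSB = v_ref / (gain * 2**31).
    """
    raw_u32 &= 0xFFFFFFFF
    # Reinterpret the unsigned word as a signed 32-bit integer.
    signed = raw_u32 - (1 << 32) if raw_u32 & 0x80000000 else raw_u32
    return signed * v_ref / (gain * 2**31)


if __name__ == "__main__":
    lsb = V_REF / (GAIN * 2**31)
    print(f"1 LSB = {lsb * 1e9:.3f} nV")
    print(f"20 mV is {20e-3 / V_REF * 100:.1f} % of positive full scale")
```

Under these assumptions the 0 to 20 mV signal uses less than 1 % of the positive input range.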

Since I have to measure a unipolar floating signal, I used the IC's internal ability to level-shift the signal to the middle of the ADC input range by enabling VBIAS on the AINCOM pin, as described in the ADS126x datasheet. The schematic of my setup is shown in the attached image.

Now the problem: the reading has a positive offset of about 20 mV. The offset is still present when I cover the sensor's optical window, and it does not come from the ADC itself, because if I short AIN8 to AIN9 I get the correct reading of 0 V (apart from a few uV of noise); I have also performed the offset and system calibrations. Reading the sensor with a Fluke multimeter on the mV range, I get more or less the same readings, but without the 20 mV shift.

I can't understand where this shift comes from. From what I read in the datasheet, the input impedance of the analog inputs is high enough (several MOhm) that the bias imposed on the sensor resistor by the internal VBIAS should be negligible.
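To put numbers on that reasoning, here is a rough sketch of what it would take to produce the observed shift across the 1 kOhm resistor. It models the input as a simple resistive divider from a bias source, which is only an approximation, and it assumes a mid-supply bias of 2.5 V; both the model and the names are illustrative.

```python
SHUNT_OHMS = 1_000.0   # sensor resistor
OFFSET_V = 20e-3       # observed shift
BIAS_V = 2.5           # assumption: VBIAS sits at mid-supply for a 0-5 V analog supply


def current_for_offset(offset_v: float, shunt_ohms: float) -> float:
    """Current that must flow through the shunt to drop offset_v across it."""
    return offset_v / shunt_ohms


def impedance_for_offset(bias_v: float, offset_v: float, shunt_ohms: float) -> float:
    """Series impedance z for which a divider gives offset_v across the shunt.

    offset_v = bias_v * shunt / (shunt + z)  =>  z = shunt * (bias_v / offset_v - 1)
    """
    return shunt_ohms * (bias_v / offset_v - 1.0)


if __name__ == "__main__":
    i = current_for_offset(OFFSET_V, SHUNT_OHMS)
    z = impedance_for_offset(BIAS_V, OFFSET_V, SHUNT_OHMS)
    print(f"required current: {i * 1e6:.1f} uA")
    print(f"equivalent series impedance: {z / 1e3:.0f} kOhm")
```

In this simplified model a 20 mV shift needs about 20 uA through the resistor, or an effective impedance of roughly 124 kOhm, far lower than the several MOhm I expected from the datasheet.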

Can someone help me?