Hello Team,

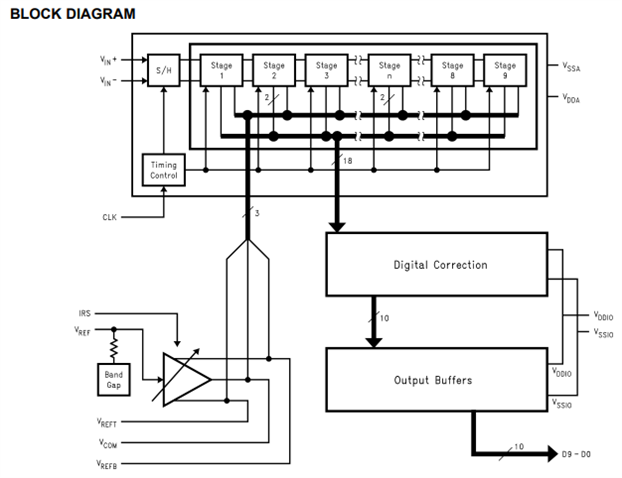

My customer would like to know whether the conversion latency applies to every sample. Is there a latency of 6 clock cycles between each input sample and its corresponding digitized output? Or is there only an initial latency until the first valid sample, after which there is no delay between an input sample and its digitized output? In other words, can you explain the analog-to-digital conversion process from input to output? For example, can you describe the path an input sample takes, clock cycle by clock cycle, until it reaches its digitized output? Thanks.
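To make the two readings in the question concrete, here is a minimal Python sketch that models the converter as a 6-stage pipeline (a shift register). The pipelined architecture and the name `simulate_pipelined_adc` are assumptions for illustration only, not taken from the datasheet. In this model, every output lags its own input sample by 6 clocks, yet once the pipeline has filled, a new output emerges on every clock.

```python
from collections import deque

# Latency figure quoted in the question; treating the ADC as a simple
# pipeline (shift register) is an ASSUMPTION for illustration only.
LATENCY_CYCLES = 6


def simulate_pipelined_adc(samples, latency=LATENCY_CYCLES):
    """Hypothetical model: push one input sample in per clock cycle.

    Returns a list of (cycle, output) tuples, where output is the input
    sample leaving the pipeline on that cycle, or None while it fills.
    """
    # Pipeline starts empty: `latency` stages holding no valid data.
    pipeline = deque([None] * latency, maxlen=latency)
    trace = []
    for cycle, sample in enumerate(samples):
        out = pipeline[0]        # oldest stage is output on this clock
        pipeline.append(sample)  # new sample enters; maxlen drops the oldest
        trace.append((cycle, out))
    return trace


if __name__ == "__main__":
    # Label input samples 0..9 by the cycle on which they were taken.
    for cycle, out in simulate_pipelined_adc(list(range(10))):
        print(f"cycle {cycle}: output = {out}")
```

In this sketch, cycles 0 to 5 produce no valid output, and from cycle 6 onward the output on cycle n is the sample taken on cycle n - 6.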

Regards,

Renan