Hello,

In our project, we are using the ADC mentioned in the subject of the question. The converter seems to be measuring the input voltage incorrectly. During our tests, I performed two voltage measurements.

The first measurement was taken by applying a voltage of 160 mV to the CH0P input, with CH0N connected to AGND. The obtained voltage was 159.565 mV. For a test voltage close to 1.2 V, the error is approximately 4 mV, which is not acceptable to us. I would like to add that the supply voltage to the ADC is adequately filtered, and the measured voltage has been verified using an oscilloscope and a METRAHIT meter.

For the second measurement, I used the internal calibration feature, which disconnects the measurement circuit and sets the voltage Vref = 160 mV for a gain of G = 1. The measured voltage was 152.15 mV.
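To put the numbers above side by side, here is a minimal sketch that computes the absolute and relative error for each case. The function name `error_stats` is only illustrative, and for the 1.2 V case only the magnitude of the error (about 4 mV) is known, so its sign is not assumed.

```python
def error_stats(expected_mv, measured_mv):
    """Return (absolute error in mV, relative error in percent)."""
    abs_err_mv = measured_mv - expected_mv
    rel_err_pct = 100.0 * abs_err_mv / expected_mv
    return abs_err_mv, rel_err_pct


# External 160 mV applied to CH0P, CH0N tied to AGND
external = error_stats(160.0, 159.565)

# Internal 160 mV test voltage at G = 1
internal = error_stats(160.0, 152.15)

# Test voltage close to 1.2 V: only the error magnitude (~4 mV) is known
near_full_scale_pct = 100.0 * 4.0 / 1200.0

print(f"external 160 mV: {external[0]:+.3f} mV ({external[1]:+.2f} %)")
print(f"internal 160 mV: {internal[0]:+.3f} mV ({internal[1]:+.2f} %)")
print(f"near 1.2 V:      about {near_full_scale_pct:.2f} % in magnitude")
```

With these values, both external measurements are off by roughly 0.3 %, while the internal test voltage reads about 4.9 % low.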

What is the reason for such a difference in the measured voltages? How can I properly calibrate the voltage readings so that the error approaches the one specified in the documentation?

Below, I am attaching the ADC connection diagram and the bit response from the ADC captured on the logic analyzer (change the file extension to .sal and open the workspace in the Saleae software).

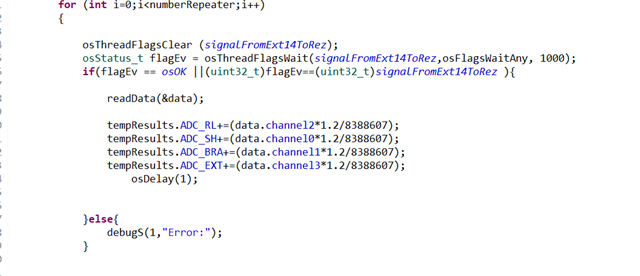

I read the voltage value from the register, and the value falls within the range of 0-8388607.

Here's the formula used to obtain the voltage from the ADC step:
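As a rough sketch of this kind of conversion (not necessarily the exact formula running in the firmware), the following assumes a 24-bit two's-complement result, a 1.2 V reference, and a gain of 1. These values, along with the names `VREF_V`, `GAIN` and `code_to_volts`, are assumptions for illustration and should be checked against the datasheet.

```python
VREF_V = 1.2                 # assumed reference voltage in volts
GAIN = 1                     # assumed PGA gain
FULL_SCALE_CODES = 1 << 23   # 8388608 codes for the positive half of a 24-bit range


def code_to_volts(raw24, vref=VREF_V, gain=GAIN):
    """Convert a raw 24-bit two's-complement ADC code to volts."""
    raw24 &= 0xFFFFFF            # keep only the 24 data bits
    if raw24 & 0x800000:         # sign bit set: negative value
        raw24 -= 1 << 24
    return raw24 * (vref / gain) / FULL_SCALE_CODES


# Under these assumptions, a code of 1118481 corresponds to about 0.160 V
print(f"{code_to_volts(1118481) * 1000:.3f} mV")
```

Under these assumptions, positive inputs map to codes 0 to 8388607, which matches the range I see in the register.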

Session.csv, Session2.csv