Other Parts Discussed in Thread: , OPA314

Hi all,

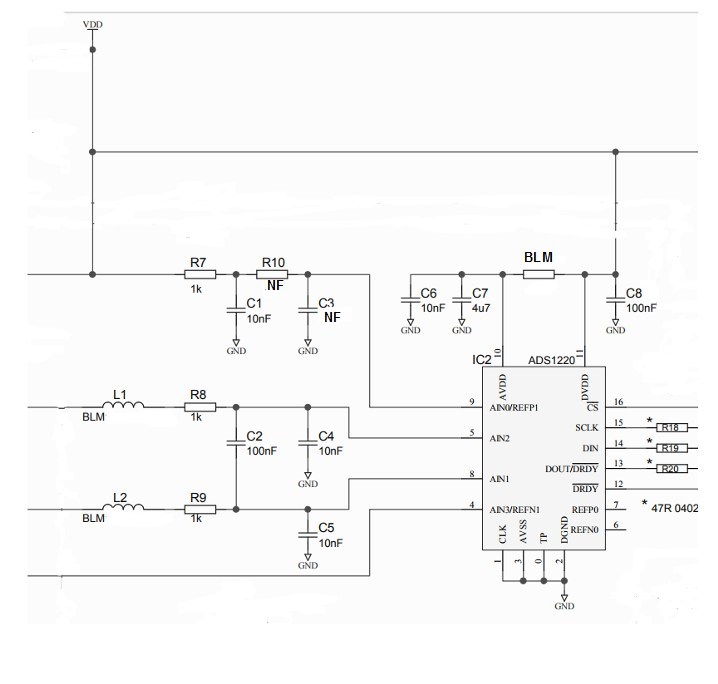

I am currently developing a battery-powered load cell measuring device. To save PCB real estate and make better use of the battery, I am planning to take the analogue supply directly from the battery terminal voltage. Everything is communicating and measuring OK, but the measurement is not stable as the battery terminal voltage changes.

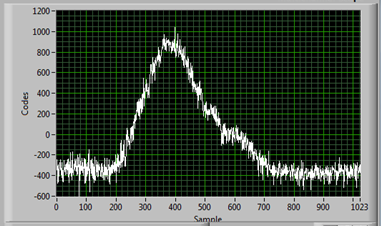

The measurement is ratiometric, so I expected it to self-compensate as the analogue supply voltage changed. However, I am seeing variations of roughly 0.7 µV/V when the supply voltage is changed between 3.5 V and 2.4 V. I have added a plot above to show what I have observed.
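For context, here is a rough sketch of why an ideal ratiometric reading should not depend on the supply, and how the observed drift can be expressed. It assumes the bridge excitation and the ADC reference are both taken from the same analogue supply (AVDD). The PGA gain of 128, the 24-bit resolution and the 2 mV/V full-scale load cell sensitivity are hypothetical values for illustration only. The 0.7 µV/V drift and the 3.5 V / 2.4 V supply range are the figures quoted above.

```python
def ideal_code(load_fraction, sensitivity_mv_per_v, avdd, gain=128, bits=24):
    """Ideal ADC code for a ratiometric bridge (hypothetical gain/bits)."""
    # Bridge output scales with its excitation, which is AVDD here (assumption)
    v_diff = sensitivity_mv_per_v * 1e-3 * load_fraction * avdd
    # ADC reference is also AVDD (assumption), so AVDD cancels in the ratio
    v_ref = avdd
    full_scale = 2 ** (bits - 1)
    return round(gain * v_diff / v_ref * full_scale)


def drift_metrics(drift_uv_per_v, v_high, v_low, full_scale_mv_per_v):
    """Express an observed ratiometric drift in two common ways."""
    # Drift per volt of supply change, in uV/V per V
    per_volt = drift_uv_per_v / (v_high - v_low)
    # Drift relative to the load cell's full-scale output, in ppm
    ppm_of_fs = drift_uv_per_v / (full_scale_mv_per_v * 1e3) * 1e6
    return per_volt, ppm_of_fs


if __name__ == "__main__":
    # Ideal case: the same code at both supply voltages
    print(ideal_code(0.5, 2.0, 3.5), ideal_code(0.5, 2.0, 2.4))
    # Observed case: 0.7 uV/V over 3.5 V -> 2.4 V, hypothetical 2 mV/V cell
    print(drift_metrics(0.7, 3.5, 2.4, 2.0))
```

With these assumptions, the quoted drift works out to about 0.64 µV/V per volt of supply change, or about 350 ppm of a 2 mV/V load cell's full-scale output. Any residual error of this kind would come from non-ratiometric effects (for example, offset or gain terms that shift with supply) that an ideal model cancels out.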

Is there any data that characterises how the measurement varies with the analogue supply voltage? I could not find anything specifically relating to this in the datasheet.

Is this characteristic typical of these devices?

Regards,

Clive.