Other Parts Discussed in Thread: DDC264

Dear colleague,

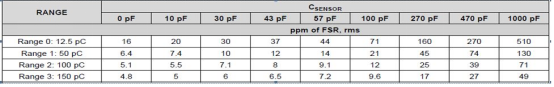

The customer wants to use the DDC264EVM as a reference to compare against their own DDC264 data acquisition results.

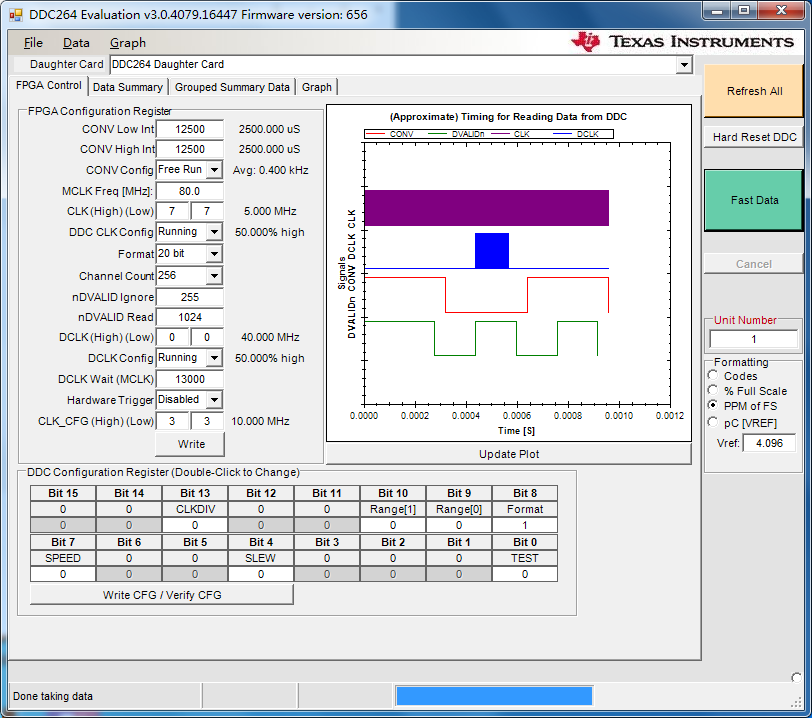

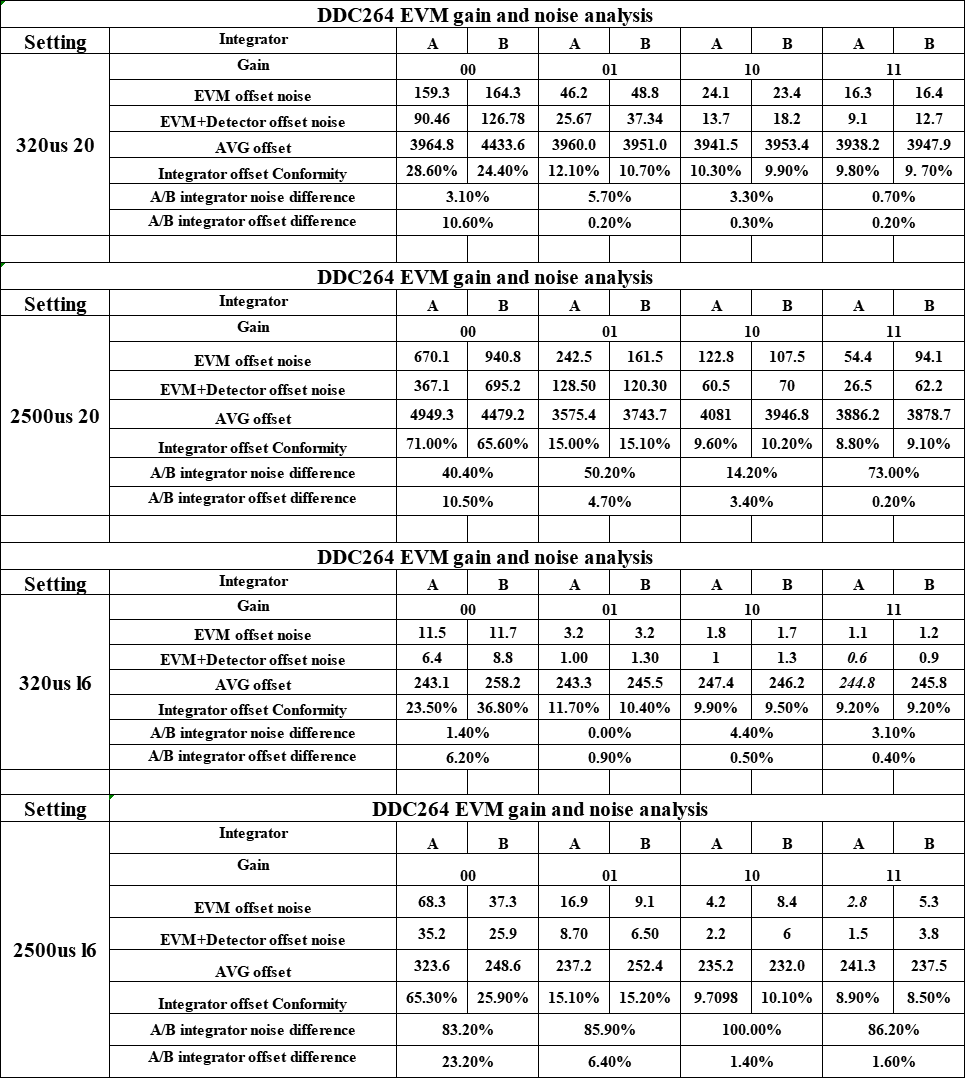

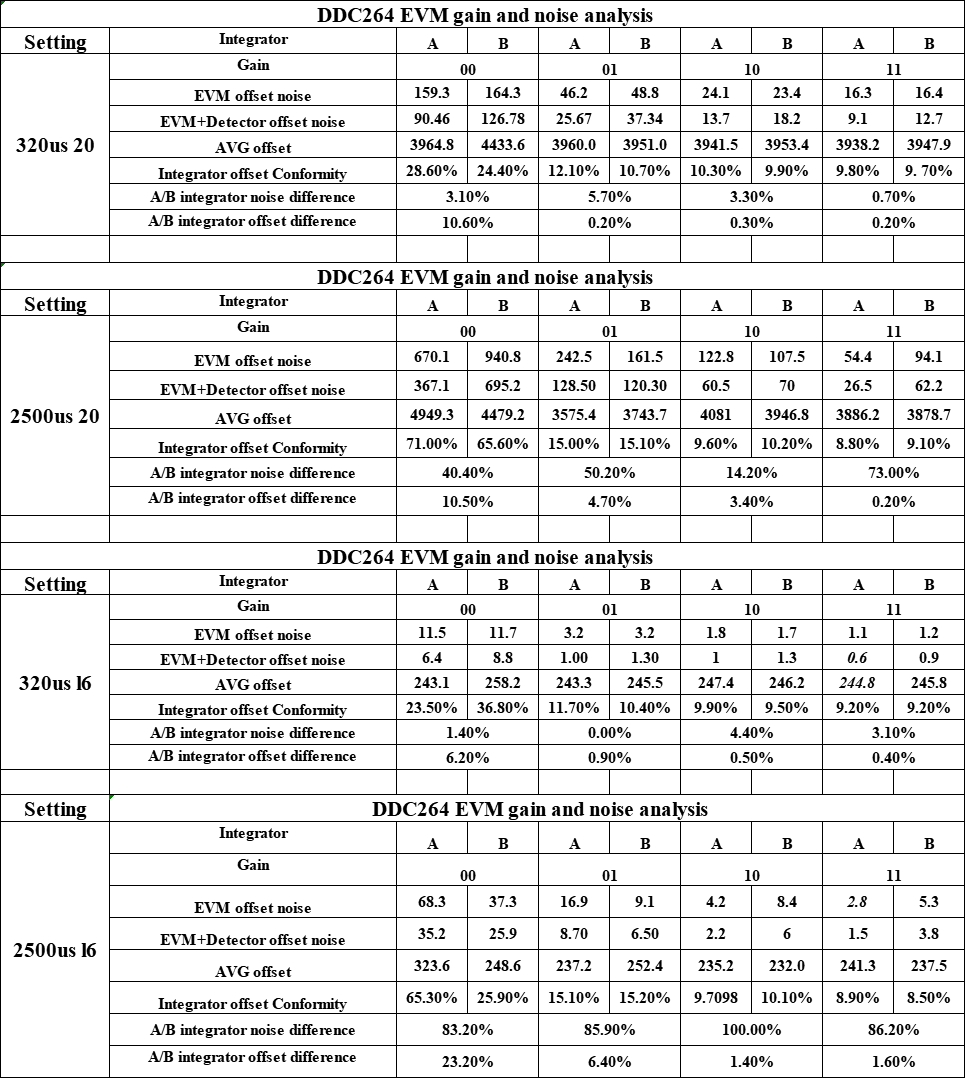

However, when they run the DDC264EVM in 16-bit mode, they encounter the issues below.

They want to know:

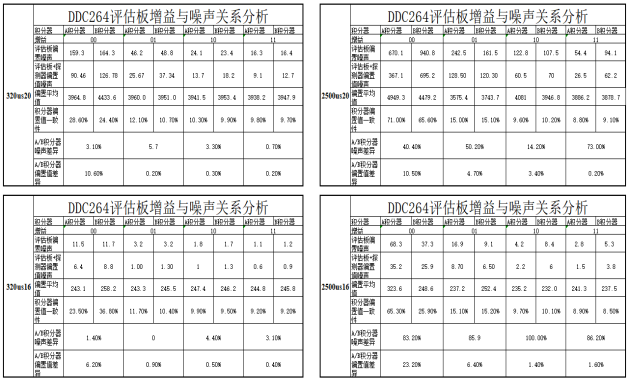

1. What is the reason for the wrong output format in the 16-bit configuration?

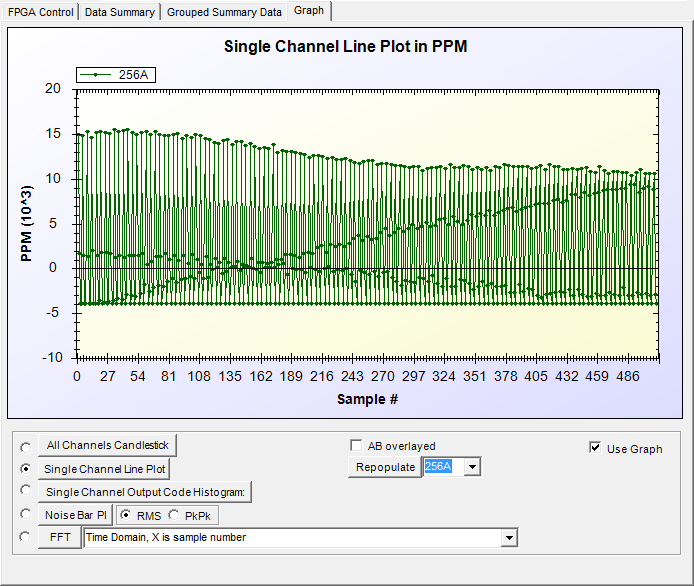

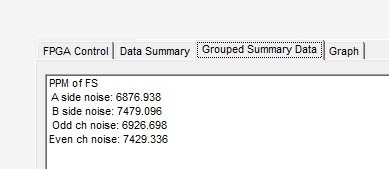

2. In the output summary, the value is averaged by the GUI. How many samples are averaged? How can they get the raw data? They want to compare their DDC264 data acquisition results with the DDC264EVM, because they currently think their board noise is large.

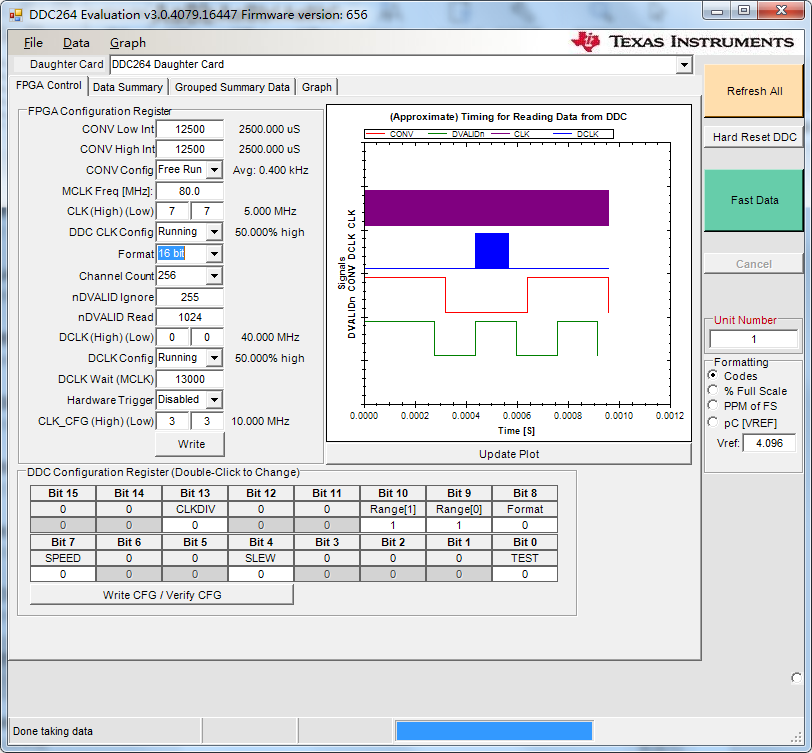

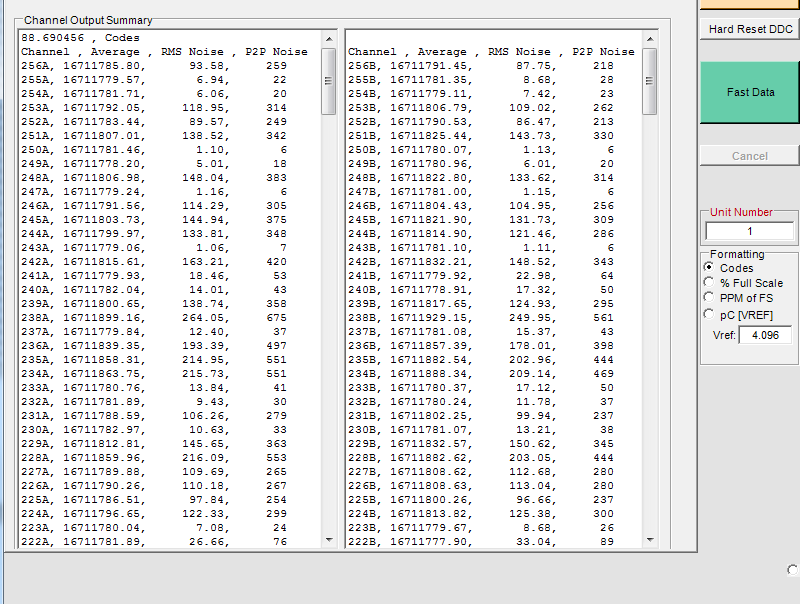

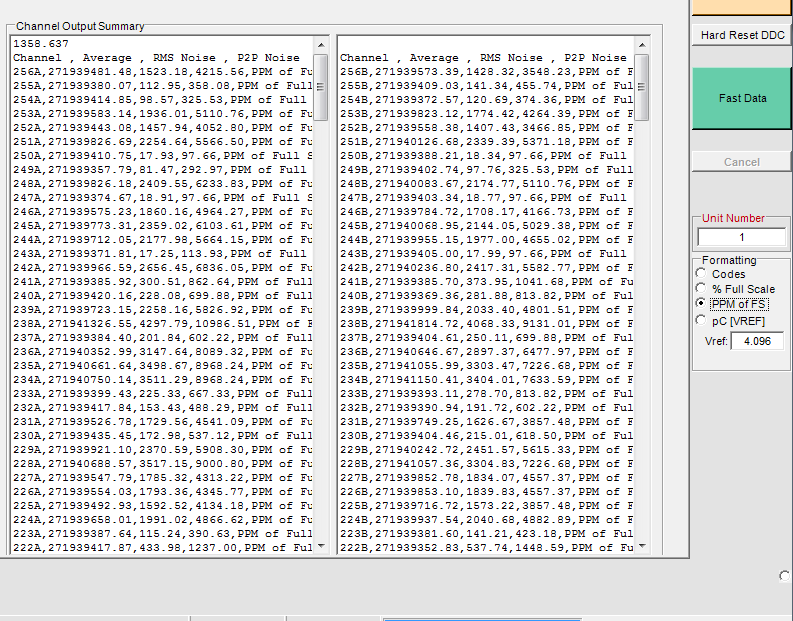

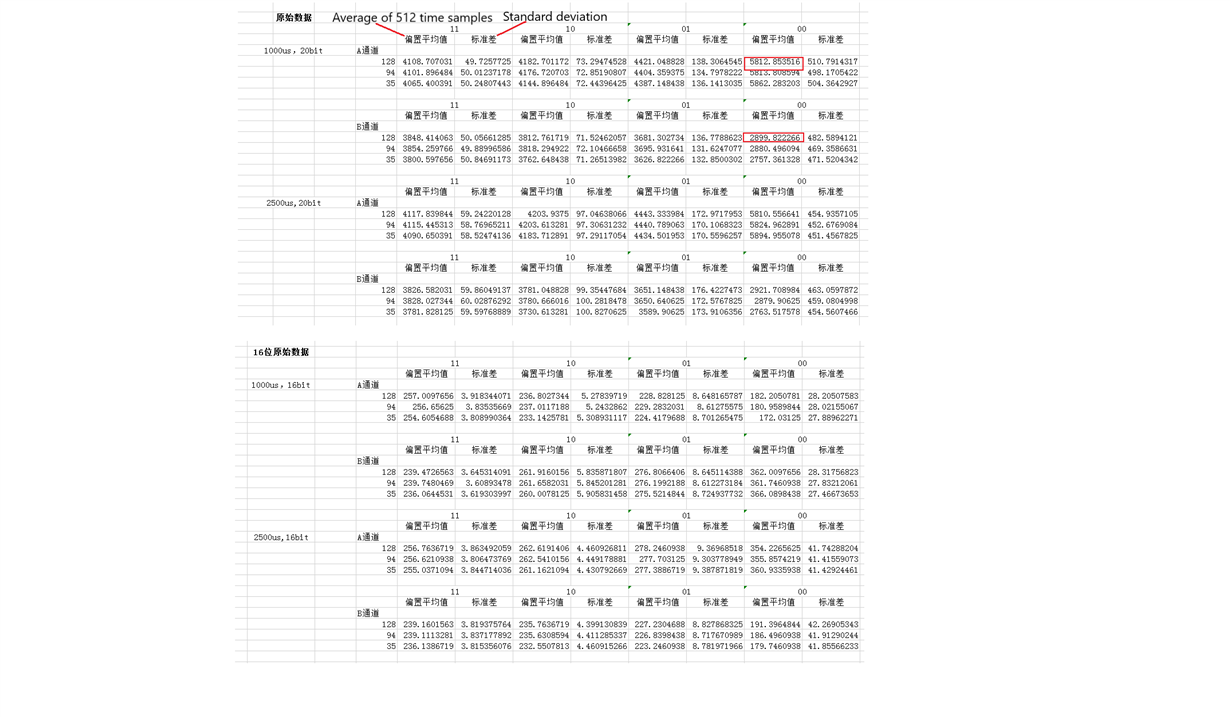

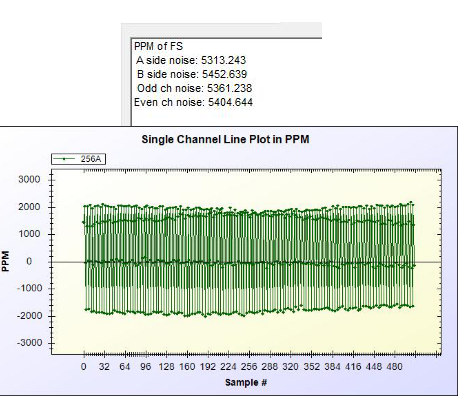
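
For the noise comparison in question 2, one way to put both boards on the same footing is to compute the statistics from raw, un-averaged samples rather than from the GUI summary. The sketch below is only an illustration: it assumes the raw codes for one channel have already been exported to a list of integers (how the EVM GUI exports them is not covered here), and the function name `channel_noise` and the sample values are hypothetical, not measured data.

```python
import statistics

def channel_noise(codes):
    """Return (mean, rms_noise) for one channel's raw output codes.

    rms_noise is the sample standard deviation, expressed in codes (LSBs).
    """
    if len(codes) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.fmean(codes)
    rms_noise = statistics.stdev(codes)
    return mean, rms_noise

# Hypothetical raw samples for illustration only
evm_codes = [4100, 4102, 4098, 4101, 4099]
board_codes = [4090, 4110, 4085, 4115, 4100]

evm_mean, evm_noise = channel_noise(evm_codes)
board_mean, board_noise = channel_noise(board_codes)
print(f"EVM:   mean={evm_mean:.1f} noise={evm_noise:.2f} codes")
print(f"Board: mean={board_mean:.1f} noise={board_noise:.2f} codes")
```

Running the same calculation on the same number of samples from each board, at the same range and integration time, keeps the comparison independent of whatever averaging the GUI applies.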

When the format is changed to 16-bit, the output in the GUI is wrong no matter how the input signal amplitude and frequency are changed, and the maximum value is not 65535. The result is shown below:

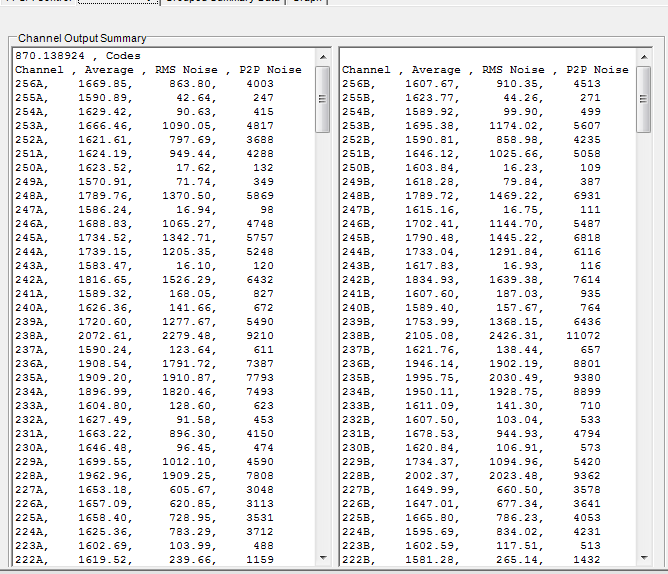

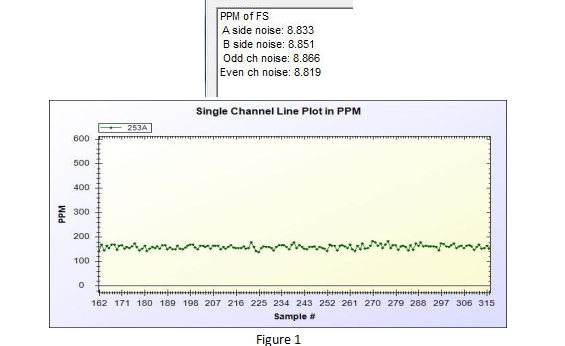

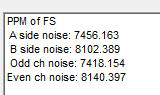

When the format is changed to 20-bit, the output changes with the input signal and the result is normal. The result is shown below:

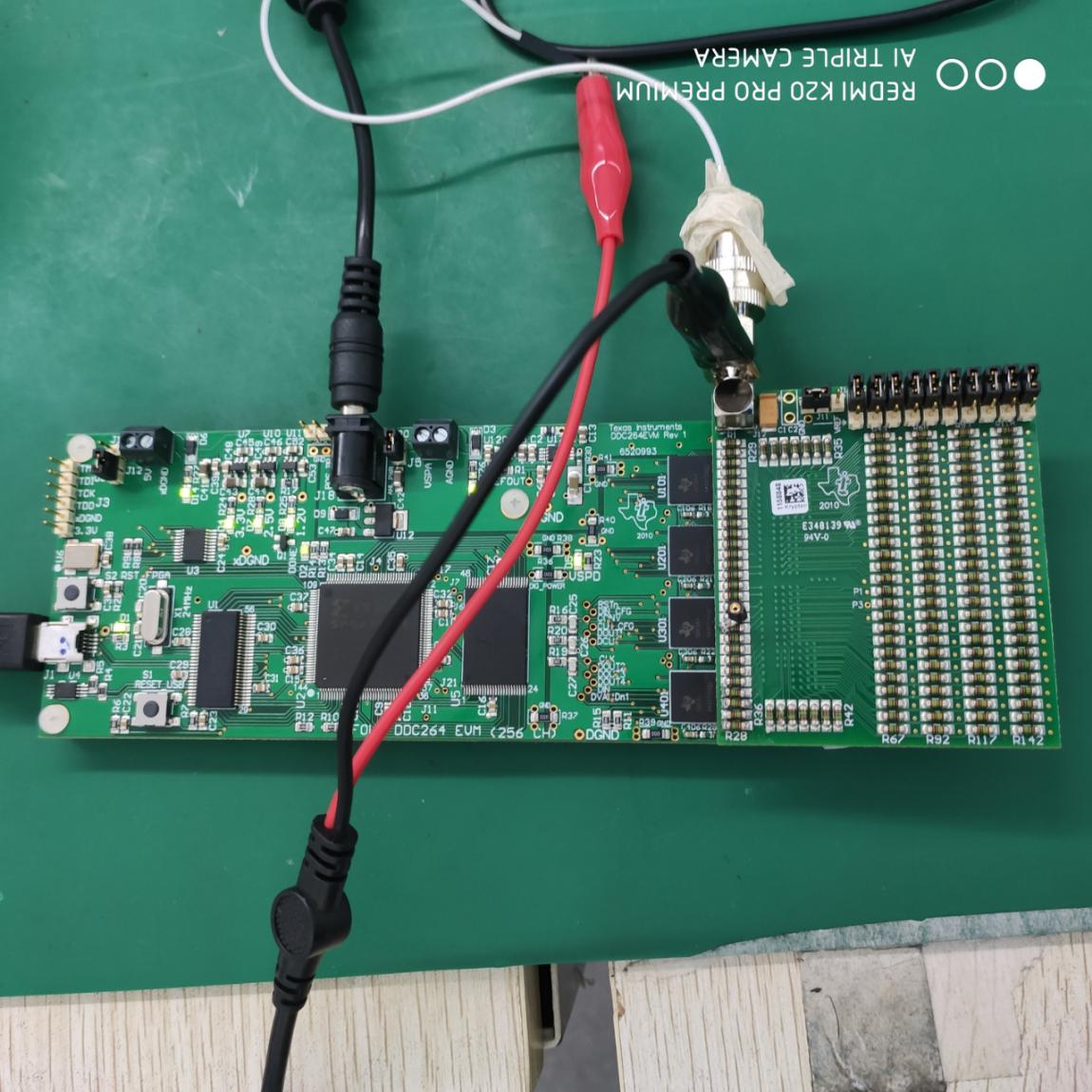

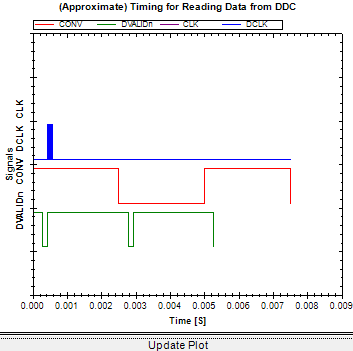

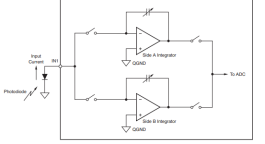
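
As a quick sanity check on the two formats, the largest code an unsigned n-bit word can hold is 2^n - 1, which is where the 65535 figure for 16-bit comes from. The sketch below assumes straight unsigned binary output and uses hypothetical helpers `max_code` and `fits_format`; it does not model how the EVM GUI actually unpacks the data.

```python
def max_code(bits):
    """Largest value representable in an unsigned word of `bits` bits."""
    return (1 << bits) - 1

def fits_format(codes, bits):
    """True if every code lies within the unsigned range for `bits` bits."""
    return all(0 <= c <= max_code(bits) for c in codes)

print(max_code(16))  # 65535
print(max_code(20))  # 1048575
```

If codes captured in 16-bit mode fail `fits_format(codes, 16)`, the data are probably being read with the wrong word length.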

Connection configuration: