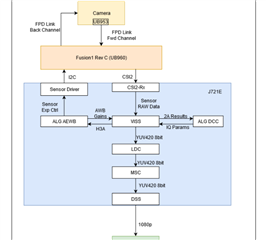

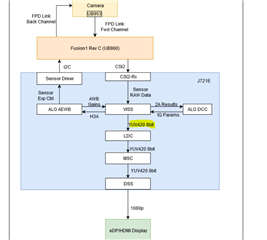

In the single camera application data flow, the raw sensor data is converted into 8-bit YUV data. This conversion is highlighted in the attached diagram.

1. Our application needs 16-bit YUV data. What changes do we have to make in the source code of the single camera application?

2. The single camera application flow states that the YUV output is passed to the DSS, but there is no DSS-related code in the app_single_cam_main.c file. How is the image displayed when the single camera application runs?