Other Parts Discussed in Thread: OMAPL138, MATHLIB

Hi,

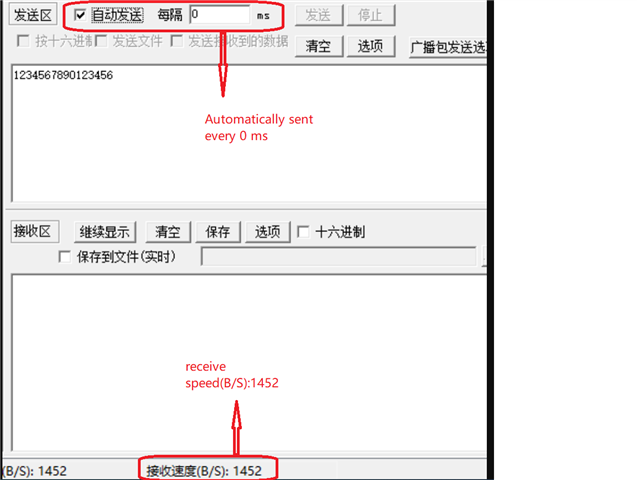

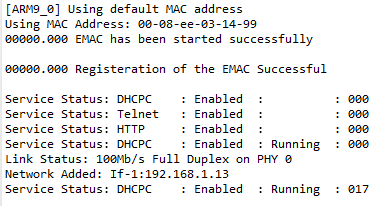

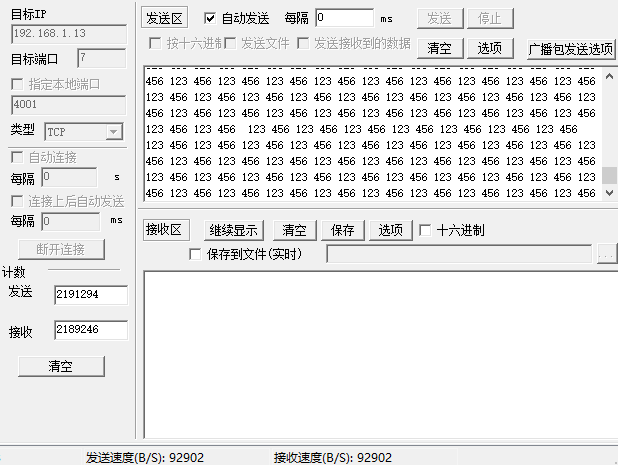

A customer configured 100 Mbit/s Ethernet and tested dtask_tcp_echo in ti\ndk\examples\ndk_omapl138_arm9_examples\ndk_evmOMAPL138_arm9_client, but the maximum throughput is only 12 KB/s, and it gradually declines.

How can the throughput be improved? Is it possible to reach 100 Mbit/s?