Dear TI team,

We're having trouble understanding why we can't write DRU registers (specifically DRU_CHRT_SWTRIG_j) via PCIe in endpoint mode.

Our setup consists of an x86 CPU in RC mode and an AM6548 in EP mode, with our code running on the R5F.

We successfully configured the DRU to perform memory-to-memory copies, and we would like to trigger the actual transfer from the RC (i.e. the x86).

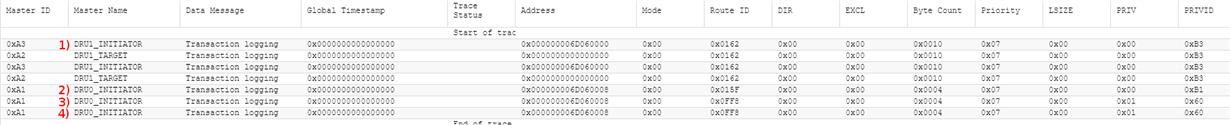

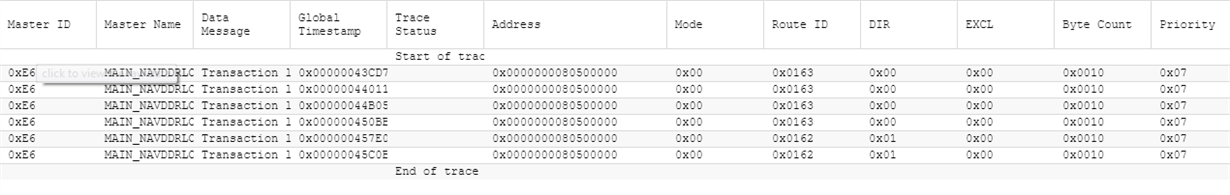

For this, we mapped the DRU configuration registers at 0x6D060000 via a PCIe memory BAR. We confirmed that we're able to "see" the DRU config registers, for example by reading the DRU_CHRT_CTL_j registers. However, we're unable to write to any of these registers.
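A write-then-read-back check of this kind could be sketched as follows. This is only a minimal sketch under stated assumptions: it assumes the RC runs Linux and that the BAR is exposed as a sysfs `resourceN` file; `map_bar` and `write_and_verify` are hypothetical helper names, and the path, length, and offset in the usage comment are placeholders, not values from our setup. Python's `struct` does not guarantee a single 32-bit bus access, so a C helper using `volatile uint32_t` would be the safer choice for real MMIO. The read-back is only meaningful on a register documented as read/write; a trigger register may not read back what was written.

```python
import mmap
import os
import struct


def map_bar(resource_path, length):
    """Map `length` bytes of a PCIe BAR exposed as a sysfs resource file.

    Assumption: a Linux RC where the BAR is available as a file such as
    /sys/bus/pci/devices/<bdf>/resourceN (placeholder path).
    """
    fd = os.open(resource_path, os.O_RDWR | os.O_SYNC)
    try:
        return mmap.mmap(fd, length, mmap.MAP_SHARED,
                         mmap.PROT_READ | mmap.PROT_WRITE)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed


def write_and_verify(regs, offset, value):
    """Write a 32-bit little-endian value at `offset`, then read it back.

    `regs` is any writable buffer (an mmap of the BAR, or a bytearray).
    Returns (before, after, stuck); `stuck` is True when the read-back still
    equals the old contents and differs from the value that was written.
    """
    (before,) = struct.unpack_from("<I", regs, offset)
    struct.pack_into("<I", regs, offset, value)
    (after,) = struct.unpack_from("<I", regs, offset)
    return before, after, (after == before and after != value)


# Usage (placeholders, adjust to the actual device, BAR and register):
#   regs = map_bar("/sys/bus/pci/devices/<bdf>/resource0", 0x1000)
#   print(write_and_verify(regs, REGISTER_OFFSET, NEW_VALUE))
```

Running the same check against a register that is known to be writable (such as the UDMA one mentioned below) helps separate a problem in the test itself from a write that is dropped on the way to the DRU.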

When using a different DMA controller, e.g. the NAVSS UDMA, we can write to the respective SWTRIG register (e.g. UDMA_TRT_SWTRIG_j) without problems, and our write triggers the DMA transfer. However, we would like to use the DRU, since its performance seems to be considerably better.

We had a look at the various system interconnect master-slave tables (chapter 3.3.1 Master-Slave Connections in the Rev. E TRM), but we're not sure where to find the DRU in these tables. Since reading from the DRU config registers works, we don't think it's a general lack of connectivity.

Since "read only" sounds a bit like a security issue, we also looked into the interconnect firewalls, but again we have no idea which firewall would be responsible for writes from PCIe to the DRU config registers.

- How can we trigger a DRU DMA transfer from an external PCIe RC?

- Is there more comprehensive documentation for the firewalls available? We found that the tables lack several firewall IDs for which TISCI_MSG_GET_FWL_REGION returns configuration information. There are some additional firewall IDs listed in pdk_am65xx_1_0_6\packages\ti\csl\soc\am65xx\dmsc\csl_soc_firewalls.h, but there are also discrepancies between that header and the firewall IDs that we found by querying the SCI client.

- Is there any other hardware except the interconnect firewalls that could limit our ability to write to the DRU registers from an external PCIe RC?

Regards,

Dominic