Hi,

1. If we want to use an mmWave sensor for a medical purpose, is there any hazard to human beings?

2. What safety limits do we have to work within? For example, what is the minimum distance between the human body and the sensor, and how much power can we use?

regards

Karthik S

Hi Joe,

We are thinking of using an isotropic antenna that radiates only within about 5-10 cm of its vicinity. We will keep the antenna gain low so that the output power is low.

The sensor will be 5 cm from the patient, using the minimum power that is still detectable after reflection.

Regarding "You would have to look at the proximity to the patient for different frequency ranges and output power": where can I find these details?

regards

Karthik S

Hi Joe,

Thanks for the info.

Just one more clarification: what is the minimum range resolution of the AWR/IWR? Some E2E discussions say 3.75 cm. Is this the minimum distance the radar can detect, or the sensor's ability to separate two objects that are 3.75 cm apart?
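For reference, the 3.75 cm figure follows from the standard FMCW relation d_res = c / (2B), where B is the swept bandwidth. With a 4 GHz sweep it comes to about 3.75 cm. The figure describes how far apart two reflectors must be to appear as separate peaks in the range FFT. It is not the closest distance the radar can measure. The sketch below simply evaluates that formula and is illustrative only.

```python
C = 299_792_458.0  # speed of light in m/s

def range_resolution_m(bandwidth_hz: float) -> float:
    """FMCW range resolution d_res = c / (2 * B), in metres."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return C / (2.0 * bandwidth_hz)

if __name__ == "__main__":
    # Wider sweeps give finer resolution; 4 GHz is the widest case shown here.
    for bw_ghz in (1.0, 2.0, 4.0):
        res_cm = range_resolution_m(bw_ghz * 1e9) * 100
        print(f"{bw_ghz:.0f} GHz sweep -> {res_cm:.2f} cm")
```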

1. I want to measure the range of an object from 50 cm down to 2 cm in steps of 1 cm. This is possible with the AWR/IWR, right?

2. If I want to build a system using the mmWave AWR/IWR, what are the essential items I need? For example, does the mmWave EVM board (299 USD) include all the software required for configuration, or do we need to buy that separately?

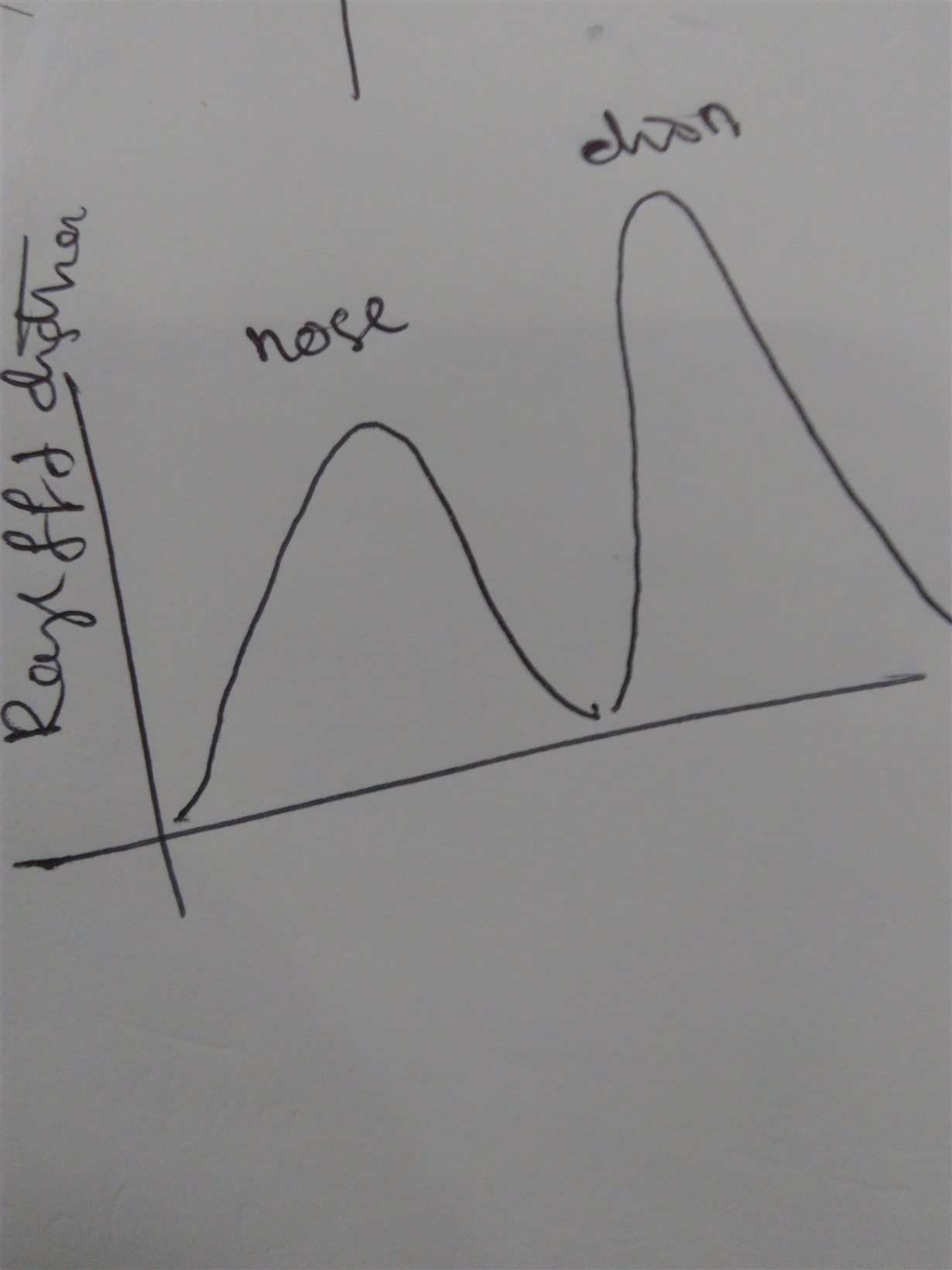

3. If I want to measure the distance to a human face, can this sensor give the distance to the nose and to other parts of the face? Ideally I want two distances: sensor to nose and sensor to chin. Is this possible with a 3.75 cm resolution (4 GHz sweep) between the nose and the chin?

Regards

Karthik s

Hi Joe,

Thanks for the detailed information.

Could you please explain the last part: to what extent can I separate the chin and nose distances using the phase of the signal? If the face is stationary, I feel there will be no phase change in the signal.

With the higher 60 GHz RF band, is it possible to meet my requirement of separating the nose and chin distances?

Basically, what am I supposed to do if I want my radar to detect the nose and chin as separate objects and give their distances separately?

Referring to the figure above, is it possible to find the distances R1 and R2 separately? Take the width of the projection as 2 cm and the flat portion as extending 6 cm, comparable to the nose and chin.

I was going through the tutorial document by Mr. Sandeep Rao from TI. It mentions that if two objects separated by 3.75 cm are at different distances from the sensor, the phases of their reflected chirps differ. Apply the same principle to the nose and chin. The nose projects further forward than the chin, so the sensor-to-nose path produces a reflected chirp with frequency f1 and phase1. The chin gives f2 (nearly equal to f1) and phase2. Can we process the phase information to get range information?

"The EVM and the Out of Box demonstration software are the initial items. If you want to do further radar Rx processing, the DCA1000 is needed, with mmWave Studio." With only the EVM and the Out of Box demo software, can we process phase information?
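Here is a minimal sketch of the phase relation, assuming a 77 GHz carrier. The round-trip phase of a reflector at distance d is phi = 4*pi*d/lambda, so a change delta_d shifts it by 4*pi*delta_d/lambda. Two consequences matter for the nose/chin idea:

- The phase repeats every lambda/2 (about 1.95 mm at 77 GHz). It can therefore track small changes, but it cannot by itself give an absolute offset of several cm.
- Reflectors that fall in the same range bin add into one complex value, so their individual phases are not observed separately.

The helper names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def wavelength_m(carrier_hz: float) -> float:
    return C / carrier_hz

def round_trip_phase_rad(distance_m: float, carrier_hz: float) -> float:
    """phi = 4*pi*d/lambda, wrapped to [-pi, pi) as a measurement would see it."""
    phi = 4.0 * math.pi * distance_m / wavelength_m(carrier_hz)
    return (phi + math.pi) % (2.0 * math.pi) - math.pi

def distance_change_m(delta_phase_rad: float, carrier_hz: float) -> float:
    """Inverse relation for a small change: delta_d = lambda * delta_phi / (4*pi)."""
    return wavelength_m(carrier_hz) * delta_phase_rad / (4.0 * math.pi)

if __name__ == "__main__":
    F0 = 77e9  # assumed carrier frequency
    half_wl = wavelength_m(F0) / 2
    print(f"phase repeats every {half_wl * 1000:.2f} mm of range")
    # Two reflectors exactly lambda/2 apart give the same wrapped phase,
    # so phase alone cannot reveal a nose-to-chin offset of several cm.
    print(round_trip_phase_rad(0.050, F0), round_trip_phase_rad(0.050 + half_wl, F0))
```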

What extra processing can be done with the DCA1000?

regards

Karthik s

Hi Joe,

1. "Related to range, velocity, and phase: there is a detection process where objects that exceed the detection conditions are active objects. You may need a method to detect the nose, lips, and chin as separate objects. A point cloud can be tracked, the center of one point cloud can be subtracted from the center of another point cloud, and a distance can be computed. Object detection can be done on distance, velocity, and phase information."

What I am thinking is to process the signals reflected by the nose and chin, if they can be detected. Because the nose projects forward, its reflected wave will have one phase and the chin's will have another, keeping a minimum separation dmin of 4 cm between nose and chin. I would then try to map this phase change to the extra distance travelled.

2. Could you please explain the concept of a point cloud?

3. I need an application where I get the range value as a number (not on a plot), e.g. 4 cm. I want to compare it with a threshold and, if it is below the threshold, drive a GPIO pin high. Can this be done with only the mmWave IC, which has a DSP and an ARM core? If yes, how?

4. "The DCA1000 is used to capture, or forward over Ethernet, the LVDS data that is output from the EVM. The initial configuration forwards the mmWave Rx DFE output or stores it." Could you please elaborate on this?

I want to get a range FFT as shown in the figure above.
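As an illustration of the centroid idea in point 1, here is a sketch that assumes the detections have already been grouped into a nose cluster and a chin cluster. The clustering step itself is not shown. The `Detection` layout and the axis convention (y along boresight, x to the side, z up) are assumptions, not the SDK's data format. Elevation needs a device with an elevation TX, such as the 1443 discussed later in this thread.

Subtracting centroids gives the nose-to-chin spacing. The sensor-to-nose distance is simply the smallest range in the nose cluster.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres

@dataclass
class Detection:
    """One point-cloud entry (hypothetical layout): range, radial velocity, angles."""
    range_m: float
    velocity_mps: float
    azimuth_rad: float
    elevation_rad: float = 0.0  # azimuth-only devices report no elevation

    def to_xyz(self) -> Point:
        # Assumed axes: y along boresight, x to the side, z up.
        horizontal = self.range_m * math.cos(self.elevation_rad)
        return (horizontal * math.sin(self.azimuth_rad),
                horizontal * math.cos(self.azimuth_rad),
                self.range_m * math.sin(self.elevation_rad))

def centroid(points: Iterable[Point]) -> Point:
    pts = list(points)
    if not pts:
        raise ValueError("empty cluster")
    n = len(pts)
    return (sum(p[0] for p in pts) / n,
            sum(p[1] for p in pts) / n,
            sum(p[2] for p in pts) / n)

def cluster_distance_m(a: Iterable[Detection], b: Iterable[Detection]) -> float:
    """Distance between the centroids of two already-clustered groups."""
    return math.dist(centroid(d.to_xyz() for d in a),
                     centroid(d.to_xyz() for d in b))

def nearest_range_m(cluster: Iterable[Detection]) -> float:
    """Sensor-to-object distance: the smallest range in the cluster."""
    return min(d.range_m for d in cluster)

if __name__ == "__main__":
    # Hypothetical clusters: nose slightly closer, chin lower (negative elevation).
    nose = [Detection(0.300, 0.0, 0.00), Detection(0.302, 0.0, 0.02)]
    chin = [Detection(0.330, 0.0, 0.00, -0.15), Detection(0.332, 0.0, 0.02, -0.15)]
    print(f"nose-to-chin spacing {cluster_distance_m(nose, chin) * 100:.1f} cm")
    print(f"sensor-to-nose range {nearest_range_m(nose) * 100:.1f} cm")
```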

Hello

1) If the person is breathing, deflections in the nose or cheek area could be detected in Doppler as well as in position.

The IWR1642, with its internal DSP and ARM control processor, can perform more post-processing than a device with only the Hardware Accelerator and the ARM control processor. The Industrial Control toolbox, with experiments and labs for testing out some of your ideas, is at "dev.ti.com/.../".

2) The point cloud is the data about the objects detected by the mmWave sensor processing; see page 5 of "www.ti.com/.../spry311.pdf":

- range, velocity, and angle of each detected object

3) The DSP in the IWR1642 can be used to develop algorithms that run after detection. For output, there are digital communication interfaces (the control UART, the MSS logger UART used by the Visualizer over USB, I2C, and SPI) as well as GPIO.

4) Put the mmWave sensor EVM on a tripod, adjust it relative to the target face, and use the Best Range Resolution setting. You should then be able to tune the parameters (following swra553, which covers the different chirp parameters) to get short-range range and velocity information.
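The chirp trade-offs in swra553 can be estimated with a few standard relations:

- The bandwidth actually sampled is slope × (ADC samples / sample rate).
- Range resolution is c / (2 × that bandwidth).
- For complex sampling, the ideal maximum beat frequency equals the sample rate, so the maximum range is fs·c / (2·slope).

This sketch assumes a 100 MHz/us slope, 240 samples, and the 6.25e6 complex rate quoted for the IWR1642 in this thread. It ignores ADC start time, ramp settling and the IF filter margin, so treat its numbers as optimistic.

```python
C = 299_792_458.0  # speed of light, m/s

def chirp_budget(slope_hz_per_s: float, num_adc_samples: int, sample_rate_hz: float):
    """Return (sampled bandwidth in Hz, range resolution in m, ideal max range in m)."""
    sampling_time_s = num_adc_samples / sample_rate_hz
    sampled_bw_hz = slope_hz_per_s * sampling_time_s
    range_res_m = C / (2.0 * sampled_bw_hz)
    # Complex 1x sampling: ideal max IF equals the sample rate. Real devices need
    # margin for the IF filter roll-off, so this is an optimistic upper bound.
    max_range_m = sample_rate_hz * C / (2.0 * slope_hz_per_s)
    return sampled_bw_hz, range_res_m, max_range_m

if __name__ == "__main__":
    # Assumed settings: 100 MHz/us slope, 240 samples, 6.25 Msps complex.
    bw, res, rmax = chirp_budget(100e12, 240, 6.25e6)
    print(f"sampled bandwidth {bw / 1e9:.2f} GHz, resolution {res * 100:.2f} cm, "
          f"max range {rmax:.2f} m")
```

Note that max range divided by the number of samples equals the range resolution, i.e. each FFT bin spans one resolution cell.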

I used 4 fps, with a side view and a front view. You can try this with other experiments.

front view (mmwave selfie)

side view

example config file

Regards,

Joe Quintal

Hi,

Thanks, Mr. Joe, for the explanation with the experiment.

In the last point, is the face treated as an object in front of the mmWave sensor?

What do "side view" and "front view" mean in the point 4 explanation?

If I need on-board processing with the IWR1642 but have to drive it with 4 antennas for a customized application, how should I proceed? I want to measure range from all 4 antennas simultaneously, using both TXs in the IC.

How exactly are the signals received on the 4 receivers differentiated during signal processing?
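Each receiver has its own RF and ADC chain, so the samples are kept per RX channel. For a given target, what differs between channels is mainly the phase, which changes by 2*pi*d*sin(theta)/lambda from one antenna to the next (d = antenna spacing). With two TX in TDM-MIMO (swra544), the TX alternate chirps, so each chirp's data can be attributed to one TX.

The sketch below estimates the angle from the average phase step across 4 RX, assuming ideal noise-free data and lambda/2 spacing. It is a simpler estimator than the angle FFT typically used in practice and is shown only to illustrate the principle.

```python
import cmath
import math
from typing import List

def simulate_rx(theta_rad: float, num_rx: int = 4, spacing_wl: float = 0.5) -> List[complex]:
    """Ideal complex value of one target (same range bin) on each receiver."""
    step = 2 * math.pi * spacing_wl * math.sin(theta_rad)
    return [cmath.exp(1j * k * step) for k in range(num_rx)]

def estimate_angle_rad(rx: List[complex], spacing_wl: float = 0.5) -> float:
    """Angle of arrival from the average phase step between neighbouring receivers."""
    # Summing conjugate products averages the per-pair phase step.
    acc = sum(rx[k + 1] * rx[k].conjugate() for k in range(len(rx) - 1))
    step = cmath.phase(acc)
    return math.asin(step / (2 * math.pi * spacing_wl))

if __name__ == "__main__":
    for deg in (-35.0, 0.0, 20.0):
        est = math.degrees(estimate_angle_rad(simulate_rx(math.radians(deg))))
        print(f"true {deg:+.1f} deg -> estimated {est:+.1f} deg")
```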

regards

Karthik

Hello

Front view: the radar antenna is in line with the face, i.e. the nose is closest to the radar antenna.

Side view: in this case the nose is further away than the cheek.

Regards,

Joe Quintal

Hi Joe,

Thank you so much for the experiment; now I have some idea of how my concept could work with mmWave. Just to clear up a few more points:

1. Is it possible to change the scale of the plot? In the figure above the range plot is in meters; I want it in cm steps so that I can see a clear difference between the nose and cheeks.

2. Could you please try the same experiment with the step changed to cm? I don't see the range value of the nose in the current range power plot; I can only see 4 points, all beyond 0.5 m.

3. Are there any examples of using a GPIO pin on the mmWave device?

4. In my case I only want range information. Can I use only one TX and one RX to get the first and second plots, or at least the range vs. power (dB) plot?

5. Could you please show what can be done with a single TX/RX combination in the same setup as above?
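On points 1, 4 and 5: range alone needs only one TX and one RX. Converting a bin index to cm is a single multiplication by the bin spacing c·fs / (2·slope·N).

The sketch below simulates the ideal beat signal of one point target at 30 cm and forms a range profile with a plain DFT. It uses assumed chirp settings (100 MHz/us, 6.25 Msps, 240 samples). A real capture would also show DC and high-pass-filter effects near 0 cm, noise, and usually a window; none of these are modelled.

```python
import cmath
import math

C = 299_792_458.0  # speed of light, m/s

def simulate_beat(range_m, slope_hz_per_s, fs_hz, n):
    """Ideal complex IF samples from one TX/RX pair for a single point target."""
    f_beat = 2.0 * slope_hz_per_s * range_m / C
    return [cmath.exp(2j * math.pi * f_beat * t / fs_hz) for t in range(n)]

def dft(x):
    """Plain O(N^2) DFT; a real implementation would use an FFT."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def range_profile_cm(samples, slope_hz_per_s, fs_hz):
    """Return (range_cm, magnitude) for every range bin."""
    n = len(samples)
    bin_cm = 100.0 * C * fs_hz / (2.0 * slope_hz_per_s * n)
    return [(k * bin_cm, abs(v)) for k, v in enumerate(dft(samples))]

if __name__ == "__main__":
    SLOPE, FS, N = 100e12, 6.25e6, 240  # assumed chirp settings
    profile = range_profile_cm(simulate_beat(0.30, SLOPE, FS, N), SLOPE, FS)
    peak_cm, _ = max(profile, key=lambda p: p[1])
    print(f"bin spacing {profile[1][0]:.2f} cm, strongest return near {peak_cm:.1f} cm")
```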

Please don't mind; I just need clarity so that I can be sure when proposing a solution with your mmWave sensor.

Regards

Karthik s

Hi Joe,

Sorry for the late reply.

"3) your picture seems to be a front view, if you are trying to detect the image of a nose and chin for biometric purposes, you would store a normalized point cloud. You would normalize position, and have to have some method so the angle from the radar antenna to the sensed person is nearly the same. You could then compare a normalized repositioned average point cloud to a stored parameter set, and set a GPIO for red(not OK)/green(OK). In this concept this has an analogy to an optical scanner, looking for a matching pattern"

I want to find the distance from the tip of the nose to the antenna and from the chin to the antenna as two separate range values. If a value is less than some threshold, I want to drive a GPIO pin high; otherwise it stays low. This is my application.
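The decision logic for this application is small; the harder part is getting reliable nose and chin ranges. This sketch assumes the rule is "pin high if either range is below the threshold" and that a missing detection leaves the pin low. On the device, this would be written in C and would drive the pin through the SDK's GPIO driver, which is not shown here. The function and class names are hypothetical.

```python
from typing import Optional

def gpio_should_be_high(nose_cm: Optional[float],
                        chin_cm: Optional[float],
                        threshold_cm: float) -> bool:
    """Assumed rule: high if any available reading is closer than the threshold.

    A missing reading (None) never triggers the pin.
    """
    readings = [r for r in (nose_cm, chin_cm) if r is not None]
    return any(r < threshold_cm for r in readings)

class HysteresisSwitch:
    """Hypothetical helper that avoids pin chatter when a reading hovers near the threshold."""

    def __init__(self, on_below_cm: float, off_above_cm: float):
        if off_above_cm < on_below_cm:
            raise ValueError("off threshold must be >= on threshold")
        self.on_below_cm = on_below_cm
        self.off_above_cm = off_above_cm
        self.high = False

    def update(self, nearest_cm: Optional[float]) -> bool:
        if nearest_cm is None:
            self.high = False  # no target: release the pin (assumed policy)
        elif nearest_cm < self.on_below_cm:
            self.high = True
        elif nearest_cm > self.off_above_cm:
            self.high = False
        # Between the two thresholds the previous state is kept.
        return self.high

if __name__ == "__main__":
    print(gpio_should_be_high(3.5, 8.0, threshold_cm=4.0))
    switch = HysteresisSwitch(on_below_cm=4.0, off_above_cm=5.0)
    print([switch.update(r) for r in (6.0, 3.9, 4.5, 5.1)])
```

The hysteresis band is a design choice. Radar range estimates jitter from frame to frame, and a single threshold would toggle the pin whenever the face sits near it.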

Can a point cloud help with this? How?

2. In the mmWave selfie image shown above, do the marked points indicate the nose and chin? If yes, can I see an example with the range plot in cm, so that I get the zoomed value?

regards

Karthik S

Hi Joe,

We are thinking of using the IWR1642 because of its on-board DSP and ARM.

We will configure only the best range resolution, about 4 cm, by adjusting the chirp. We will place the sensor in front of the face, take the FFT of the reflected signal, and zoom it using more samples. If we can get the nose point and the part of the chin that is 4 cm from the nose, we will try to differentiate them (using the Visualizer and DSP processing).

Is it possible to do it this way?
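One way to read "zooming the FFT with more samples" is to evaluate the spectrum on a finer frequency grid around the coarse peak (a zoom DFT). This sketch assumes an ideal single tone at fractional bin 7.685 out of 240. Zooming locates a single peak more precisely. It does not improve the ability to separate two reflectors closer than c/(2B), because that limit is set by the swept bandwidth.

```python
import cmath
import math

def dtft_at(x, k):
    """Spectrum of x evaluated at a (possibly fractional) bin index k."""
    n = len(x)
    return sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))

def zoom_peak(x, coarse_bin, zoom=16, span=1.0):
    """Refine a peak by sampling the spectrum every 1/zoom bin within +/- span bins."""
    steps = int(round(2 * span * zoom)) + 1
    grid = [coarse_bin - span + i / zoom for i in range(steps)]
    return max(grid, key=lambda k: abs(dtft_at(x, k)))

if __name__ == "__main__":
    N = 240
    true_bin = 7.685  # assumed: a target lying between two range bins
    x = [cmath.exp(2j * math.pi * true_bin * t / N) for t in range(N)]
    coarse = max(range(N), key=lambda k: abs(dtft_at(x, k)))
    fine = zoom_peak(x, coarse)
    print(f"coarse bin {coarse}, zoomed bin {fine:.4f}")
```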

Does the first sentence of your last post match my explanation?

Could you please explain the same thing for the IWR1642? Is the procedure the same or different?

"Because of the HPF1, HPF2 response there is a null in front of the radar within a few cms. You could collect data from the data capture example, and post process to zoom into the object, however the ZOOM FFT provides a single point, you would need to calculate the zoomed FFT over more samples."- what does this mean?

Can this fine detection be tried with the AWR1642 as well? We are having some difficulty obtaining the IWR.

I learned today that you also have a specific ODS version of the AWR with a wide FOV.

Can industrial applications like mine be implemented with the ODS, since we only want to detect range? What is the difference between the AWR1642 BOOST, IWR1642 BOOST, and AWR1642 ODS in terms of performance?

regards

Karthik

Hello Karthik,

The HPF1 and HPF2 portions of these mmWave sensors are the same.

The main difference between the mmWave sensors is that the 1642 has 2 Tx and 4 Rx, while the 1443 has 3 Tx and 4 Rx. The extra Tx is used for elevation.

There is a MIMO application note, swra544, that discusses TDM and BPM MIMO for better azimuth angular resolution.

The DSP can be used for other functions in the 1642 applications.

The IWR1443 has a higher DFE output rate (18.75e6 complex 1x) than the IWR1642 (6.25e6 complex 1x).

The 1443 and 1642 BOOST EVMs are intended for medium-range (~50 m) use; the antenna pattern is shown in the EVM User Guide.

In the ODS EVM, the antenna is set up for equal coverage in both azimuth and elevation.

You could try the ODS EVM as well, to see which antenna pattern works best for your application.

In your example, a dielectric lens may help to focus the mmWave FMCW radar: https://www.thorlabs.com/NewGroupPage9.cfm?ObjectGroup_ID=1627

Regards,

Joe Quintal

Hi Joe,

I want to understand how to do the post-processing after recording 100 frames from the Visualizer.

Please help me with the following. As discussed, I will set the 1642 for best azimuth resolution, and the nose and chin tips will produce reflected waves that are captured by the Visualizer. Where are those values? How do I take them into CCS for processing?

I want to get the range value of the nearest object and compare it with a threshold; if it is below the threshold, a GPIO pin should be driven high. Please help me achieve this. How do I go about it? I could not find any document about enabling/disabling GPIO ports.

Could you please send a link to the zoom FFT lab? I searched your site but could not find it.

Hi Joe,

What can be done with only the IWR1642 DSP? Can I do the point cloud processing you described earlier without the DCA1000?

If I get the range plot, where is the value I can use for comparison?

Say I have a signal reflected from a face and I get the range or angle plot as shown in your experiment images. Where is the range value that is displayed on the plot?

I would like to process the data in the built-in DSP itself to get a zoomed FFT and range values at 4 cm resolution. I should be able to get two range FFT peaks 4 cm apart when I set the chirp configuration to maximum resolution. Can this be achieved without the DCA1000, i.e. with only the IWR1642 DSP and ARM?
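A simple way to look for two separate returns is to list the local maxima in the range profile that exceed a threshold. This sketch is illustrative; the threshold and the 3.75 cm bin spacing are assumed values. With bins of roughly 3.75 cm, two reflectors 4 cm apart land about one bin apart. Depending on their relative amplitude and phase, and on any window, they may show up as two peaks or merge into one. The second example profile shows the merged outcome.

```python
from typing import List, Sequence

def local_peaks(mag: Sequence[float], threshold: float) -> List[int]:
    """Bins that exceed threshold and are local maxima of the range profile."""
    return [k for k in range(1, len(mag) - 1)
            if mag[k] > threshold and mag[k] > mag[k - 1] and mag[k] >= mag[k + 1]]

def peaks_cm(mag: Sequence[float], threshold: float, bin_cm: float) -> List[float]:
    """Convert peak bins to range in cm using an assumed bin spacing."""
    return [k * bin_cm for k in local_peaks(mag, threshold)]

if __name__ == "__main__":
    separated = [0, 1, 5, 2, 6, 1, 0]  # a dip between the two returns
    merged = [0, 1, 5, 6, 1, 0]        # no dip: the two returns blur into one peak
    print(peaks_cm(separated, 3, 3.75), peaks_cm(merged, 3, 3.75))
```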

Please explain the different points in the range and angle plots of the mmWave selfie. I see two very close objects; are they two points on the face or two different objects? (The image is attached in the last 2 posts.)

Regards

Karthik S

Hi Joe,

I agree with your point about phase processing.

To state my requirement in one line: in the mmWave selfie, I want to measure the distance from the sensor to the nose tip. What do I have to do in terms of processing?

If I use the point cloud and subtract the centers, I will get the distance between the nose and chin, but I need an accurate distance between the nose tip and the sensor.

I went through the vital signs lab but could not identify the phase processing part.
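The general idea behind vital-signs-style phase processing can be sketched in four steps:

1. Pick the range bin that contains the target.
2. Take the phase of its complex value in every frame.
3. Unwrap the phase.
4. Scale by lambda/(4*pi) to get displacement.

This is a generic illustration with hypothetical names, not the lab's source code. It assumes a 77 GHz carrier and a simulated 1 mm motion. It also shows why a perfectly stationary face produces no phase change: the method measures motion relative to the first frame, not absolute distance.

```python
import cmath
import math

C = 299_792_458.0  # speed of light, m/s

def unwrap(phases):
    """Remove 2*pi jumps from a sequence of wrapped phases (like numpy.unwrap)."""
    if not phases:
        return []
    out = [phases[0]]
    for p in phases[1:]:
        step = (p - out[-1] + math.pi) % (2 * math.pi) - math.pi  # wrap step to [-pi, pi)
        out.append(out[-1] + step)
    return out

def displacement_mm(bin_values, carrier_hz):
    """Displacement per frame, relative to frame 0, from one range bin's complex value."""
    lam = C / carrier_hz
    phases = unwrap([cmath.phase(v) for v in bin_values])
    return [1000.0 * lam * (p - phases[0]) / (4 * math.pi) for p in phases]

if __name__ == "__main__":
    F0 = 77e9  # assumed carrier
    lam = C / F0
    # Simulated 1 mm sinusoidal motion of a target sitting at 5 cm.
    truth_m = [0.001 * math.sin(2 * math.pi * f / 50) for f in range(100)]
    frames = [cmath.exp(1j * 4 * math.pi * (0.05 + d) / lam) for d in truth_m]
    est = displacement_mm(frames, F0)
    print(f"max error {max(abs(e - 1000 * d) for e, d in zip(est, truth_m)):.2e} mm")
```

Unwrapping only works if the target moves less than lambda/4 (about 1 mm at 77 GHz) between frames, which constrains the frame rate.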

As far as I understand, the FFT of the signal reflected from the face would look like a single peak. I am interested in the signal reflected from the nose, since I need the shortest distance; the same would apply to a leg, or to the tip of a finger. Please help me with this.
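For "shortest distance", one common approach is to run a CFAR detector over the range profile. You then take the first detection beyond a minimum bin, which skips the near-range high-pass-filter region mentioned in the HPF1/HPF2 quote above. This is a sketch of a 1-D cell-averaging CFAR with assumed guard, training and scale values. The demos' own detection is configured separately and may differ in detail.

```python
from typing import List, Optional, Sequence

def ca_cfar(power: Sequence[float], guard: int = 1, train: int = 4,
            scale: float = 4.0) -> List[int]:
    """1-D cell-averaging CFAR on a linear-power range profile."""
    n = len(power)
    hits = []
    for k in range(n):
        # Training cells on both sides, skipping the guard cells around k.
        cells = [power[i]
                 for i in range(k - guard - train, k + guard + train + 1)
                 if 0 <= i < n and abs(i - k) > guard]
        if cells and power[k] > scale * sum(cells) / len(cells):
            hits.append(k)
    return hits

def nearest_range_cm(power: Sequence[float], bin_cm: float, min_bin: int = 0,
                     **cfar_args) -> Optional[float]:
    """Range of the closest CFAR detection at or beyond min_bin, or None."""
    hits = [k for k in ca_cfar(power, **cfar_args) if k >= min_bin]
    return hits[0] * bin_cm if hits else None

if __name__ == "__main__":
    profile = [1.0] * 40                     # assumed flat noise floor (linear power)
    profile[10], profile[20] = 50.0, 80.0    # two hypothetical returns
    print(ca_cfar(profile), nearest_range_cm(profile, bin_cm=3.9, min_bin=2))
```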

I went through the 2 labs you mentioned. Could you please tell me which .c or .h file I should look into for the phase information?

And which file should be modified for customization?

It would be good if you could show which part of the code I need to look at to compare the range value with a threshold and activate a GPIO. When I studied the Doxygen documentation, everything is explained in terms of memory, so how exactly do I compare the values?

regards

Karthik

Regards'Karthik