Other Parts Discussed in Thread: CC3100

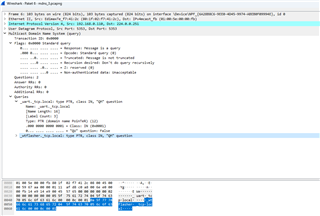

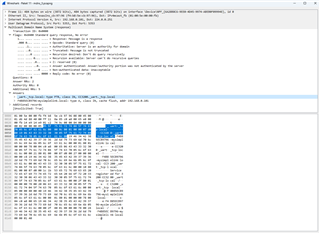

We use the CC3200 to connect four of our devices to a single access point. An app on an end device (Android, iOS, Win10) is connected to the same access point and searches for the connected devices via mDNS.

All devices with a CC3200 are found, but not reliably and not always all at the same time. To investigate the behavior further, we set up a test program and collected some simple statistics. For comparison and reference, we also placed a Raspberry Pi in this network. It is found 100% of the time, but the devices with CC3200 are not. To rule out the influence of our own hardware, we repeated the test with a LaunchXL rev. 4.1 with the latest service pack, running the mDNS example from SDK 1.5, with the same result.
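A benchmark of this kind could be sketched roughly as follows. This is only an illustration, not the test program used for the numbers below. It assumes a one-shot mDNS PTR query sent from an ephemeral port, so responders answer by unicast, and it identifies devices by the source IP of each reply. The service type `_http._tcp.local`, the helper names `build_ptr_query`, `scan_once` and `discovery_rates`, and all IP addresses are hypothetical.

```python
import socket
import struct
import time

MDNS_GROUP = "224.0.0.251"  # IPv4 mDNS multicast address
MDNS_PORT = 5353


def build_ptr_query(service: str) -> bytes:
    """Build a minimal DNS query packet asking for PTR records of `service`."""
    # Header: ID=0, flags=0, QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0
    header = struct.pack("!6H", 0, 0, 1, 0, 0, 0)
    # QNAME: length-prefixed labels terminated by a zero byte
    labels = service.strip(".").split(".")
    qname = b"".join(bytes([len(p)]) + p.encode("ascii") for p in labels) + b"\x00"
    # QTYPE=12 (PTR), QCLASS=1 (IN)
    return header + qname + struct.pack("!2H", 12, 1)


def scan_once(service: str = "_http._tcp.local", timeout: float = 1.0) -> set:
    """Send one query and return the source IPs of all replies seen in time."""
    found = set()
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, 255)
        sock.sendto(build_ptr_query(service), (MDNS_GROUP, MDNS_PORT))
        deadline = time.monotonic() + timeout
        while True:
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                break
            sock.settimeout(remaining)
            try:
                _data, (addr, _port) = sock.recvfrom(9000)
            except socket.timeout:
                break
            found.add(addr)
    return found


def discovery_rates(scan, devices: dict, rounds: int = 25, pause: float = 0.0) -> dict:
    """Run `scan` `rounds` times; return the percentage of rounds each device was seen.

    `scan` is any callable returning a set of IPs; `devices` maps name -> IP.
    """
    hits = {name: 0 for name in devices}
    for _ in range(rounds):
        seen = scan()
        for name, addr in devices.items():
            if addr in seen:
                hits[name] += 1
        if pause:
            time.sleep(pause)
    return {name: 100.0 * n / rounds for name, n in hits.items()}


if __name__ == "__main__":
    # Hypothetical addresses; replace with the real ones on the test network.
    devices = {"device 1": "192.168.1.21", "rpi": "192.168.1.50"}
    for name, rate in discovery_rates(scan_once, devices, rounds=25).items():
        print(f"{name} {rate:.0f}%")
```

Passing the scanner as a callable keeps the tally independent of the discovery method, so the same loop could be run against a platform mDNS browser instead of the raw socket query to check whether the miss rate depends on how the query is sent.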

Has anyone seen this problem before, and where can we look for the cause or a solution?

Two example statistics:

--------------------------------------------------------------------------------------------------

Benchmark 1 MDNS

Scan 25 times in 19s

--------------------------------------------------------------------------------------------------

device 1 20%

device 2 52%

device 3 44%

device 4 44%

rpi 100%

...

--------------------------------------------------------------------------------------------------

Benchmark 4 MDNS

Scan 25 times in 125s

--------------------------------------------------------------------------------------------------

device 1 16%

device 2 100%

device 3 68%

device 4 92%

rpi 100%

--------------------------------------------------------------------------------------------------