Other Parts Discussed in Thread: Z-STACK, CC2538

We are having an issue with a smart home gateway with a CC1352 and the Z-Stack Linux gateway. After a certain number of commands have been sent to a smart plug, we get the following message:

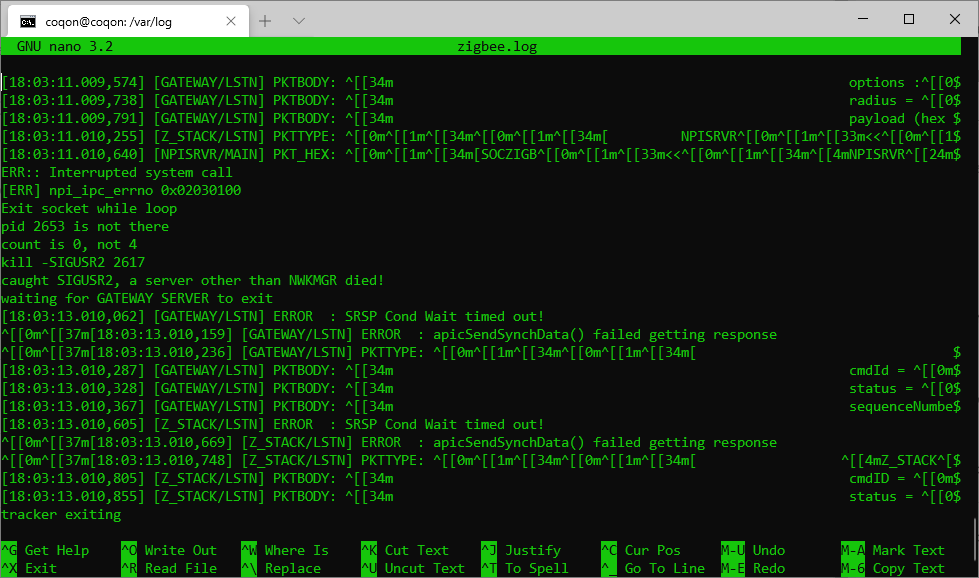

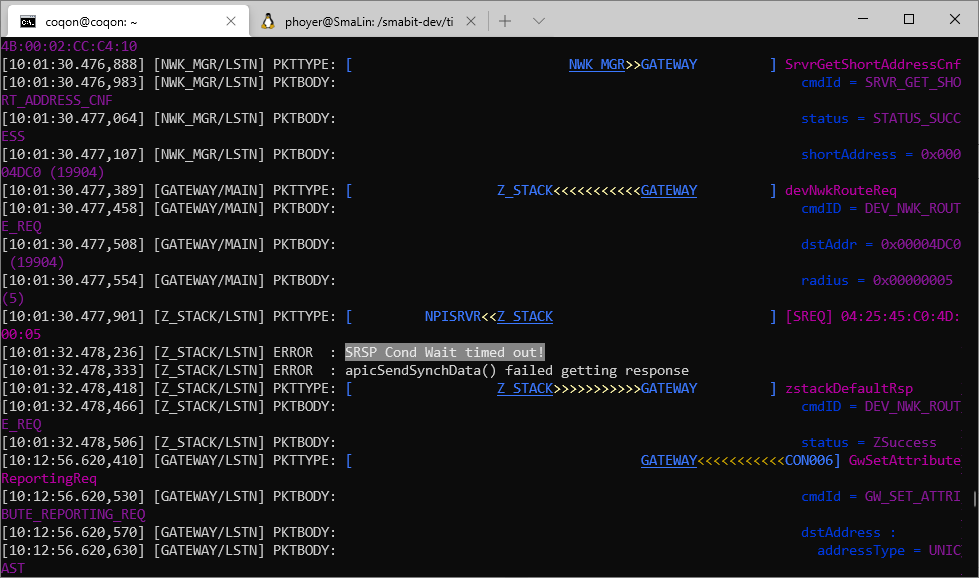

[16:54:21.697,127] [Z_STACK/LSTN] ERROR : SRSP Cond Wait timed out!

[16:54:21.696,797] [GATEWAY/LSTN] ERROR : SRSP Cond Wait timed out!

[16:54:21.696,894] [GATEWAY/LSTN] ERROR : apicSendSynchData() failed getting response

I doubt that this is related to UART speed or other timing parameters, because the failure seems to depend on the number of commands sent rather than on the interval between them:

- 2-second interval: fails after about 50 minutes

- 5-second interval: fails after about 3 hours

- 15-second interval: fails after about 10 hours
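As a rough check of that claim, the figures above can be converted into an approximate command count per run. This is only a sketch: the durations are the approximate values from the list, and it assumes exactly one command is sent per interval.

```python
# Approximate number of commands sent before the first timeout,
# using the interval and time-to-failure figures from the list above.
# Assumption: exactly one command is sent per interval.
observations = [
    (2, 50 * 60),        # 2 s interval, about 50 minutes
    (5, 3 * 60 * 60),    # 5 s interval, about 3 hours
    (15, 10 * 60 * 60),  # 15 s interval, about 10 hours
]


def commands_until_failure(interval_s, duration_s):
    """Return the number of whole intervals that fit into the run time."""
    return duration_s // interval_s


counts = [commands_until_failure(i, d) for i, d in observations]

for (interval_s, duration_s), n in zip(observations, counts):
    print(f"{interval_s:>2} s interval: ~{n} commands in {duration_s / 3600:.1f} h")
```

The run time varies by a factor of 12 between the shortest and longest test, while the estimated command count only varies between roughly 1500 and 2400, which is why a command-count-related limit looks more plausible to me than a timing problem.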

When running a test script that changes the plug state every x seconds, at some point we stop receiving the state (this is when we get the timeout message), but the plug continues to switch correctly. After another 20 minutes or so, the plug also stops switching. For this second stage I cannot find a specific point in the log; it is only visible on the plug itself.

Could it be some counter or buffer that overflows after some time?

In the attached log you can find the event at 16:54:21.697,127. If needed, we can provide further logs. The problem happens with different units (gateway and plug), so it is not related to a specific piece of hardware.
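To locate the same event in other traces, something like the following could be used. It is a minimal sketch that assumes the log lines have the format shown in the excerpt above and that the raw file may contain ANSI color codes; the function name `find_srsp_timeouts` and the file name in the usage note are hypothetical.

```python
import re

# ANSI color sequences such as ESC[0m or ESC[37m that may be present in the raw log.
ANSI_RE = re.compile(r"\x1b\[[0-9;]*m")

# Matches entries like:
# [16:54:21.697,127] [Z_STACK/LSTN] ERROR : SRSP Cond Wait timed out!
TIMEOUT_RE = re.compile(
    r"\[(\d{2}:\d{2}:\d{2}\.\d{3},\d{3})\]\s*"  # timestamp
    r"\[([^\]]+)\]\s*"                          # module/thread tag
    r"ERROR\s*:\s*SRSP Cond Wait timed out!"
)


def find_srsp_timeouts(log_text):
    """Return (timestamp, module) for every SRSP timeout entry, in file order."""
    clean = ANSI_RE.sub("", log_text)
    return [(m.group(1), m.group(2)) for m in TIMEOUT_RE.finditer(clean)]
```

For example, `find_srsp_timeouts(open("gateway.log").read())` (with `gateway.log` as a placeholder file name) would list every timeout entry, so the first one can be compared against the number of commands sent up to that point.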

The ZigBee gateway version is the latest (the exact versions of each module are listed at the beginning of the trace), and the Z-Stack on the module is 4.20.00.35.

Regards

Peter