I am trying to estimate the performance of real-time (RT) Linux running on one of TI's new SoCs. What's the best way to do that?


Note that RT Linux offers more deterministic, lower-latency response than standard Linux, but RT Linux is NOT a true real-time operating system (RTOS). For more information about real-time performance on the different cores, refer to the FAQ "Sitara multicore system design: How to ensure computations occur within a set cycle time?"

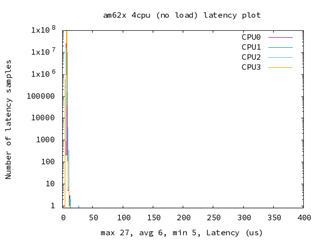

RT Linux performance is typically measured as interrupt latency (also called interrupt response time): the delay between an event, such as a timer expiring, and the moment the code that responds to it starts running. For more information about interrupt latency, refer to the Linux section of "Sitara multicore system design: How to ensure computations occur within a set cycle time?"
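
To make the measurement concrete, here is a minimal sketch of the basic idea behind a timer-based latency test such as cyclictest: a thread repeatedly sleeps until an absolute deadline with `clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, ...)` and records how late it actually woke up. The function name `measure_wakeup_latency` and its parameters are hypothetical, chosen for illustration only. This is not cyclictest's code, and the measured value includes both the interrupt latency and the scheduling delay before the thread runs.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stdint.h>
#include <time.h>

#define NSEC_PER_SEC 1000000000LL

/* Convert a timespec to a single nanosecond count. */
static int64_t ts_to_ns(const struct timespec *ts)
{
    return (int64_t)ts->tv_sec * NSEC_PER_SEC + ts->tv_nsec;
}

/* Advance a timespec by a positive interval_ns, keeping tv_nsec in [0, 1e9). */
static void ts_add_ns(struct timespec *ts, int64_t interval_ns)
{
    int64_t nsec = (int64_t)ts->tv_nsec + interval_ns;
    ts->tv_sec += (time_t)(nsec / NSEC_PER_SEC);
    ts->tv_nsec = (long)(nsec % NSEC_PER_SEC);
}

/*
 * Hypothetical helper: wake up every interval_us microseconds, `loops` times,
 * and record how late each wake-up was relative to its absolute deadline.
 * Min/max/average latency (ns) are written to the output pointers.
 * Returns 0 on success, -1 on bad arguments or a clock error.
 */
int measure_wakeup_latency(int loops, long interval_us,
                           int64_t *min_ns, int64_t *max_ns, int64_t *avg_ns)
{
    struct timespec next, now;
    int64_t sum = 0;

    if (loops <= 0 || interval_us <= 0)
        return -1;
    *min_ns = INT64_MAX;
    *max_ns = INT64_MIN;

    if (clock_gettime(CLOCK_MONOTONIC, &next) != 0)
        return -1;

    for (int i = 0; i < loops; i++) {
        int rc;

        /* Absolute deadlines keep the period from drifting over time. */
        ts_add_ns(&next, (int64_t)interval_us * 1000);

        /* clock_nanosleep returns an error number directly; retry on EINTR. */
        do {
            rc = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL);
        } while (rc == EINTR);
        if (rc != 0)
            return -1;

        if (clock_gettime(CLOCK_MONOTONIC, &now) != 0)
            return -1;

        /* Latency = actual wake-up time minus requested wake-up time. */
        int64_t lat = ts_to_ns(&now) - ts_to_ns(&next);
        if (lat < *min_ns)
            *min_ns = lat;
        if (lat > *max_ns)
            *max_ns = lat;
        sum += lat;
    }
    *avg_ns = sum / loops;
    return 0;
}
```

For meaningful numbers on a target, the measuring thread would normally run at a real-time priority (`SCHED_FIFO`, set with `sched_setscheduler` or `pthread_setschedparam`) with memory locked via `mlockall`, which cyclictest can do with its `-p` and `-m` options. The sketch omits these so that it runs without root privileges.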

For more information about measuring interrupt latency with cyclictest, refer to the FAQ "AM625: How to measure interrupt latency on multicore Sitara devices using cyclictest": https://e2e.ti.com/support/processors-group/processors/f/processors-forum/1172055/faq-am625-how-to-measure-interrupt-latency-on-multicore-sitara-devices-using-cyclictest
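
When reading latency results for a loop with a fixed cycle time, the worst-case (maximum) latency usually matters more than the average, because a single late wake-up can cause a missed deadline. cyclictest can print a histogram itself (its `-h`/`--histogram` option). The sketch below only illustrates that kind of post-processing on latency samples collected in nanoseconds. The function `latency_histogram`, the 1 µs bucket width, and the `deadline_ns` threshold are hypothetical choices for illustration.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical helper: sort latency samples (ns) into 1 us histogram buckets.
 * Samples of (nbuckets - 1) us or more land in the last (overflow) bucket;
 * negative samples are clamped into bucket 0. nbuckets must be >= 1.
 * *overruns receives the number of samples above deadline_ns.
 * Returns the worst-case (maximum) latency in ns, or 0 if n == 0.
 */
int64_t latency_histogram(const int64_t *samples_ns, size_t n,
                          uint32_t *buckets, size_t nbuckets,
                          int64_t deadline_ns, size_t *overruns)
{
    int64_t worst = 0;

    for (size_t b = 0; b < nbuckets; b++)
        buckets[b] = 0;
    *overruns = 0;

    for (size_t i = 0; i < n; i++) {
        int64_t s = samples_ns[i];
        int64_t us = s > 0 ? s / 1000 : 0;   /* whole microseconds */
        size_t idx = (us >= (int64_t)(nbuckets - 1)) ? nbuckets - 1 : (size_t)us;

        buckets[idx]++;
        if (s > deadline_ns)
            (*overruns)++;
        if (i == 0 || s > worst)
            worst = s;
    }
    return worst;
}
```

A long tail in the overflow bucket, or a nonzero overrun count, points to latencies that could break a fixed cycle time even when the average looks small.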


For other FAQs about multicore topics, refer to "Sitara multicore development and documentation".