Hi,

I'm using a TLV320AIC3254 in an embedded system, and I would like to use its miniDSP to compute the amplitude and phase of the 18 kHz component of a signal.

The codec is programmed by an STM32F427 microcontroller over SPI, and samples are collected over SAI.

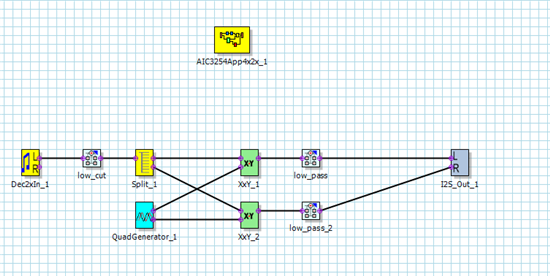

I used PurePath to design this process flow:

(see output script at the end)

Using the QuadGenerator, I can bring the 18 kHz component down to baseband with a pi/2 phase shift between the two channels, and then use these two signals to compute the amplitude and phase of the target component.
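To make the intended computation concrete, here is a minimal Python sketch of the same quadrature demodulation done offline. It is not the PurePath flow itself: the function name `quadrature_demod`, the 48 kHz sample rate and the synthetic test tone are assumptions chosen only for illustration.

```python
import math

def quadrature_demod(samples, fs, f0):
    """Estimate amplitude and phase of the f0 component of `samples`.

    The signal is multiplied by cos and sin at f0 (two references that are
    pi/2 apart), and each product is averaged to keep the baseband term.
    """
    i_acc = 0.0
    q_acc = 0.0
    for n, x in enumerate(samples):
        w = 2.0 * math.pi * f0 * n / fs
        i_acc += x * math.cos(w)   # in-phase product
        q_acc += x * math.sin(w)   # quadrature product
    count = len(samples)
    i_mean = 2.0 * i_acc / count   # equals A*cos(phi) for x = A*cos(w*n + phi)
    q_mean = 2.0 * q_acc / count   # equals -A*sin(phi)
    amplitude = math.hypot(i_mean, q_mean)
    phase = math.atan2(-q_mean, i_mean)
    return amplitude, phase

# Assumed values for illustration only (not taken from my setup).
FS = 48000.0   # sample rate in Hz (assumption)
F0 = 18000.0   # frequency of interest in Hz
tone = [0.5 * math.cos(2.0 * math.pi * F0 * n / FS + 0.3) for n in range(4800)]
amp, ph = quadrature_demod(tone, FS, F0)
```

On a clean tone the amplitude estimate should be constant from block to block, which is why the jumps described below are unexpected.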

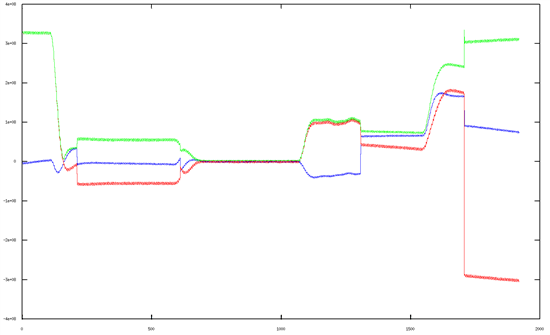

Unfortunately, when I plot the measured values in Octave, I see various jumps in the measurements:

(blue is the left channel, red is the right one, and green is the norm of the first two: green = sqrt(blue² + red²))

We can see the jumps around 200, 600, 1300 and 1800.

I also recorded the raw output and multiplied it by sines in Octave, and the same result occurred, so it is the ADC output itself that is affected, not the sines.

I don't know where these jumps come from. I assumed it was some sort of AGC, but I disabled it and verified afterwards that the register was correctly configured.

Can you tell me where this effect could come from and how I could disable it?

Here is my output code from PurePath (I have only modified two lines, to route the correct input and to set the data size to 32 bits).

Thanks!