Hi,

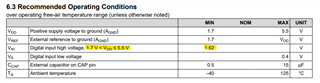

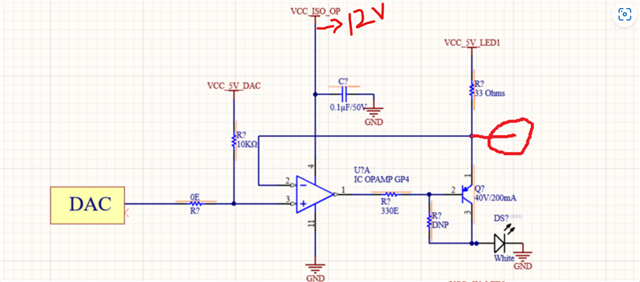

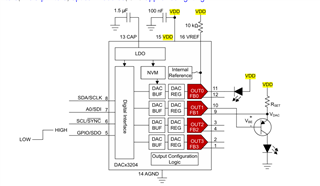

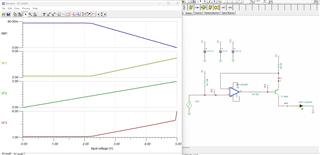

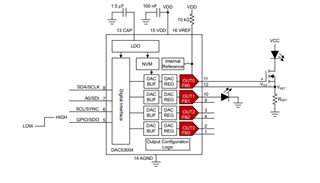

I plan to use the DAC63204 for LED brightness control in my design. The microcontroller in the design runs at 3.3 V. Is it possible to power the DAC63204 from 3.3 V so that no level translators are needed on the I2C and SPI lines?

Thank you.

Regards,

Jeevan