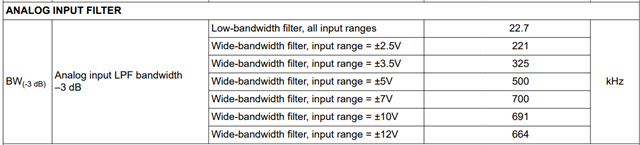

I'm working on a data acquisition system using the AD9813. When I measure the input signal at the AD9813's input pins with an oscilloscope, the signal amplitude remains constant across the frequency range of 40 kHz to 400 kHz. However, when I capture the digitized data with the FPGA's ILA (Integrated Logic Analyzer), I see a distinct trend: as the input frequency increases from 40 kHz to 400 kHz, the amplitude of the captured digital waveform decreases significantly.

The analog front-end signal appears stable and consistent across the entire frequency spectrum when verified by the oscilloscope, but the digitized output data shows a clear frequency-dependent amplitude reduction. I've confirmed that the input signal reaches the AD9813's input pins with consistent amplitude regardless of frequency.
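
To put numbers on the roll-off, the ILA captures can be exported and compared frequency by frequency. Below is a minimal sketch in Python, assuming each capture is available as a list of integer ADC codes. The function names, the 10 MHz sample rate, and the synthetic sine data are all hypothetical placeholders for illustration, not values from my setup or from any vendor tool.

```python
import math


def peak_to_peak(samples):
    """Return the peak-to-peak amplitude of a captured waveform, in ADC codes."""
    return max(samples) - min(samples)


def rolloff_db(captures):
    """Return {frequency_hz: amplitude in dB relative to the lowest frequency}.

    `captures` maps each input frequency (Hz) to its list of captured samples.
    """
    ref_freq = min(captures)
    ref_amp = peak_to_peak(captures[ref_freq])
    return {
        freq: 20.0 * math.log10(peak_to_peak(samples) / ref_amp)
        for freq, samples in sorted(captures.items())
    }


def synthetic_capture(freq_hz, amplitude, sample_rate_hz=10e6, n=2000):
    """Hypothetical stand-in for exported ILA data: a sine wave in ADC codes.

    The sample rate is an assumed value, chosen only to make the example run.
    """
    return [
        round(amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz))
        for i in range(n)
    ]


if __name__ == "__main__":
    # Placeholder amplitudes; replace with real exported captures.
    captures = {
        40e3: synthetic_capture(40e3, 1000),
        400e3: synthetic_capture(400e3, 500),
    }
    for freq, db in rolloff_db(captures).items():
        print(f"{freq / 1e3:.0f} kHz: {db:+.2f} dB")
```

Peak-to-peak on sampled data slightly underestimates the true amplitude when there are only a few samples per period, so a table of dB values from real captures at several frequencies is more useful than a single pair of points.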

Could you help explain the possible reasons for this discrepancy between the analog signal measured by the oscilloscope and the digital waveform captured by the FPGA's ILA? Are there specific characteristics of the AD9813, or configuration considerations, that might cause this frequency-dependent amplitude variation in the digitized output?

I'd appreciate any insights or guidance on troubleshooting this issue.