Hello,

I am trying to compare the MSC1211 and MSC1210. In the MSC1211 data sheet, the internal voltage reference is specified for a Vref clock of 250 kHz. Am I correct in understanding that this is a maximum frequency?

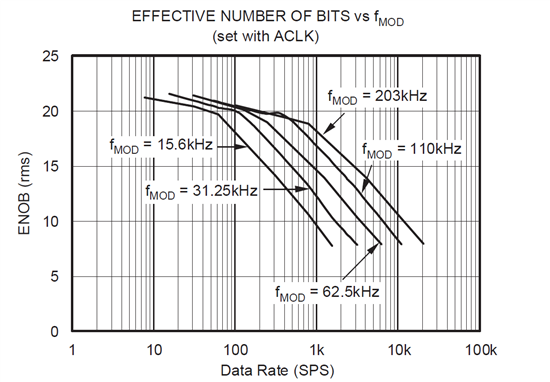

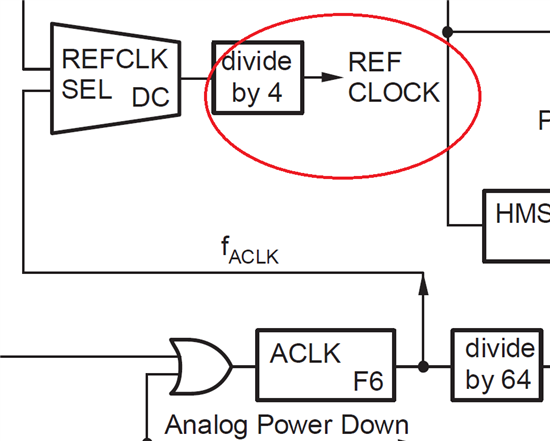

The MSC1210 data sheet in a similar table states "ACLK = 1 MHz." Does that mean the ACLK divider must be set so that the Vref clock is 1 MHz? Is that a minimum or a maximum? What are the consequences of using a different frequency?

I am asking this because the ADC rate depends on the same branch of the clock division tree, and it would be helpful to know how constrained the ADC rate really is, especially with the MSC1210.

Thank you,

Chris Hakim