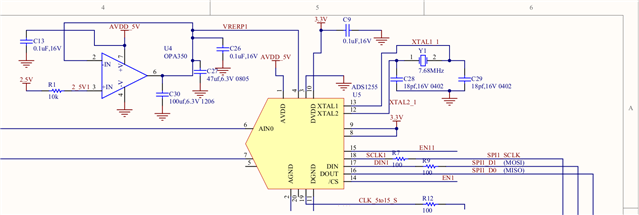

I'm trying to debug my ADS1255s, which have been reporting incorrect voltages when presented with a known input. I have 4 on the same board with daisy-chained 7.68 MHz clock signals, but the diagram shows just one of them.

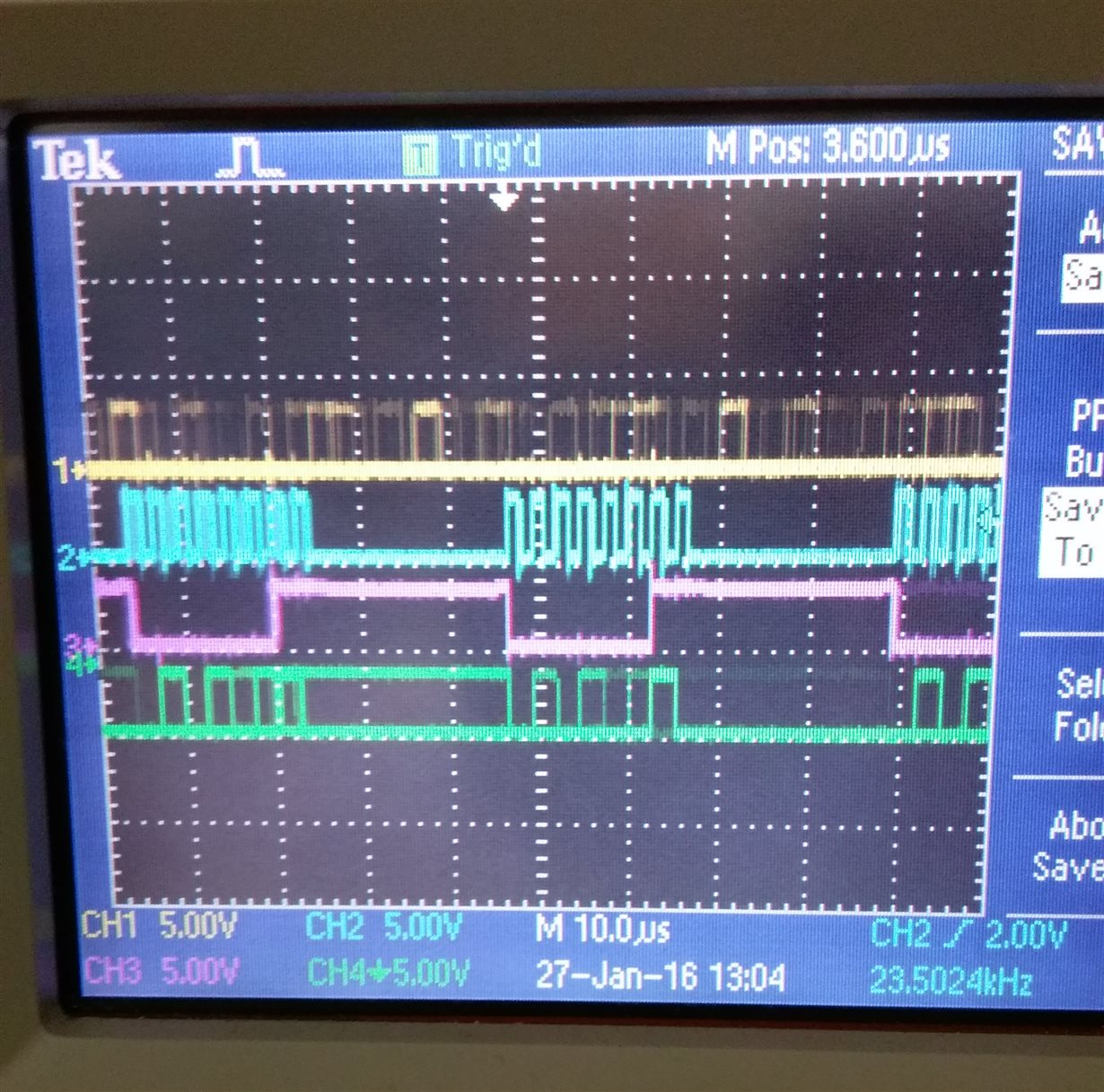

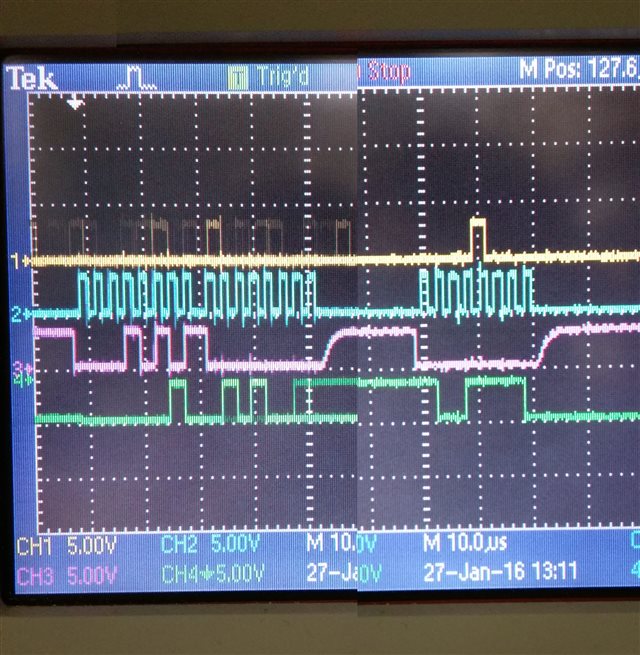

Right now, after performing a self-calibration, the readings I get for alternating 5 V (= full scale = 2 x VREF = 2 x 2.5 V) and 0 V differential inputs are wildly incorrect. Here are 3 measurements for each input, taken sequentially about 300 ms apart.

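| Input | Raw code | Computed voltage |
|-------|----------|------------------|
| 5 V | 626072h | 3.842842 V |
| 5 V | 6260E4h | 3.842910 V |
| 5 V | 627FF7h | 3.847651 V |
| 0 V | FDFFAFh | -0.078173 V |
| 0 V | FDFDFCh | -0.078433 V |
| 0 V | FDFDFEh | -0.078431 V |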
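To put a number on how far off these readings are, here is a rough sketch that fits an offset and a gain to the six values above. It assumes the applied inputs really were exactly 0 V and 5 V, which I have not verified independently; the function name `offset_and_gain` is just something made up for this post.

```python
# Readings copied from the measurements above (volts).
FULL_SCALE_READINGS = [3.842842, 3.842910, 3.847651]  # nominal 5 V input
ZERO_READINGS = [-0.078173, -0.078433, -0.078431]     # nominal 0 V input


def offset_and_gain(zero, full, v_full=5.0):
    """Return (offset in volts, gain as a ratio) assuming ideal 0 V / v_full inputs."""
    offset = sum(zero) / len(zero)
    mean_full = sum(full) / len(full)
    gain = (mean_full - offset) / v_full
    return offset, gain


offset, gain = offset_and_gain(ZERO_READINGS, FULL_SCALE_READINGS)
print(f"offset = {offset * 1000:.1f} mV, gain = {gain:.4f}")
```

Under that assumption the numbers work out to an offset of roughly -78 mV and a gain of roughly 0.78, so the error at full scale is mostly a gain error rather than an offset error.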

If I instead perform a SYSOCAL and SYSGCAL with 0 V and 5 V inputs respectively, I get something a bit more respectable. In practice, though, I cannot perform such calibrations, as I have no way to provide reference signals without getting my hands dirty or redesigning the circuit board. I would have expected SELFCAL to be good enough to at least get measurements to within 100 mV.

My control flow is as follows:

    [COLD BOOT]
    WAKEUP
    set DRATE = 0x92 // 500SPS
    set ADCON = 0x00 // PGA = 1
    SELFCAL
    SYNC

    [MEASURE]
    while !DRDY, loop
    RDATA
    set b = read 3 bytes
    set result_integer = -((b[0] >> 7) * (1 << 23)) + ((b[0] & 0x7F) << 16) + (b[1] << 8) + b[2]
    set fullscale = 2 * 2.5 / 1 // 2 * VREF / PGA
    set voltage = fullscale * result_integer / ((1 << 23) - 1)
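To rule out the conversion arithmetic in the [MEASURE] step, here is a minimal Python sketch of the same calculation. It only covers the math on the 3 bytes returned after RDATA, not the SPI traffic; `code_to_volts` is a hypothetical helper name, and VREF = 2.5 V and PGA = 1 are taken from the setup described above.

```python
VREF = 2.5  # volts, as stated above
PGA = 1     # ADCON = 0x00


def code_to_volts(b, vref=VREF, pga=PGA):
    """Convert a 3-byte, big-endian, two's-complement result to volts."""
    # Same value as the manual sign handling in the pseudocode above.
    raw = int.from_bytes(b, "big", signed=True)
    full_scale = 2 * vref / pga  # 2 * VREF / PGA
    return full_scale * raw / ((1 << 23) - 1)


for code in ("626072", "FDFFAF"):
    print(f"{code}h : {code_to_volts(bytes.fromhex(code)):.6f} V")
```

Feeding in the raw codes from the measurements gives the same voltages I listed, so the byte-to-voltage conversion at least agrees with itself; the bad numbers look like they come out of the ADC that way.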

The ADCs are wired up something like this: