I am having an issue using the ADS1118 with a 3-wire PT1000 RTD in a Wheatstone bridge configuration. Please see figure 41 in the link below:

www.omega.com/.../rtd-measurement-and-theory.html

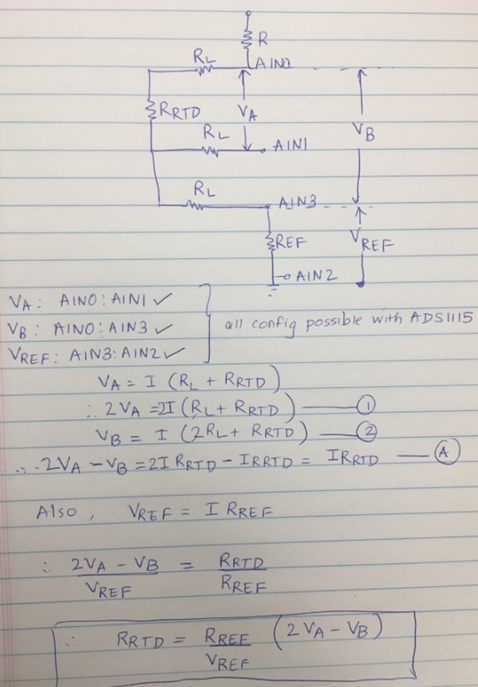

We are using AIN3 (pin 7) for the reference voltage, AIN2 (pin 6) for the RTD voltage, and AIN0 (pin 4) to read Vcc.

We have a few prototypes working perfectly and a few that read slightly but consistently off, for example 3 degrees low in both room-temperature and ice-bath tests. As mentioned, we are using PT1000 RTDs, which all test perfectly out of circuit, and 1 kΩ 0.02% resistors, which also test perfectly out of circuit (measured with a recently calibrated HP 34401A). If we replace the RTD with a 1 kΩ 0.02% resistor to replicate a stable 0 °C condition, the readings match up (very close to 0 V differential). Again, some units work great and some do not. So what can cause a perfectly good RTD (1 kΩ in ice water at 0 °C) to consistently appear a few ohms low in circuit, when a precision 1 kΩ resistor in its place under the same conditions reads 0 °C?
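For scale, here is a minimal sketch of the standard IEC 60751 resistance-temperature relation for platinum RTDs, which relates a temperature offset to an offset in ohms. It assumes a standard alpha = 0.00385 PT1000 element; the function name `rtd_resistance` and the constant names are illustrative only and are not taken from our firmware.

```python
# IEC 60751 coefficients for standard platinum RTDs (alpha = 0.00385).
A = 3.9083e-3
B = -5.775e-7
C = -4.183e-12   # only applies below 0 degC
R0 = 1000.0      # PT1000 nominal resistance at 0 degC, in ohms (assumed)


def rtd_resistance(t_c, r0=R0):
    """Return the RTD resistance in ohms at temperature t_c (degC)."""
    if t_c >= 0.0:
        return r0 * (1.0 + A * t_c + B * t_c**2)
    # Below 0 degC the standard adds a third-order correction term.
    return r0 * (1.0 + A * t_c + B * t_c**2 + C * (t_c - 100.0) * t_c**3)


# Resistance change that corresponds to reading 3 degC low at the ice point.
offset_ohms = rtd_resistance(0.0) - rtd_resistance(-3.0)

# Approximate sensitivity near 0 degC, in ohms per degC.
sensitivity = rtd_resistance(0.5) - rtd_resistance(-0.5)
```

With these coefficients the sensitivity near 0 °C is roughly 3.9 Ω per degree, so each ohm of apparent error is about a quarter of a degree, and a 3 °C offset corresponds to about 11.7 Ω.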

Please ask any questions you have; I am happy to clarify or go into more detail.