Hello Team,

We received the questions below from one of our customers about the ADC12J4000 device:

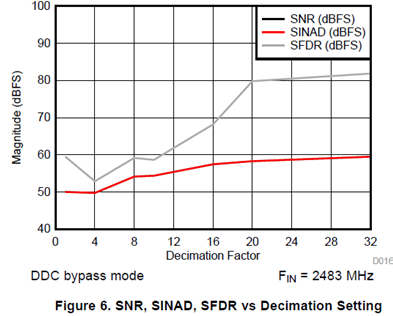

The following figure, from page 18 of the datasheet, plots SFDR versus the decimation factor. It shows that SFDR improves as the decimation factor increases.

I don’t understand why the SFDR depends on the decimation factor. Could you explain the reason for this improvement? Does it also hold for other input signal frequencies (between 1 GHz and 2 GHz at 4 GSPS)?

Supposing that the decimation is implemented in an FPGA (reproducing the same digital filters as the ADC), would we see the same trend? In other words, does this trend depend only on the decimation, or is there another reason?

Thank you in advance.

Kind Regards,

Mo.