For an ideal N-bit ADC with a full-scale sine input, SNR = 6.02*N + 1.76 dB. A real ADC's SNR is much lower than this. As the input frequency increases, the SNR becomes dominated by sampling-clock jitter, with the jitter-limited value SNR = -20*log10(2*pi*fin*t_jitter).
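A small sketch of the two formulas above, assuming a hypothetical 100 fs rms clock jitter (not a figure from either datasheet). It adds only quantization noise and jitter noise as independent powers and ignores thermal noise, so it is only meant to show the trend: as fin rises, the jitter term takes over.

```python
import math

def ideal_snr_db(n_bits: int) -> float:
    """Quantization-limited SNR of an ideal N-bit ADC for a full-scale sine."""
    return 6.02 * n_bits + 1.76

def jitter_snr_db(f_in_hz: float, t_jitter_s: float) -> float:
    """Jitter-limited SNR for a full-scale sine: -20*log10(2*pi*fin*t_jitter)."""
    return -20.0 * math.log10(2.0 * math.pi * f_in_hz * t_jitter_s)

def combined_snr_db(snr_a_db: float, snr_b_db: float) -> float:
    """Combine two independent noise sources by adding their noise powers."""
    noise = 10 ** (-snr_a_db / 10) + 10 ** (-snr_b_db / 10)
    return -10.0 * math.log10(noise)

if __name__ == "__main__":
    t_j = 100e-15  # hypothetical 100 fs rms clock jitter
    for f_in in (10e6, 100e6, 470e6, 1e9):
        q = ideal_snr_db(16)
        j = jitter_snr_db(f_in, t_j)
        print(f"{f_in / 1e6:6.0f} MHz: ideal {q:.1f} dB, "
              f"jitter {j:.1f} dB, combined {combined_snr_db(q, j):.1f} dB")
```

With these assumptions the jitter limit alone is about 84 dB at 100 MHz and about 71 dB at 470 MHz, far below the 98 dB ideal 16-bit figure.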

Below is the performance of two ADC chips:

ADS54J60:

fIN = 470 MHz, AIN = –1 dBFS, SNR = 66.5 dBFS, NSD = 153.5 dBFS/Hz

ADS5400:

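fIN = 600 MHz, AIN = –1 dBFS, SNR = 58.2 dBFS, so its NSD should be about 153.5 – (66.5 – 58.2) = 145.2 dBFS/Hz (this assumes both parts sample at the same rate, so the noise is spread over the same Nyquist bandwidth)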
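The NSD estimate above can be checked by spreading the noise evenly over the Nyquist band fs/2. This sketch assumes both parts run at 1 GSPS (an assumption to verify against the datasheets). It returns NSD as a negative dBFS/Hz value; the numbers in the post are the magnitudes.

```python
import math

def nsd_dbfs_per_hz(snr_dbfs: float, fs_hz: float) -> float:
    """Noise spectral density, assuming the noise is spread evenly over fs/2."""
    return -snr_dbfs - 10.0 * math.log10(fs_hz / 2.0)

FS = 1e9  # assumed sampling rate for both parts (check the datasheets)

if __name__ == "__main__":
    print(f"ADS54J60: {nsd_dbfs_per_hz(66.5, FS):.1f} dBFS/Hz")
    print(f"ADS5400:  {nsd_dbfs_per_hz(58.2, FS):.1f} dBFS/Hz")
```

At 1 GSPS, 10*log10(fs/2) is about 87.0 dB, giving roughly -153.5 and -145.2 dBFS/Hz, matching the figures above.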

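1. ADS54J60 is a 16-bit ADC and ADS5400 is a 12-bit ADC, but their SNRs differ by only about 8.3 dB (66.5 – 58.2), whereas 4 extra ideal bits would give 4*6.02 = 24.1 dB. Note also that the two SNRs are quoted at different input frequencies (470 MHz vs 600 MHz). Since the 16-bit ADC is much more expensive, it does not seem to offer a significant performance advantage. Why does N matter?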

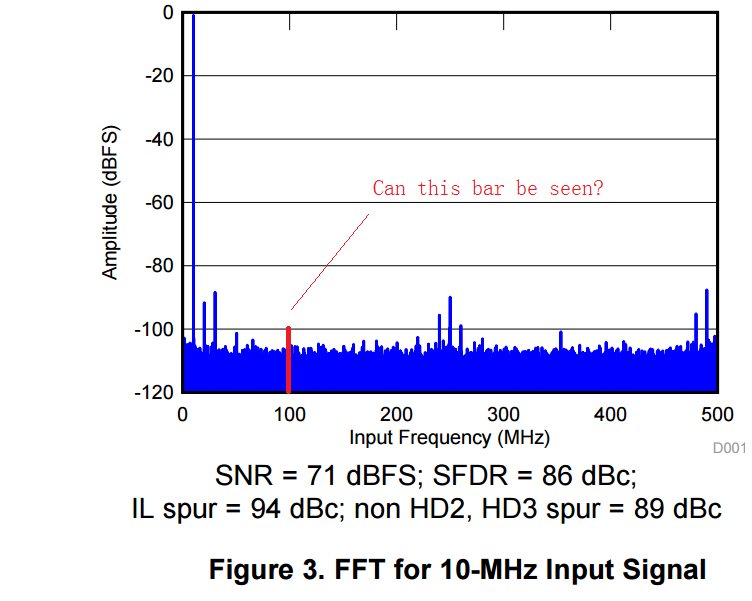
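One way to put the question 1 comparison in bits is to invert the ideal SNR formula into an effective number of bits. This sketch uses SNR rather than SINAD, so it ignores distortion. It also compares figures taken at different input frequencies, so treat the result as a rough indication only.

```python
def enob_from_snr(snr_db: float) -> float:
    """Effective number of bits implied by an SNR, inverting SNR = 6.02*N + 1.76."""
    return (snr_db - 1.76) / 6.02

PARTS = (("ADS54J60", 16, 66.5), ("ADS5400", 12, 58.2))

if __name__ == "__main__":
    for name, n_bits, snr in PARTS:
        print(f"{name}: nominal {n_bits} bits, "
              f"SNR-based ENOB ~ {enob_from_snr(snr):.2f} bits")
```

This gives roughly 10.75 bits for ADS54J60 and 9.38 bits for ADS5400 at the quoted operating points.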

2. Suppose an input signal is more than 6.02*16 = 96.32 dB below full scale but still above the noise floor implied by the NSD. For example, a 100 MHz input at –100 dBFS (or even lower, provided the FFT processing gain is high enough). Can ADS54J60 'receive' this input?

My concern is that a signal whose amplitude is below 1/2^16 of full scale (20*log10(1/2^16) ≈ –96.3 dBFS) should not trigger the ADC's encoder at all, right?
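The "FFT gain" part of question 2 can be estimated from the NSD alone. The noise landing in one FFT bin of width fs/M is NSD + 10*log10(fs/M). This sketch assumes 1 GSPS, a rectangular window (no ENBW correction), no spurs, and a hypothetical 10 dB detection margin. It only answers whether the tone would stand above the per-bin noise. It does not model how the quantizer responds to sub-LSB signals.

```python
import math

def bin_noise_dbfs(nsd_dbfs_hz: float, fs_hz: float, n_fft: int) -> float:
    """Noise power in one FFT bin of width fs/n_fft (rectangular window assumed)."""
    return nsd_dbfs_hz + 10.0 * math.log10(fs_hz / n_fft)

def min_fft_length(signal_dbfs: float, nsd_dbfs_hz: float, fs_hz: float,
                   margin_db: float = 10.0) -> int:
    """Smallest power-of-two FFT length whose per-bin noise is margin_db below the tone."""
    n = 2
    while signal_dbfs - bin_noise_dbfs(nsd_dbfs_hz, fs_hz, n) < margin_db:
        n *= 2  # each doubling lowers the per-bin noise by about 3 dB
    return n

if __name__ == "__main__":
    FS = 1e9      # assumed 1 GSPS
    NSD = -153.5  # ADS54J60 NSD from the post, as a negative dBFS/Hz value
    print(f"{bin_noise_dbfs(NSD, FS, 8192):.1f}")  # per-bin noise for an 8192-point FFT
    print(min_fft_length(-100.0, NSD, FS))  # → 65536
```

Under these assumptions an 8192-point FFT puts the per-bin noise near -102.6 dBFS, and a 65536-point FFT is the first power of two that leaves a -100 dBFS tone at least 10 dB above it.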