Other Parts Discussed in Thread: DDC264

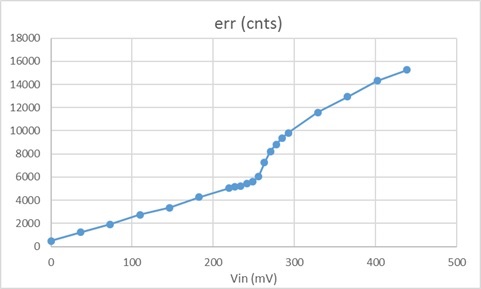

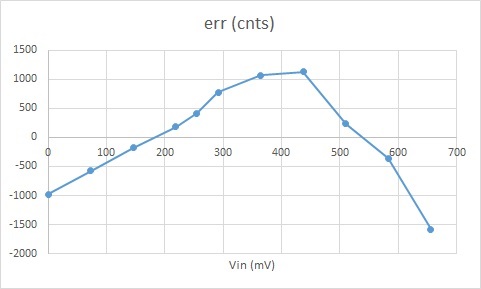

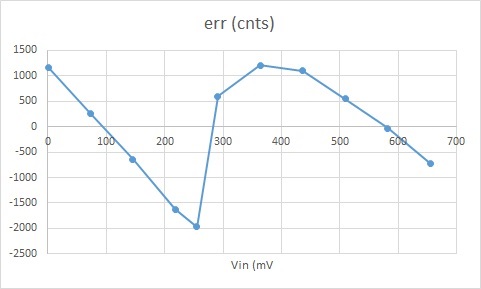

DDC118 linearity issue: as shown in the test data plot (Fig. 1), the DDC118 measurement error changes abruptly partway through the input signal range.

The test used the circuit shown in Fig. 2. A constant current source, Ic (7.31 uA), drives the resistor RT, so the buffer op-amp output voltage is Ic*RT. The 10 Mohm resistor converts that voltage into a current that feeds one of the DDC118 inputs. For each RT value, the DDC118 counts were logged, converted to a measured resistance, and compared with the true RT value to calculate the measurement error, which is the vertical axis of the plot. The DDC118 settings are: range 111, integration time 5 ms. With these settings, the linearity change occurs at around 400,000 ADC counts (RT = 35 kohm to 40 kohm).
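For anyone who wants to check the numbers, here is a rough Python sketch of the conversion chain described above (RT to voltage to input current to integrated charge to counts, and back). It is only an idealized model, not the exact script used for the plot. The names are hypothetical, and two values are assumptions not stated in this post: a full-scale charge of 350 pC for range 111 and a 20-bit output code span. The sketch also ignores any zero-input offset in the output code and treats the DDC118 input as a virtual ground.

```python
# Idealized model of the Fig. 2 test circuit and DDC118 conversion.
# Values from the post:
IC_A = 7.31e-6          # constant current source Ic, in amps
R_SERIES_OHM = 10e6     # 10 Mohm voltage-to-current resistor
T_INT_S = 5e-3          # integration time, 5 ms

# ASSUMPTIONS (not stated in the post, check against the datasheet):
FULL_SCALE_C = 350e-12      # assumed full-scale charge for range 111
FULL_SCALE_COUNTS = 2 ** 20 # assumed 20-bit output code span


def expected_counts(rt_ohm):
    """Ideal ADC counts for a given RT, ignoring offset and noise."""
    v_buffer = IC_A * rt_ohm              # buffer output voltage, Ic*RT
    i_in = v_buffer / R_SERIES_OHM        # current into the DDC118 input
    charge = i_in * T_INT_S               # charge integrated in one period
    return charge / FULL_SCALE_C * FULL_SCALE_COUNTS


def measured_resistance(counts):
    """Invert the chain: convert logged counts back to a resistance."""
    charge = counts / FULL_SCALE_COUNTS * FULL_SCALE_C
    i_in = charge / T_INT_S
    return i_in * R_SERIES_OHM / IC_A


def error_percent(counts, rt_true_ohm):
    """Measurement error relative to the true RT, in percent."""
    return (measured_resistance(counts) - rt_true_ohm) / rt_true_ohm * 100.0


if __name__ == "__main__":
    for rt in (35e3, 40e3):
        print(f"RT = {rt / 1e3:.0f} kohm -> {expected_counts(rt):.0f} counts")
```

Under those assumptions, RT = 35 kohm to 40 kohm maps to roughly 380,000 to 440,000 counts, which is consistent with the transition seen at around 400,000 counts.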