We are trying to timestamp all of our periodic Tasks, Swis, Hwis, and our zero-latency interrupt, but we see fluctuation when optimization is turned on. My first test of this logic, on a Swi in our codebase, shows a period of 1 ms ± 1 µs with optimization off but 1 ms ± 14 µs with optimization on. I also set some GPIOs directly after taking the timestamp, and on an oscilloscope those GPIOs show ± 1 µs both with and without optimization. This suggests that the optimizer is doing something to the timestamp logic. Perhaps it is moving the timer read later in the function? Note that removing the GPIO set/clear actions does not remove the issue. We'd like the measured period to be as close to the 'real' period as possible without interfering with the actual work being done.
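To put a number on "± 14 µs" directly, the thread could keep a running minimum and maximum of the measured period instead of reading it off a plot. A minimal sketch, assuming the per-call deltas are already in raw timer ticks and that the timer is clocked at a hypothetical 100 MHz (`TIMER_HZ`; adjust to the real clock). `PeriodStats`, `periodStatsAdd`, and `ticksToNs` are illustrative names, not part of SYS/BIOS or driverlib:

```c
#include <stdint.h>

/* Hypothetical timer input clock (assumption); replace with the real CPU Timer 2 clock. */
#define TIMER_HZ 100000000ULL

/* Running min/max of the measured period, in raw timer ticks. */
typedef struct {
    uint32_t minTicks;
    uint32_t maxTicks;
    uint32_t count;
} PeriodStats;

void periodStatsInit(PeriodStats *s)
{
    s->minTicks = UINT32_MAX;
    s->maxTicks = 0u;
    s->count = 0u;
}

/* Record one measured period: two compares, cheap enough for the timed thread. */
void periodStatsAdd(PeriodStats *s, uint32_t ticks)
{
    if (ticks < s->minTicks) {
        s->minTicks = ticks;
    }
    if (ticks > s->maxTicks) {
        s->maxTicks = ticks;
    }
    s->count++;
}

/* Ticks to nanoseconds using a 64-bit intermediate, so no 32-bit tick count
 * overflows. 64-bit division may be slow on the target, so call this from a
 * background context rather than from the thread being timed. */
uint64_t ticksToNs(uint32_t ticks)
{
    return ((uint64_t)ticks * 1000000000ULL) / TIMER_HZ;
}
```

For example, feeding in the made-up deltas 100000, 98600, and 101400 ticks gives a minimum of 986000 ns and a maximum of 1014000 ns at the assumed 100 MHz, i.e. 1 ms ± 14 µs.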

The timestamp module runs off CPU Timer 2 using the full timer period (0xFFFFFFFF). Our zero-latency interrupt takes about 10 µs, so it could easily account for the difference if it preempts a thread before that thread reads the timer. Mostly, I'd like to make sure the timestamp is taken first thing in each of our threads.
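Because CPU Timer 2 counts down and reloads from a period of 0xFFFFFFFF, the counter steps through all 2^32 values, so ordinary unsigned subtraction already handles the wrap through zero. A minimal sketch; `downCounterDelta` is a hypothetical helper, and the result is only valid when less than one full timer period (2^32 ticks) separates the two reads:

```c
#include <stdint.h>

/*
 * Elapsed ticks between two reads of a down-counting 32-bit timer whose
 * period register is 0xFFFFFFFF. The counter visits all 2^32 values, so
 * modulo-2^32 unsigned subtraction is correct even if it wrapped once.
 */
uint32_t downCounterDelta(uint32_t previous, uint32_t current)
{
    /* Counting down: the earlier read holds the larger value (mod 2^32). */
    return previous - current;
}
```

This is algebraically the same as the pair of values passed to `saveDelta()` in the Swi example: `(0xFFFFFFFF - current) - (0xFFFFFFFF - previous)` reduces to `previous - current`, so the reload value cancels out.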

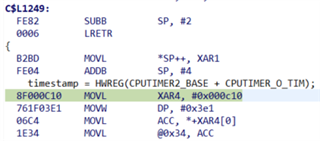

Here is an example of what I'm trying to do:

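```c
#include <stdint.h>
#include "driverlib.h"  // C2000Ware driverlib: HWREG(), CPUTIMER2_BASE, CPUTIMER_O_TIM

static volatile uint32_t lastTimestamp = 0;
static volatile uint32_t timestamp = 0;

void swiFunction(void)
{
    // Read the raw CPU Timer 2 count first thing (the timer counts down)
    timestamp = HWREG(CPUTIMER2_BASE + CPUTIMER_O_TIM);

    // ... rest of the Swi's work ...

    // save the delta between timestamps (CPUTIMER_O_PRD, period = 0xFFFFFFFF)
    // saveDelta() is defined elsewhere in our codebase
    saveDelta(0xFFFFFFFFU - timestamp, 0xFFFFFFFFU - lastTimestamp);

    lastTimestamp = timestamp;
}
```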
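One way to keep the timer read as the very first thing a thread does, while keeping the measurement itself cheap, is to copy only the raw count into a small ring buffer and do the subtraction and `saveDelta()`-style bookkeeping later, e.g. in an Idle function. A minimal sketch, assuming a single producer (the thread being timed) and a single consumer in a lower-priority context on a single core; `TsRing`, `tsRingPush`, and `tsRingPop` are hypothetical names:

```c
#include <stdbool.h>
#include <stdint.h>

#define TS_RING_SIZE 16u  /* must be a power of two */

typedef struct {
    volatile uint32_t buf[TS_RING_SIZE];
    volatile uint32_t head;     /* written only by the producer (timed thread) */
    volatile uint32_t tail;     /* written only by the consumer (e.g. Idle) */
    volatile uint32_t dropped;  /* samples lost because the ring was full */
} TsRing;

/* Producer side: call first thing in the Swi/Hwi with the raw timer count. */
void tsRingPush(TsRing *r, uint32_t rawCount)
{
    uint32_t head = r->head;
    if (head - r->tail >= TS_RING_SIZE) {
        r->dropped++;  /* full: drop the sample rather than block the thread */
        return;
    }
    r->buf[head & (TS_RING_SIZE - 1u)] = rawCount;
    r->head = head + 1u;  /* publish the index only after the data is stored */
}

/* Consumer side: returns false when the ring is empty. */
bool tsRingPop(TsRing *r, uint32_t *rawCount)
{
    uint32_t tail = r->tail;
    if (tail == r->head) {
        return false;
    }
    *rawCount = r->buf[tail & (TS_RING_SIZE - 1u)];
    r->tail = tail + 1u;
    return true;
}
```

On the target, the Swi would start with something like `tsRingPush(&ring, HWREG(CPUTIMER2_BASE + CPUTIMER_O_TIM));`, and the Idle function would pop consecutive samples and compute `previous - current` from them. The head and tail indices run freely and are masked on access, which works because the ring size divides 2^32.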