Hello,

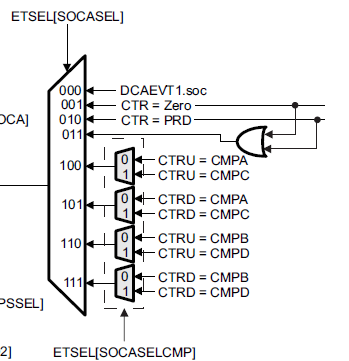

I am developing a dual-CPU power converter. One converter runs at 1 MHz and the other at 200-400 kHz, and each converter's control interrupt is triggered by its ADC SOCs.

While debugging with the emulator, I have found that the priorities I assign to the interrupts change the behaviour of the code dramatically.

I had originally planned to use the ADCA1, ADCC1 and TIMER0 interrupts, plus IPC0/IPC1 on each CPU so the two CPUs can communicate.

I found that if I use ADCA1 (1 MHz) and ADCC1 (200-400 kHz), TIMER0 and IPC0/IPC1 are never serviced.

I then switched from ADCC1 to ADCC2 (in PIE group 10), and TIMER0 now executes as expected. ADCC2 also executes, but only about 100 times, after which it is never executed again.

There is clearly some kind of conflict between the ISRs: the TIMER0/ADCA1 flag is always set again before it has been cleared. (I believe I am using continuous interrupt mode, because I use the CLA and the digital controller library to read the error and calculate new duty cycles and periods.)

Is it simply that the code in my 1 MHz control loop takes too long to execute? Because that loop has the highest priority, a new ADC flag would already be set by the time it finishes, so it immediately begins executing again.
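As a rough sanity check, the cycle budget per control period is SYSCLK divided by the loop frequency. The sketch below is hypothetical (the helper names are made up, not from any TI library) and assumes a 200 MHz SYSCLK, which is not stated above. Under that assumption the 1 MHz loop has 200 cycles per period and the 200-400 kHz loop has 1000-500. `isr_cycles` has to come from a measurement of the real ISR, including interrupt entry/exit overhead.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helpers, not part of any TI library.
 * sysclk_hz is an ASSUMPTION (e.g. 200 MHz); use the actual SYSCLK. */

/* CPU cycles available between two consecutive control-loop triggers. */
static uint32_t cycles_per_period(uint32_t sysclk_hz, uint32_t loop_hz)
{
    assert(loop_hz > 0 && loop_hz <= sysclk_hz);
    return sysclk_hz / loop_hz;
}

/* Percentage of one CPU consumed by a periodic ISR that takes isr_cycles
 * (measured, including interrupt entry/exit and context save/restore).
 * At or above 100% there is no time left for lower-priority interrupts. */
static double cpu_load_percent(uint32_t sysclk_hz, uint32_t loop_hz,
                               uint32_t isr_cycles)
{
    return 100.0 * (double)isr_cycles /
           (double)cycles_per_period(sysclk_hz, loop_hz);
}
```

For example, with a 200 MHz clock, `cycles_per_period(200000000, 1000000)` is 200, so an ISR measured at 100 cycles would load that CPU to 50%.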

Are there any solutions to this? IPC0/IPC1 are not executing at all: the IPCSTS[IPC1] flag is constantly set, showing that an interrupt has been requested, but the CPU never services it.
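To picture how a lower-priority flag can stay pending forever, here is a simplified, hypothetical cycle-level model in plain C (not TI driverlib or PIE register code). It assumes non-nested interrupts that run to completion, and that array index 0 is the highest priority, standing in for ADCA1 being ahead of TIMER0 and IPC0/IPC1. The periods and ISR costs used with it are made-up numbers, not measurements.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of fixed-priority, non-nested interrupt servicing.
 * Index 0 has the highest priority. Time is counted in CPU cycles. */
#define NUM_SRC 3

typedef struct {
    uint32_t period;   /* cycles between trigger events (must be > 0) */
    uint32_t isr_cost; /* cycles the ISR takes, entry to exit */
    bool     pending;  /* interrupt flag: set by trigger, cleared on entry */
    uint32_t serviced; /* number of times the ISR has run */
} Source;

/* Run the model for `total` cycles, updating each source's counters. */
static void simulate(Source src[NUM_SRC], uint32_t total)
{
    uint32_t busy_until = 0; /* CPU is inside an ISR until this cycle */

    for (uint32_t t = 0; t < total; ++t) {
        /* Triggers fire regardless of what the CPU is doing. */
        for (int i = 0; i < NUM_SRC; ++i)
            if (t % src[i].period == 0)
                src[i].pending = true;

        if (t < busy_until)
            continue; /* no preemption: wait for the current ISR */

        /* Service the highest-priority pending flag, if any. */
        for (int i = 0; i < NUM_SRC; ++i) {
            if (src[i].pending) {
                src[i].pending = false;
                src[i].serviced++;
                busy_until = t + src[i].isr_cost;
                break;
            }
        }
    }
}
```

With a top-priority source of period 200 cycles and ISR cost 210 cycles, its flag is always set again by the time its ISR ends, so the other two sources are never serviced; dropping its cost to 150 cycles lets them run in the gaps.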

EDIT: I changed the ADCs from continuous mode so that the interrupt flags must be cleared manually. I then checked ADCAINT1OVF and ADCCINT2OVF, and both are set to 1. This makes me believe my suspicion is correct: at this PWM frequency, a new interrupt is generated before the previous one has been handled. Is there any way to work around this? Is it simply a matter of the control loop being too fast, or of the code I have written being too slow to finish before the next PWM cycle comes along?
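The overflow flags match a simple picture of non-continuous mode: a new ADC interrupt event that arrives while the flag is still set raises the overflow bit instead of producing a new interrupt. The sketch below is a hypothetical, single-source illustration; it ignores all other interrupts and assumes the ISR starts the moment the flag is set and clears the flag as its last action. The function name and the numbers are made up.

```c
#include <stdint.h>

/* Hypothetical single-source model of an ADC interrupt in non-continuous
 * mode. The ISR is assumed to start the moment the flag is set and to clear
 * the flag as its last action, isr_cycles later. A trigger that arrives
 * while the flag is still set is counted as an overflow and otherwise lost.
 * A trigger landing exactly on the clear cycle is treated as on time. */
static uint32_t count_overflows(uint32_t period, uint32_t isr_cycles,
                                uint32_t n_triggers)
{
    uint32_t overflows = 0;
    uint64_t clear_at = 0; /* cycle at which the flag is next cleared */

    for (uint32_t k = 0; k < n_triggers; ++k) {
        uint64_t t = (uint64_t)k * period; /* k-th PWM/SOC trigger */
        if (t < clear_at) {
            overflows++; /* flag still set: overflow, no new interrupt */
            continue;
        }
        clear_at = t + isr_cycles; /* flag set; ISR runs and clears it */
    }
    return overflows;
}
```

With a 200-cycle period, a 150-cycle ISR never overflows, while a 210-cycle ISR overflows on every second trigger, so in this model the loop effectively runs at half its intended rate.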

Any help appreciated.

Best regards,

Joel