Hi Champs,

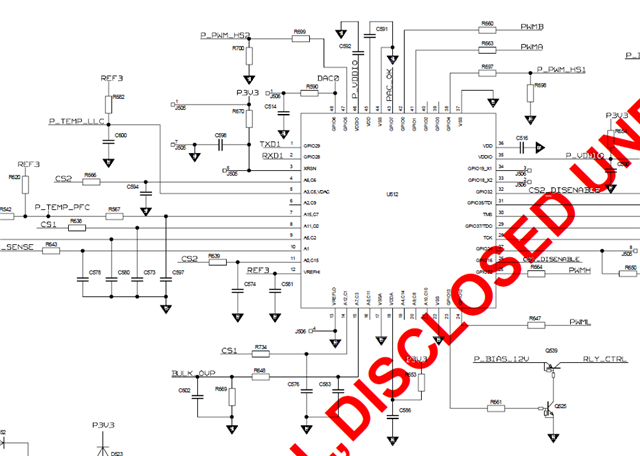

The customer is migrating their digital power supply platform from NXP DSPs to the F280023 (PFC) and F280025 (LLC).

The firmware is almost complete, and the unit has passed nearly all DVT functional tests, but they are having a problem with the surge test.

The primary-side DSP resets during the 2.2 kV differential-mode surge test.

- The main board is the same one used with the old NXP platform, which passed the surge tests. The control board has been redesigned, and the auxiliary power supply is now located on the control board.

- They use:
  - A 4.7 kΩ (4K7) pull-up on the XRSn pin with a 10 nF bypass capacitor. Increasing the bypass capacitor to 100 nF did not help.
  - A 2.2 µF capacitor on each VDDIO pin.
  - A 10 µF capacitor on each VDD pin.
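For reference, if the external pull-up and bypass capacitor on the reset pin (XRSn) are treated as a simple first-order RC (an assumption that ignores any on-chip circuitry on the pin and the impedance of whatever drives it low), the time constants for the two capacitor values tried work out to:

$$
\tau = R_{\text{pu}} C_{\text{byp}}, \qquad
\tau_{10\,\text{nF}} = 4.7\,\text{k}\Omega \times 10\,\text{nF} = 47\,\mu\text{s}, \qquad
\tau_{100\,\text{nF}} = 4.7\,\text{k}\Omega \times 100\,\text{nF} = 470\,\mu\text{s}
$$

In other words, the 100 nF change made the reset-line RC ten times slower, yet the reset still occurred.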

Please share any suggestions on how to debug and resolve this kind of issue.

Thanks

Tamas