Hi,

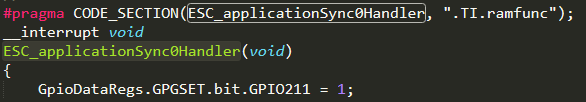

The attached picture shows a histogram of the delay between the arrival of the interrupt trigger (specifically an ECAT SYNC0 signal, routed through the OUTPUT XBAR to a GPIO) and the first instruction executed inside ESC_applicationSync0Handler (which sets a GPIO).

My question is about the large jitter of about 500 ns (roughly 100 instruction cycles @ 200 MHz): can it be considered normal? My problem is not the delay itself but the fact that it is not constant.
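
For reference, this is how I converted the jitter into cycles. It is only a small sketch: the function name `jitter_ns_to_cycles` is made up for this post, and it assumes the CPU clock is exactly 200 MHz and that one instruction takes about one cycle.

```c
#include <stdint.h>

/* Hypothetical helper (not part of any SDK): converts a time span in
 * nanoseconds into CPU clock cycles for a clock given in MHz.
 * cycles = ns * MHz / 1000, using 64-bit math to avoid overflow. */
static uint32_t jitter_ns_to_cycles(uint32_t jitter_ns, uint32_t cpu_mhz)
{
    return (uint32_t)(((uint64_t)jitter_ns * cpu_mhz) / 1000u);
}
```

Calling `jitter_ns_to_cycles(500, 200)` gives the 100 cycles quoted above.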

Please note that this is the only active interrupt and that the code is executing from RAM.