Part Number: TMS320F28035

Other Parts Discussed in Thread: OPA320

Looking at the F2803x datasheet, I'm trying to determine two things:

- What strategies can be deployed for ADC calibration, and what steps are needed to implement each of them?

  - self-recalibration

  - offset calibration (if self-recalibration is occurring, is there anything else that can improve offset?)

  - two-point gain calibration

  - temperature compensation

  - etc.

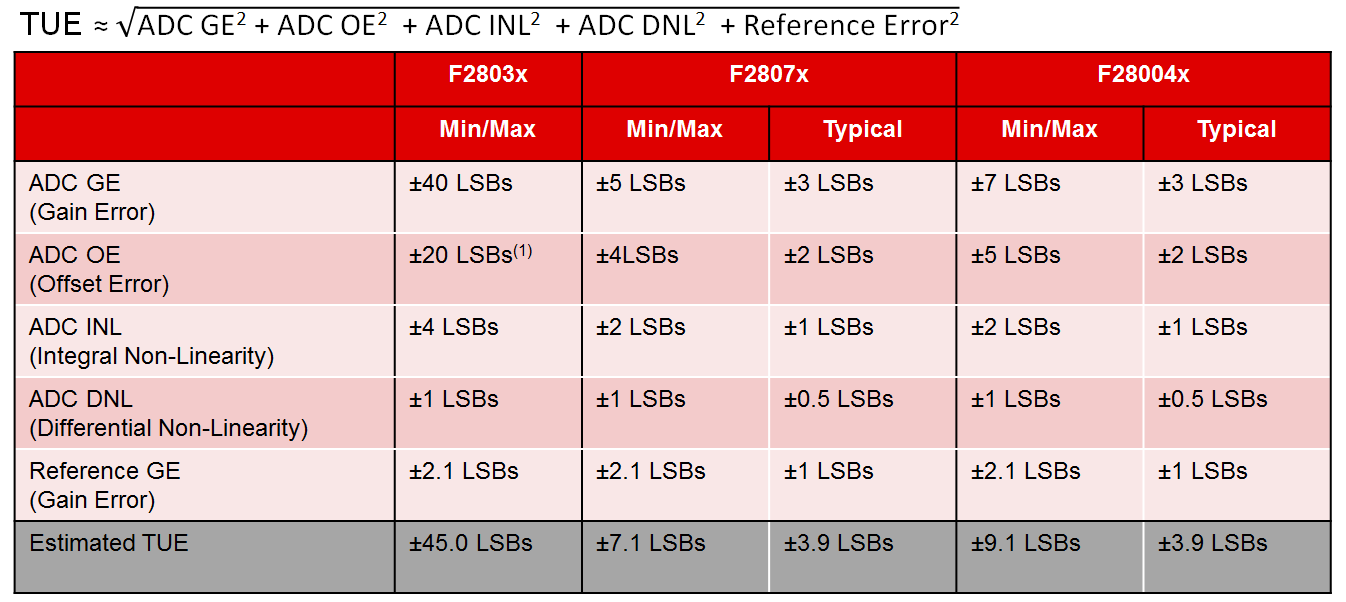

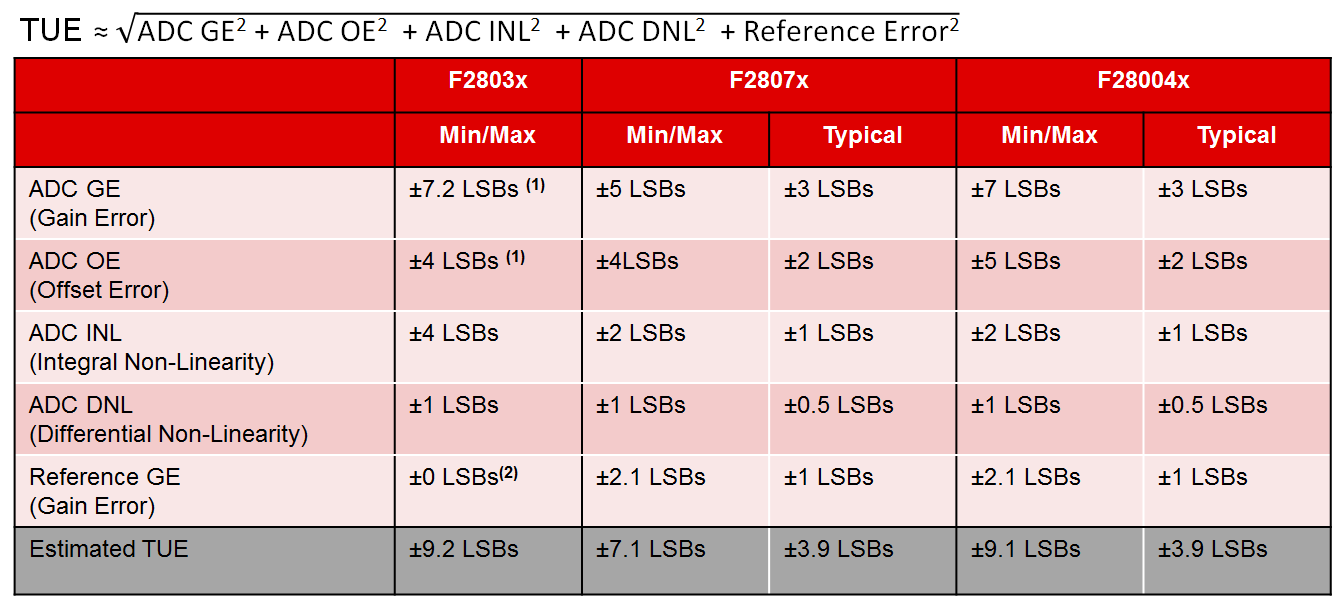

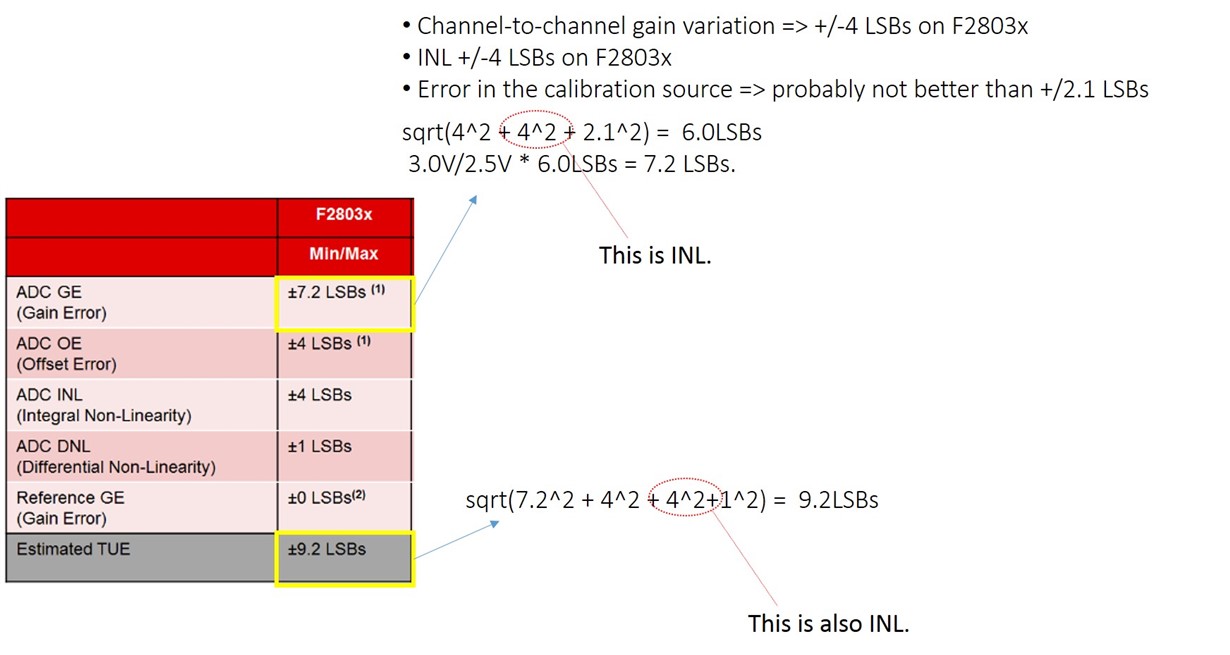

- After all the necessary ADC calibration has been applied, what is the resulting total unadjusted error?

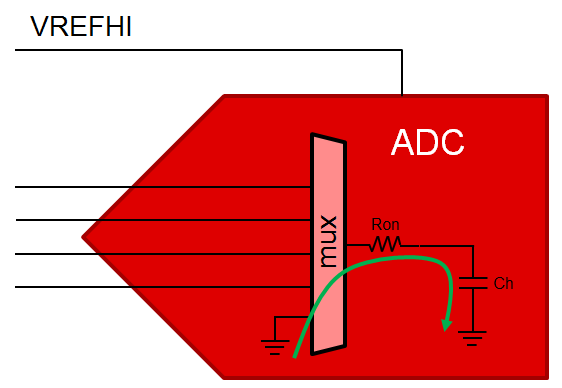

Let's assume that periodic self-recalibration occurs. It appears as though INL and DNL are not additive, which would leave INL as the worse of the two at 4 counts. What I don't understand is which other errors are additive:

- Channel-to-Channel offset and gain variation does not appear to be an absolute error added to the total unadjusted error of a given channel.

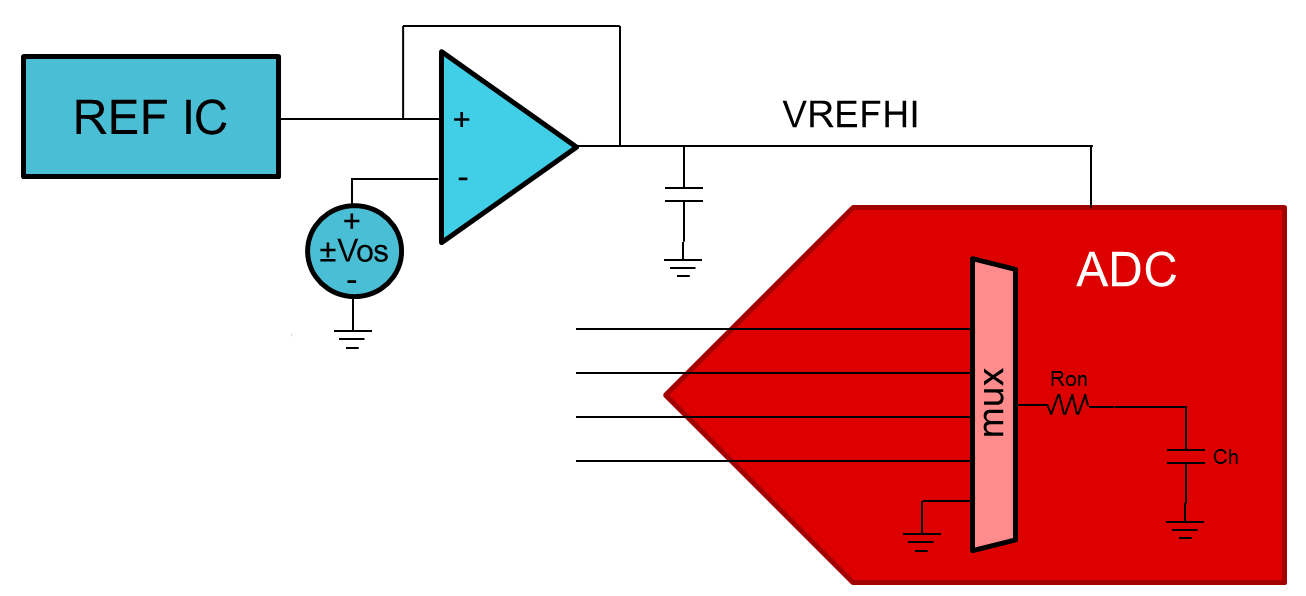

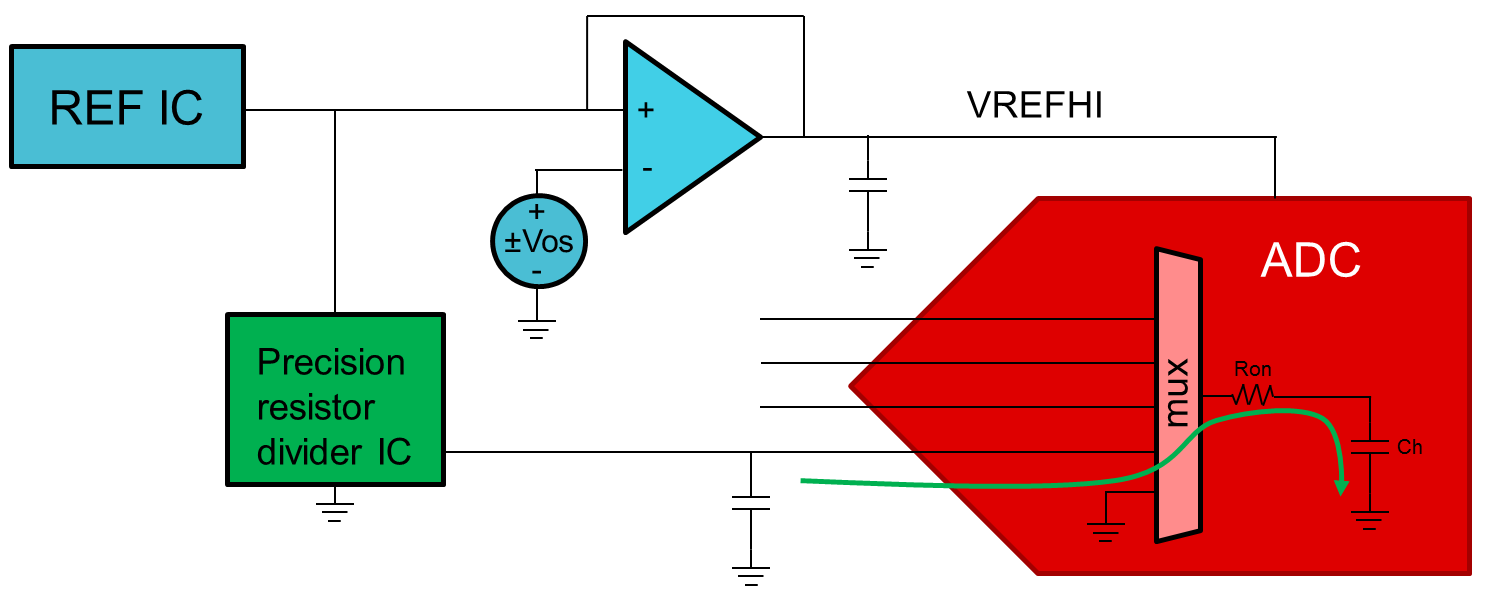

- Gain error appears to be additive. After a two-point gain calibration, the amount it adds to the total unadjusted error would depend on the accuracy of the calibration references.

- If gain calibration happens on only one channel, does the channel-to-channel variation come back into play?

- The temperature coefficient would appear to be additive to the total unadjusted error. It could to some extent be compensated for with a temperature measurement.

- How much of the temperature coefficient is accounted for in the periodic self-recalibration?

In summary, I'm trying to understand what goes into determining the absolute worst-case total unadjusted error, and what strategies can be deployed to minimize it.

Thanks,

Stuart