My customer uses the 28335 in their motor control products. Recently, during production testing, they found that some DSPs return wrong ADC results. For example, the normal value is 10, but when the problem occurs, the value is 1000.

Their product has been in production for many years without any similar problem, and they have not changed the HW or SW. So they suspect that the problem may be caused by this batch of DSPs. They ran some tests, and the most convincing one is this: when a “problem” DSP is moved to a known-good board, the problem can be reproduced on that board.

I don't think we can conclude from this test alone that the problem is caused by the DSP batch, so can you suggest other test methods to locate the problem?

I have done some tests with their engineers over the past few days. The testing process and results are as follows:

-

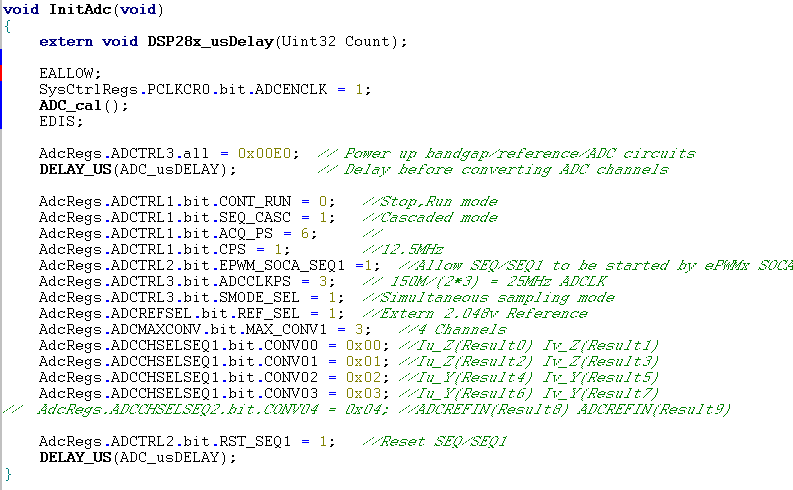

We modified part of the ADC initialization code. The code they used before is as follows:

AdcRegs.ADCTRL3.all = 0xE0;

DELAY_US(ADC_usDELAY);

We changed it to the following:

AdcRegs.ADCTRL3.bit.ADCBGRFDN = 0x3;

DELAY_US(1000);

AdcRegs.ADCTRL3.bit.ADCPWDN = 1;

DELAY_US(1000);

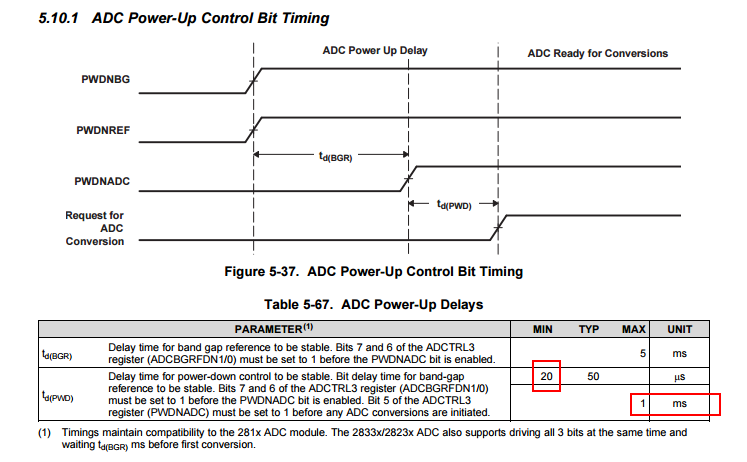

We found that the longer the delay, the less often the ADC problem occurs.
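One way to put a number on "less often" when comparing delay settings is to count the samples that fall outside a tolerance band around the expected value. The following is only a minimal sketch: `count_outliers` and its `tolerance` parameter are hypothetical names used for illustration and are not part of the customer's test code.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: counts samples that differ from the expected
 * ADC result by more than the given tolerance. */
static size_t count_outliers(const uint16_t *samples, size_t n,
                             uint16_t expected, uint16_t tolerance)
{
    size_t bad = 0;
    for (size_t i = 0; i < n; ++i) {
        /* Absolute difference without relying on signed wraparound. */
        uint16_t diff = (samples[i] > expected)
                            ? (uint16_t)(samples[i] - expected)
                            : (uint16_t)(expected - samples[i]);
        if (diff > tolerance) {
            ++bad;
        }
    }
    return bad;
}
```

With an expected value of 10 and a tolerance of 5, a reading of 1000 is counted as a failure while readings of 9 or 11 are not.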

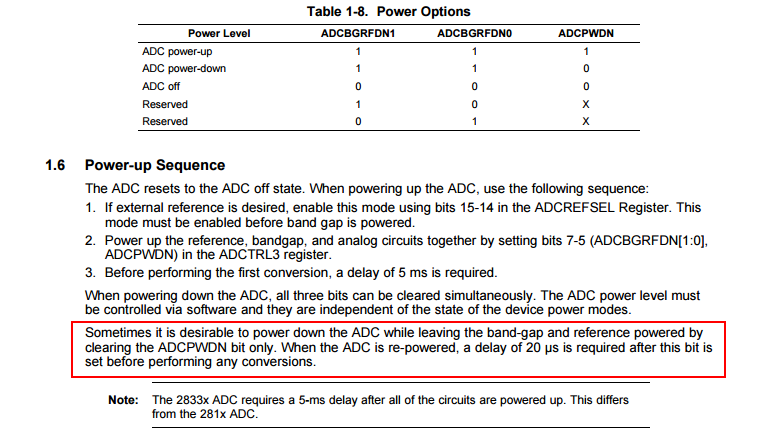

My question is: what is the difference between the two initialization methods? I found that most examples in ControlSUITE use the first method.
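For reference, the two versions can be compared at the bit level. The sketch below is a host-side helper, not device code. `Adctrl3Fields` and `decode_adctrl3` are hypothetical names, and the bit positions are an assumption taken from the F2833x ADCTRL3 register description (bits 7-6 ADCBGRFDN, bit 5 ADCPWDN, bits 4-1 ADCCLKPS, bit 0 SMODE_SEL).

```c
#include <stdint.h>

/* Assumed ADCTRL3 layout (F2833x): bits 7-6 ADCBGRFDN, bit 5 ADCPWDN,
 * bits 4-1 ADCCLKPS, bit 0 SMODE_SEL. Hypothetical host-side helper. */
typedef struct {
    unsigned adcbgrfdn;  /* bandgap and reference power bits */
    unsigned adcpwdn;    /* analog core power bit */
    unsigned adcclkps;   /* ADC clock prescaler */
    unsigned smode_sel;  /* sampling mode select */
} Adctrl3Fields;

static Adctrl3Fields decode_adctrl3(uint16_t value)
{
    Adctrl3Fields f;
    f.adcbgrfdn = (value >> 6) & 0x3u;
    f.adcpwdn   = (value >> 5) & 0x1u;
    f.adcclkps  = (value >> 1) & 0xFu;
    f.smode_sel = value & 0x1u;
    return f;
}
```

Under that assumption, writing 0xE0 to `.all` sets ADCBGRFDN = 3 and ADCPWDN = 1 in a single write and also writes 0 to ADCCLKPS and SMODE_SEL. The modified code sets the same power bits in two steps, with a delay between them, and leaves the other fields unchanged.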

What are the requirements before ADC initialization? For example, how long should we wait before starting the initialization?

-

When we select different optimization levels in the compiler's Opt Level option, the number of times the problem occurs changes.

What is the difference between the Opt Level settings, and how should we choose the best one?