Dear Sir/Madam,

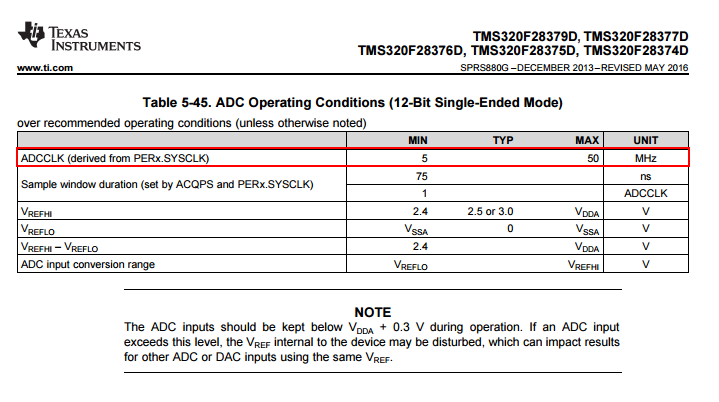

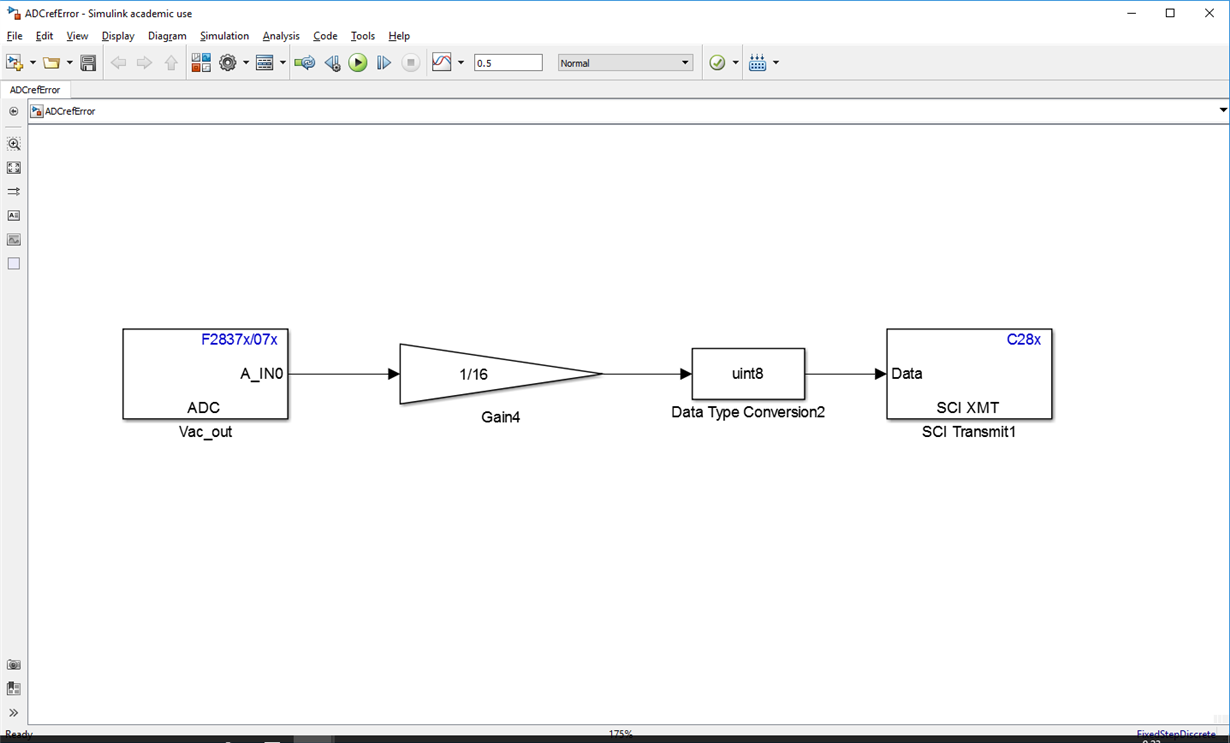

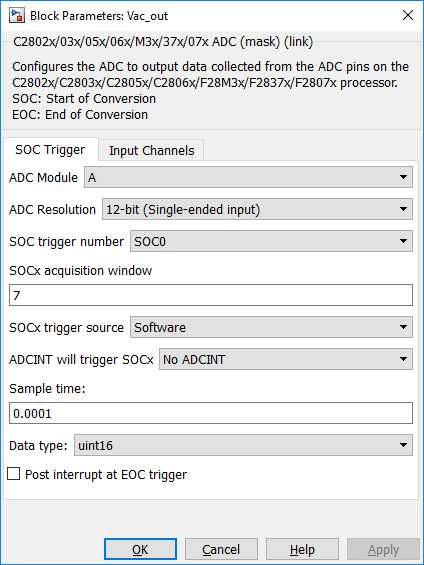

I am using a Delfino TMS320F28377D controlCARD R1.1 to read a constant voltage at one of the ADC inputs. I have noticed that the reading "saturates" at 2.5 V instead of 3 V. The infosheet TMDSCNCD28377D-Infosheet_v1_5.pdf clearly states that for my board version (R1.1), with SW2 in the up position and SW3 in the left position, I should get Vrefhi = 3 V if R42 is populated and R43 is unpopulated. That is how R42 and R43 are fitted on my board. I have measured the output of the regulator (U13) at R42 and it is correct (3 V), and its input is 3.3 V, as it should be.

I have also checked the ADC response with SW3 in the right position, which is supposed to give a Vrefhi of 3.3 V, but I still get the same response: in both positions of SW3 the ADC saturates at approximately 2.5 V. The ADC seems to work properly up to 2.5 V, because I have applied voltages between 0 and 2.5 V at the ADC input and my code reads proportional values. Any ideas? I really need to read up to 3 V, or even 3.3 V, so that I can use my analog sensors, which output 0-3 V.
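For reference, the relationship between input voltage and ADC result that the description above relies on can be sketched as follows. This is only an illustrative sketch, not the code used in the post: it assumes the ADC runs in 12-bit single-ended mode with VREFLO at 0 V, and the function names `adc_counts_to_volts` and `volts_to_expected_counts` are hypothetical helpers, not part of any TI library.

```c
#include <stdint.h>

/* Assumption: 12-bit mode, so the transfer function divides by 4096
 * and the result register clamps at 4095. */
#define ADC_STEPS     4096.0f
#define ADC_MAX_CODE  4095u

/* Convert a raw ADC result to volts for the given reference voltages. */
static float adc_counts_to_volts(uint16_t counts, float vrefhi, float vreflo)
{
    return vreflo + (vrefhi - vreflo) * (float)counts / ADC_STEPS;
}

/* Ideal raw result expected for an input voltage, clamped to the
 * valid code range 0..4095. */
static uint16_t volts_to_expected_counts(float vin, float vrefhi, float vreflo)
{
    float code;

    if (vin <= vreflo) {
        return 0u;
    }
    code = (vin - vreflo) / (vrefhi - vreflo) * ADC_STEPS;
    if (code > (float)ADC_MAX_CODE) {
        return (uint16_t)ADC_MAX_CODE;
    }
    return (uint16_t)code;  /* truncate toward zero */
}
```

Under these assumptions, a 2.5 V input with Vrefhi = 3 V should give a raw result of roughly 3413, not the full-scale 4095. Checking which raw code the ADC actually reports when it saturates may therefore help tell apart a reference that is effectively 2.5 V (raw result at full scale) from an input signal that is being limited before it reaches the ADC pin (raw result well below full scale).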

Thank you for your time,

Panagis Vovos

Lecturer

University of Patras, Greece