
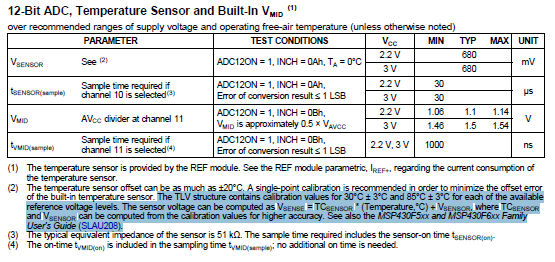

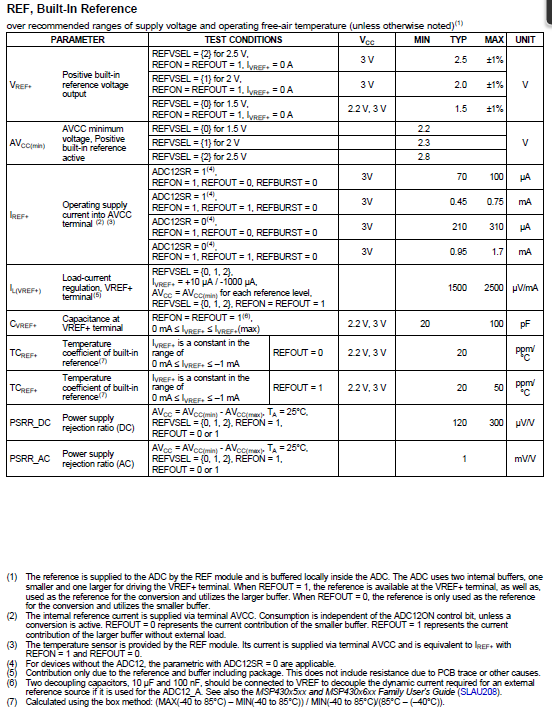

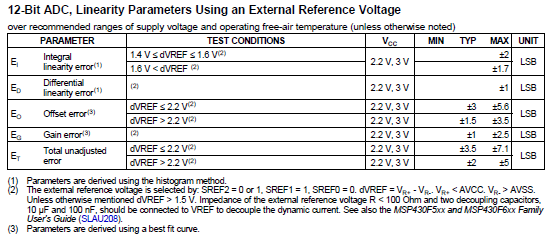

We're currently using the MSP430F2618 and transitioning to the MSP430F5359. I've read the data sheets for both parts, and if I'm reading them correctly, their ADC12 sections specify the ADC accuracy in uncalibrated terms. But these MSP430s contain calibration data in Flash Information Segment A, right? And I believe we're using that data.
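For reference, here is a minimal sketch of how the stored factors are typically applied to a raw result. It assumes the two-step correction described in the MSP430x5xx/6xx family user's guide, ADC(final) = ADC(raw) × CAL_ADC_GAIN_FACTOR / 2^15 + CAL_ADC_OFFSET. The F2xx family user's guide should be checked for its own version, which may differ. The function name `apply_adc12_calibration` is hypothetical, reading the factors out of the device's calibration table is not shown, and the rounding and clamping are my own choices, not something the guides require.

```c
#include <stdint.h>

/* Hypothetical helper: applies gain and offset calibration factors to one
 * raw 12-bit ADC12 result, assuming the F5xx user's guide formula
 *   ADC(final) = ADC(raw) * CAL_ADC_GAIN_FACTOR / 2^15 + CAL_ADC_OFFSET
 * The caller is assumed to have already read both factors from the
 * device's calibration data. */
uint16_t apply_adc12_calibration(uint16_t raw, uint16_t gain_factor, int16_t offset)
{
    /* Gain correction: gain_factor is fixed-point with 0x8000 == 1.0.
     * 4095 * 65535 fits comfortably in 32 bits, so this cannot overflow.
     * Adding 0x4000 (half of 2^15) rounds to nearest instead of truncating. */
    uint32_t scaled = ((uint32_t)raw * gain_factor + 0x4000u) >> 15;

    /* Offset correction: the offset is a signed (two's-complement) count. */
    int32_t corrected = (int32_t)scaled + offset;

    /* Clamp back into the 12-bit output range (a design choice). */
    if (corrected < 0) {
        corrected = 0;
    } else if (corrected > 4095) {
        corrected = 4095;
    }
    return (uint16_t)corrected;
}
```

For example, with a gain factor of 0x8000 (exactly 1.0) and an offset of +7, a raw reading of 1000 becomes 1007. Because this is a straight-line correction applied identically to every code, it changes the slope and intercept of the transfer function and nothing else.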

Is there documentation that talks about the calibrated accuracy of the ADC12? And which of the various ADC12 accuracy parameters are affected by the calibration factor(s)?

(And if I just missed an Application Note or some such, please don't be shy about telling me to go read that! ;-) )