Hello,

We are currently designing a low-power brushless motor controller and have an issue with the DC offset calibration of the phase currents. We are using both the SPI and the hardware versions of the DRV8353 (RS and FH) and can replicate the problem on both.

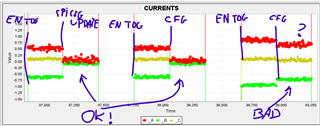

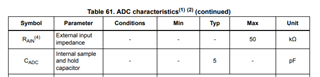

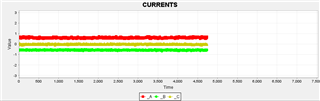

Our approach to DC offset calibration is to spin the motor in open-loop control mode, measure each phase current's amplitude (find the min/max values), and set each offset so that the waveform is centered on zero. This calibration works really well, even better than simply measuring the zero-current offsets at startup and subtracting them. Here's proof for that:

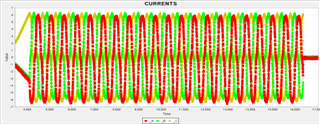

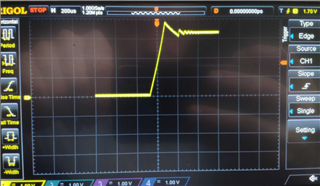

Simple calibration (done at every startup: average the measured DC offset and subtract it so that the zero-current level sits at true zero)

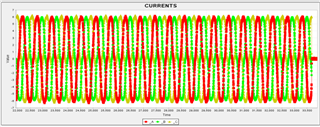

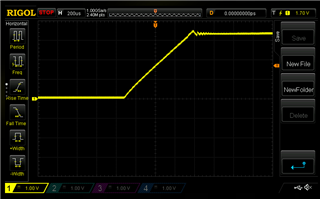

Calibration during open-loop movement:

There's clearly an improvement in the waveform alignment, which results in smoother operation (especially in haptic devices).
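As an illustration only (this is not the firmware code), the two offset estimates compared above can be sketched as follows. The function names, the ADC-count units, and the synthetic test waveform are all assumptions made for the example. Note that the min/max midpoint only equals the DC offset if the current waveform is symmetric.

```python
import math
from statistics import fmean


def startup_offset(zero_current_samples):
    """Simple calibration: average of samples taken while no current flows."""
    return fmean(zero_current_samples)


def open_loop_offset(waveform_samples):
    """Open-loop calibration: midpoint between the waveform's min and max."""
    return (min(waveform_samples) + max(waveform_samples)) / 2.0


def synthetic_phase(n=360, amplitude=500.0, offset=2060.0):
    """Hypothetical test signal: one sine period riding on a DC offset (ADC counts)."""
    return [offset + amplitude * math.sin(2.0 * math.pi * k / n) for k in range(n)]


if __name__ == "__main__":
    wave = synthetic_phase()
    # The midpoint estimate recovers the offset the synthetic wave was built with.
    print("open-loop offset estimate:", open_loop_offset(wave))
    print("startup offset estimate:", startup_offset([2047, 2049, 2048, 2048]))
```

Either estimate is then subtracted from every raw sample of that phase before the value is converted to amperes.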

However, this is where the problems start. To avoid repeating the open-loop sequence, the offsets are saved in flash memory after the initial calibration routine, so they stay the same after each power-up. The same is not true of the DRV amplifier "zero" level: the DRV clearly changes its amplifier output zero levels between start-ups, even ones only a few seconds apart. In fact, even an MCU reset can cause problems (this essentially just toggles the EN pin; the DRV is in 3x PWM mode, so EN is shorted to INLx).

We are using only phases B and C for measurements; phase A is computed from Kirchhoff's current law.
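A minimal sketch of that reconstruction, assuming a star-connected motor with no neutral return so that the three phase currents sum to zero. The function name, the `amps_per_count` scale factor, and the sample values are hypothetical.

```python
def phase_currents(raw_b, raw_c, offset_b, offset_c, amps_per_count):
    """Return (i_a, i_b, i_c) in amperes from raw B and C ADC readings."""
    i_b = (raw_b - offset_b) * amps_per_count
    i_c = (raw_c - offset_c) * amps_per_count
    # Kirchhoff's current law: i_a + i_b + i_c = 0
    i_a = -(i_b + i_c)
    return i_a, i_b, i_c


if __name__ == "__main__":
    # Hypothetical readings with both offsets at mid-scale of a 12-bit ADC.
    print(phase_currents(2148, 1998, 2048, 2048, 0.01))
```

Because phase A is derived rather than measured, any error in the B offset shows up with the opposite sign in the reconstructed A current as well.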

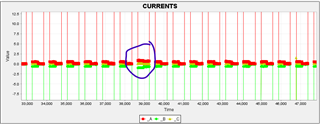

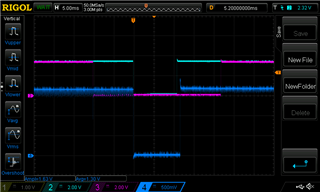

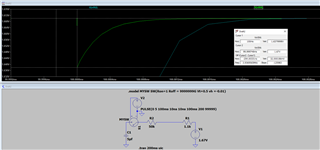

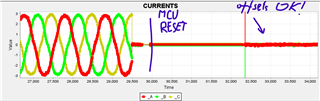

GOOD calibration outcome:

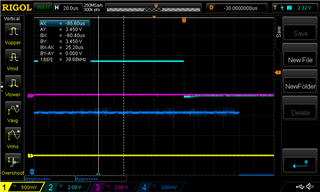

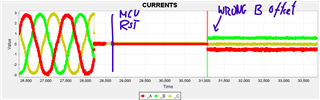

WRONG calibration outcome:

You can clearly see that the offsets determined by the calibration were correct, bringing all three waveforms to the same level. However, after the reset, the B phase is off, even though the offsets are read from flash memory.

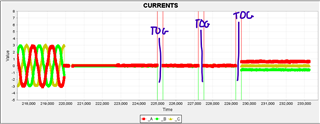

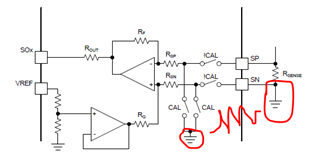

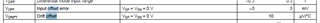

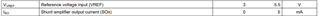

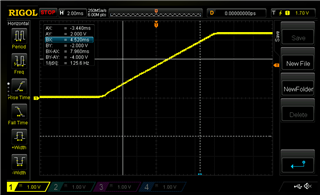

Initially, we thought it was related to the lack of a CAL pin and to the automatic calibration routine that is performed in the first 50 µs after VREF crosses the minimum voltage level. The voltage on VREF during startup is not that clean because the buck is starting up (the voltage is measured directly at the VREF capacitor with a spring oscilloscope contact):

SPI version (with integrated buck):

Hardware version (external buck):

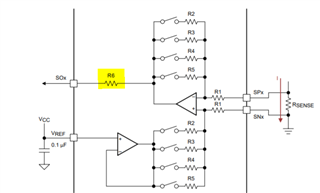

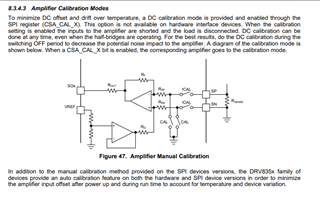

As can be seen, there is some voltage ripple near the 3.3 V level, especially in the SPI version with the built-in buck. Looking at this and the internal diagram:

We saw that VREF is one of the sources that can disrupt the calibration process, since SP/SN are completely disconnected during it. Since autocalibration needs around 50 µs, this seemed a plausible guess, which is why we made the soft start of the external buck in the HW version much longer:

This unfortunately did not solve the problem.

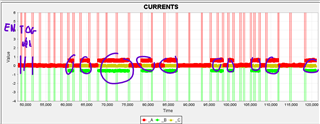

The problem appears to be random and causes the B phase to diverge from the initial offset. It must be an issue with the DRV's internal autocalibration, since it physically changes the "zero-level" voltages on the amplifier outputs (for example, from 1.63 V to 1.67 V on the SOB pin).

This seems to affect only the B channel, as C is always correct after the startup and calibration routine. The direction in which phase B is off is random.
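To put the SOB shift mentioned above (1.63 V to 1.67 V) in perspective, the sketch below converts an amplifier output shift into the equivalent current error. The gain and shunt values are placeholders, not the values on our board; the DRV8353 amplifier gain is selectable between 5, 10, 20, and 40 V/V.

```python
def offset_shift_to_current(delta_v, gain_v_per_v, r_shunt_ohm):
    """Equivalent current error (A) for a shift delta_v (V) at the amplifier output."""
    return delta_v / (gain_v_per_v * r_shunt_ohm)


if __name__ == "__main__":
    shift = 1.67 - 1.63  # SOB zero-level change observed between start-ups
    # Assumed for illustration only: 20 V/V gain and a 5 mOhm shunt.
    print(offset_shift_to_current(shift, 20.0, 0.005))
```

With those assumed values the 40 mV shift corresponds to roughly 0.4 A of apparent phase current, which is why a stored offset no longer centers the B waveform.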

Do you have any ideas what could be wrong?

Best Regards,

Piotr Wasilewski