Hi,

We did all the necessary measurements for the GPC cycle on a 24 V, 9 Ah AGM battery with 12 cells.

The results were not very good, so we started adjusting the values of LearnSOC, FitMaxSOC and FitMinSOC to bring the SOC error into an acceptable range.
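One way to put a number on the SOC error is to compare the SOC reported by the gauge with a coulomb-counted reference over a full discharge. The sketch below assumes a log with timestamps in seconds, current in A (discharge positive) and reported SOC in %, and treats the total charge removed until termination as 100 %. The `Sample` type and the function names are hypothetical, and this is not necessarily how GPC computes its own error figure.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    t_s: float           # timestamp in seconds
    current_a: float     # current in A; assumption: positive = discharge
    reported_soc: float  # SOC reported by the gauge in %


def reference_soc(samples: List[Sample]) -> List[float]:
    """Coulomb-counted reference SOC: 100 % at the first sample, 0 % at the last."""
    # Integrate the removed charge (A*s) with the trapezoidal rule
    passed = [0.0]
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t_s - prev.t_s
        passed.append(passed[-1] + 0.5 * (prev.current_a + cur.current_a) * dt)
    total = passed[-1]
    if total <= 0:
        raise ValueError("no discharged charge found in log")
    return [100.0 * (1.0 - q / total) for q in passed]


def max_soc_error(samples: List[Sample]) -> float:
    """Largest absolute difference between gauge SOC and reference SOC, in % points."""
    ref = reference_soc(samples)
    return max(abs(s.reported_soc - r) for s, r in zip(samples, ref))


# Synthetic example (not measured data): 45 A constant for 720 s, i.e. 9 Ah removed
log = [Sample(0, 45.0, 100.0), Sample(360, 45.0, 55.0), Sample(720, 45.0, 0.0)]
print(f"max SOC error: {max_soc_error(log):.1f} %")
```

Applied to each temperature/current combination, this gives one comparable error figure per test run.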

These are the best results we finally got:

3652.GPCPackaged-report.zip

1460.GPCPackaged.zip

We ran the GPC cycles at discharge currents of 6 A and 45 A, each at -5°C, 20°C and 40°C, to cover the requirements of our application.
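For reference, the two test currents can be expressed as C-rates relative to the 9 Ah nominal capacity: 6 A is about 0.67 C and 45 A is 5 C. A minimal sketch of that arithmetic, assuming the nominal 9 Ah is the right capacity basis (the constant and function names are illustrative only):

```python
# C-rate = discharge current / nominal capacity
NOMINAL_CAPACITY_AH = 9.0  # 9 Ah AGM pack described in the post


def c_rate(current_a: float, capacity_ah: float = NOMINAL_CAPACITY_AH) -> float:
    """Return the discharge rate as a multiple of C."""
    return current_a / capacity_ah


for current in (6.0, 45.0):
    print(f"{current:>5.1f} A -> {c_rate(current):.2f} C")
```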

We then used the results of these cycles to test the SOC calculation.

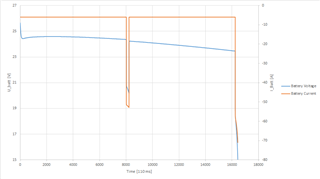

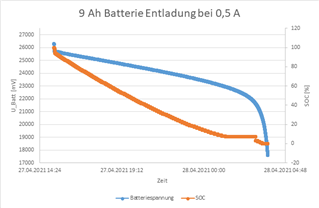

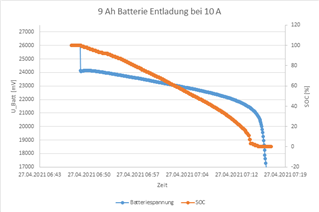

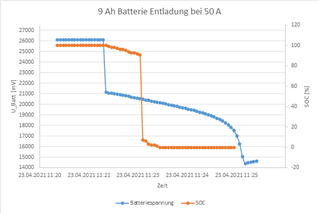

Unfortunately, the results were really bad, as the diagrams above show.

The above measurements were done at 20°C. At the other temperatures (-5°C and 40°C) the results look the same.

We also tried the CEDV smoothing feature to get better results, but at the high discharge current (third diagram) it does not help at all: the SOC drops abruptly to 7 %.

Does anyone have an idea what we could change to get better results?

Best Regards,

Oliver Wendel