I created a golden image by running a learning cycle on the bq27531 EVM. I went through several charge/discharge cycles, and it seemed to be working well. However, when I programmed the bq27531 on my target hardware with that image, it performed noticeably worse.

When charging, it reports 100% long before charging is complete: by the time it reports 100%, only about 60% of the charge that passes in a normal charging cycle has gone in. For example, on one charge cycle I started with the gauge reporting 5%, after fully discharging the pack and letting it relax for more than 5 hours. I charged until the gas gauge automatically stopped charging because the taper current had been reached. Total passed charge for the cycle was 7115 mAh, which is consistent with what I was getting on the evaluation board. However, the gas gauge reported 100% after a passed charge of only 4229 mAh, and then it just sat at 100% for the rest of the charge.
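The "about 60%" figure above can be checked with a quick calculation. This is a minimal sketch, assuming the start of charge corresponds to roughly 0% true SOC (the pack was fully discharged and relaxed, even though the gauge read 5%) and that the 7115 mAh total represents the full charge for this cycle:

```python
# Charge-cycle figures quoted above (mAh).
TOTAL_PASSED_MAH = 7115   # passed charge from start of charge to taper termination
PASSED_AT_100_MAH = 4229  # passed charge when the gauge first reported 100%


def true_soc_on_charge(passed_mah: float, total_mah: float) -> float:
    """Approximate true SOC (%) during charge.

    Assumption: charging started at ~0% true SOC, so the fraction of the
    cycle's total passed charge delivered so far equals the true SOC.
    """
    if total_mah <= 0:
        raise ValueError("total passed charge must be positive")
    return 100.0 * passed_mah / total_mah


soc = true_soc_on_charge(PASSED_AT_100_MAH, TOTAL_PASSED_MAH)
print(f"Gauge reported 100% at a true SOC of about {soc:.1f}%")
```

With these numbers the gauge reaches 100% at roughly 59.4% of the charge actually delivered in the cycle.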

During the charge cycle, the full charge capacity (FCC) moves up and down: it first drops, then rises, usually ending at a reasonable number. In this example it started at 4477 mAh, fell as low as 3663 mAh, and ended at 7309 mAh.

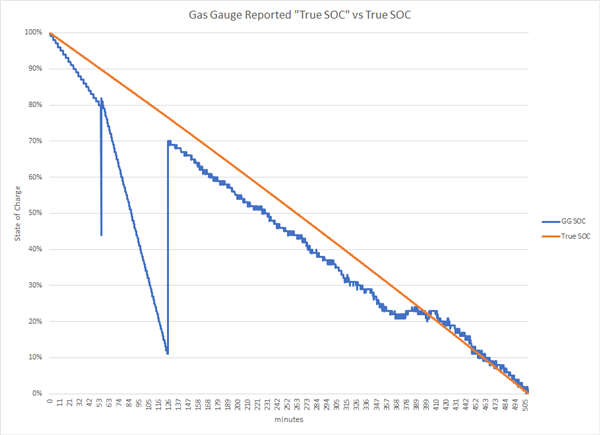

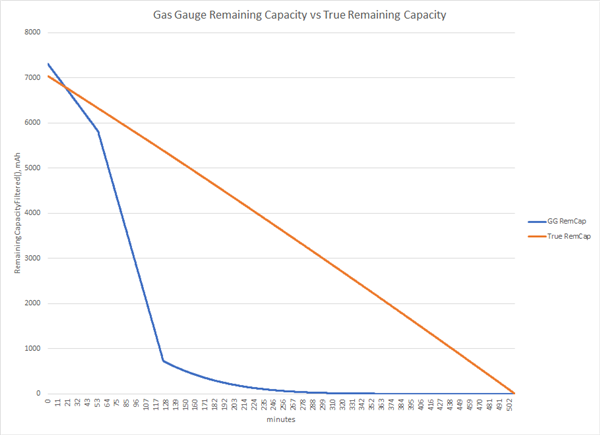

The discharge cycle is better behaved: the reported SOC tracks the true SOC reasonably well. (I define "true SOC" by comparing the passed charge with the total passed charge at the point where I reach the terminate voltage.)
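To make the definition of "true SOC" above concrete: during a discharge that starts from full charge, true SOC is the fraction of the total passed charge (known only once the terminate voltage is reached) that has not yet been removed. This is a minimal sketch; the function names are hypothetical, and the tracking error is simply the gauge's reported SOC minus this reference value:

```python
def true_soc_on_discharge(passed_mah: float, total_to_terminate_mah: float) -> float:
    """True SOC (%) after removing passed_mah from a full pack.

    total_to_terminate_mah is the total passed charge from full charge to the
    terminate voltage, measured after the discharge has finished.
    """
    if total_to_terminate_mah <= 0:
        raise ValueError("total passed charge must be positive")
    return 100.0 * (1.0 - passed_mah / total_to_terminate_mah)


def soc_error(reported_soc: float, passed_mah: float, total_to_terminate_mah: float) -> float:
    """Reported minus true SOC, in percentage points (positive means the gauge reads high)."""
    return reported_soc - true_soc_on_discharge(passed_mah, total_to_terminate_mah)
```

Applying `soc_error` to each logged sample of a discharge gives a per-point comparison between the gauge and the after-the-fact reference.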

I suspect this problem has something to do with running the learning cycle by discharging the battery to its limit of 2.5 V and then changing Final Voltage and Terminate Voltage to my system minimum voltage of 3.5 V. I also changed Design Capacity to 6000 mAh (about what I expected to get out of the battery under worst-case current draw) and CC Threshold to 5400 mAh (90% of Design Capacity). When I made these adjustments on the evaluation board, it worked great: the gauge started treating 3.5 V as 0% SOC.
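The parameter changes described above can be written out with the 90% relationship made explicit. This is a minimal sketch; the dictionary keys simply mirror the data-flash parameter names as written in this post and are not an export format of bqStudio or any other tool:

```python
# Post-learning parameter changes described above (voltages in mV, capacities in mAh).
DESIGN_CAPACITY_MAH = 6000    # expected usable capacity under worst-case current draw
SYSTEM_MIN_VOLTAGE_MV = 3500  # system minimum voltage (3.5 V)

updated_params = {
    "Terminate Voltage": SYSTEM_MIN_VOLTAGE_MV,        # raised from the 2.5 V used for the learning cycle
    "Final Voltage": SYSTEM_MIN_VOLTAGE_MV,
    "Design Capacity": DESIGN_CAPACITY_MAH,
    "CC Threshold": round(0.9 * DESIGN_CAPACITY_MAH),  # 90% of Design Capacity
}

for name, value in updated_params.items():
    print(f"{name}: {value}")
```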

Comparing my data from the eval board and my system, one difference jumps out at me. On the eval board, once I changed the voltages from 2.5 V to 3.5 V, Qmax never updated again. Before that it had been making small updates and seemed to settle at a reasonable value (13142 mAh vs. a nominal battery pack capacity of 13400 mAh). After I programmed the golden image onto my target hardware, Qmax changed to 7800 mAh after my first discharge/charge cycle. It isn't clear to me whether Qmax is supposed to be the total capacity of the battery pack or the capacity of the range from full charge to Terminate Voltage. Was I supposed to change Qmax when updating the parameters for my Terminate Voltage of 3.5 V?

I have only gone through a few cycles, so I'm not sure whether the behavior will stabilize. If it does, maybe I can create a new golden image?

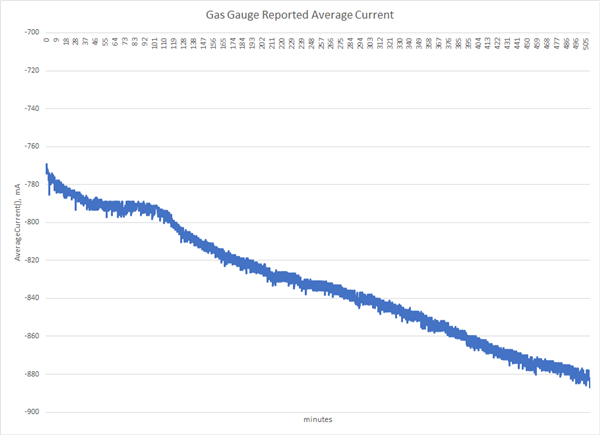

Any thoughts on what the problem is here? When updating the parameters after the learning cycle to match my system, I changed Terminate Voltage, Final Voltage, Design Capacity, and CC Threshold to match my system's low-voltage limit and the observed available capacity (this is what SLUA903 says to do in section 2.1). I don't think there is any drastic difference between the eval board environment and my target environment; the load currents are about the same. As far as I can tell, loading a golden image did not fully duplicate the gauge state onto the new gas gauge. I suppose I could test this by programming the eval board with the golden image.

Thanks,

Dave