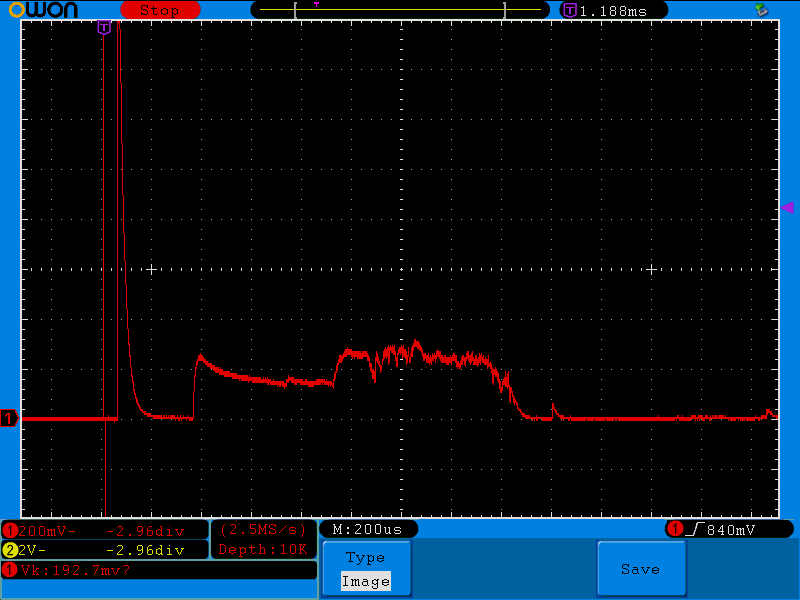

I am trying to decide whether to periodically disable the TPS61040 to conserve battery life. The load on the switcher will be disconnected when not needed no matter what, so the comparison I'm trying to make is whether the switcher uses more energy (1) being left enabled for 50ms or (2) being disabled for 45ms and enabled for 5ms. The quiescent current is 28uA, so 28uA * 50ms = 1.4mA*ms. I captured the following waveform of the voltage across a 3.3-ohm resistor in series with the switcher input at startup:

The average voltage looks to be about 200mV over a period of about 2ms, which corresponds to a current of 200mV / 3.3ohms = 60.6mA. The startup cost is therefore 60.6mA * 2ms = 121.2mA*ms, roughly 87 times the 1.4mA*ms spent just leaving the switcher running for the whole 50ms. (Strictly, mA*ms is charge rather than energy, but since both cases draw from the same battery voltage, comparing charge is equivalent to comparing energy.)

I realize that having a resistor in series with the switcher input changes the behavior somewhat, but is this result in line with what you would expect? It seems to me that disabling the switcher would only be worthwhile if the disable period were much longer, say 5s instead of 50ms.

I don't do a lot of design with batteries, so I'm just looking for some general feedback on whether I'm thinking about this correctly.

Thanks,
-Pat
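The arithmetic above can be checked with a short Python sketch. It integrates the shunt voltage numerically (trapezoidal rule) instead of eyeballing an average, compares the two options, and solves for the off time at which one startup surge is paid back by the quiescent current saved. All function names are hypothetical helpers, the sample list is a flat 200mV stand-in rather than the actual capture, and the shutdown current defaults to 0 as an assumption (check the TPS61040 datasheet for the real figure).

```python
"""Back-of-envelope charge budget for duty-cycling a boost converter.

Assumptions (not from a datasheet): shutdown current defaults to 0 A, and the
startup surge is added on top of quiescent current for the whole enabled
window (a slightly pessimistic estimate).
"""

SHUNT_OHMS = 3.3       # series sense resistor used for the measurement
I_QUIESCENT_A = 28e-6  # quiescent current quoted in the post (28uA)


def shunt_charge(samples_v, dt_s, r_ohms=SHUNT_OHMS):
    """Integrate V/R over uniformly spaced shunt-voltage samples (trapezoidal rule).

    Returns charge in coulombs (1 mA*ms = 1 uC = 1e-6 C).
    """
    volt_seconds = 0.0
    for v_a, v_b in zip(samples_v, samples_v[1:]):
        volt_seconds += 0.5 * (v_a + v_b) * dt_s
    return volt_seconds / r_ohms


def charge_left_on(period_s, i_q=I_QUIESCENT_A):
    """Option 1: converter enabled for the whole period."""
    return i_q * period_s


def charge_cycled(on_s, off_s, startup_c, i_q=I_QUIESCENT_A, i_shutdown=0.0):
    """Option 2: one startup surge, quiescent draw while on, shutdown draw while off."""
    return startup_c + i_q * on_s + i_shutdown * off_s


def break_even_off_time(startup_c, i_q=I_QUIESCENT_A, i_shutdown=0.0):
    """Off time at which the net quiescent charge saved equals one startup surge."""
    return startup_c / (i_q - i_shutdown)


if __name__ == "__main__":
    # Hypothetical stand-in for the scope capture: a flat 200mV over 2ms,
    # sampled every 10us (201 points). Replace with real exported samples.
    samples = [0.200] * 201
    q_start = shunt_charge(samples, dt_s=10e-6)

    q_on = charge_left_on(0.050)
    q_cycle = charge_cycled(on_s=0.005, off_s=0.045, startup_c=q_start)

    print(f"startup charge : {q_start * 1e6:.1f} uC (mA*ms)")
    print(f"left on, 50ms  : {q_on * 1e6:.2f} uC")
    print(f"cycled, 5/45ms : {q_cycle * 1e6:.2f} uC")
    print(f"break-even off : {break_even_off_time(q_start):.2f} s")
```

With the numbers from the post, the break-even off time works out to about 121.2uC / 28uA ≈ 4.3s, which is consistent with the 5s intuition; any nonzero shutdown current pushes it slightly longer.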