Other Parts Discussed in Thread: MSP430FR5994, BQSTUDIO, EV2400

Hi!

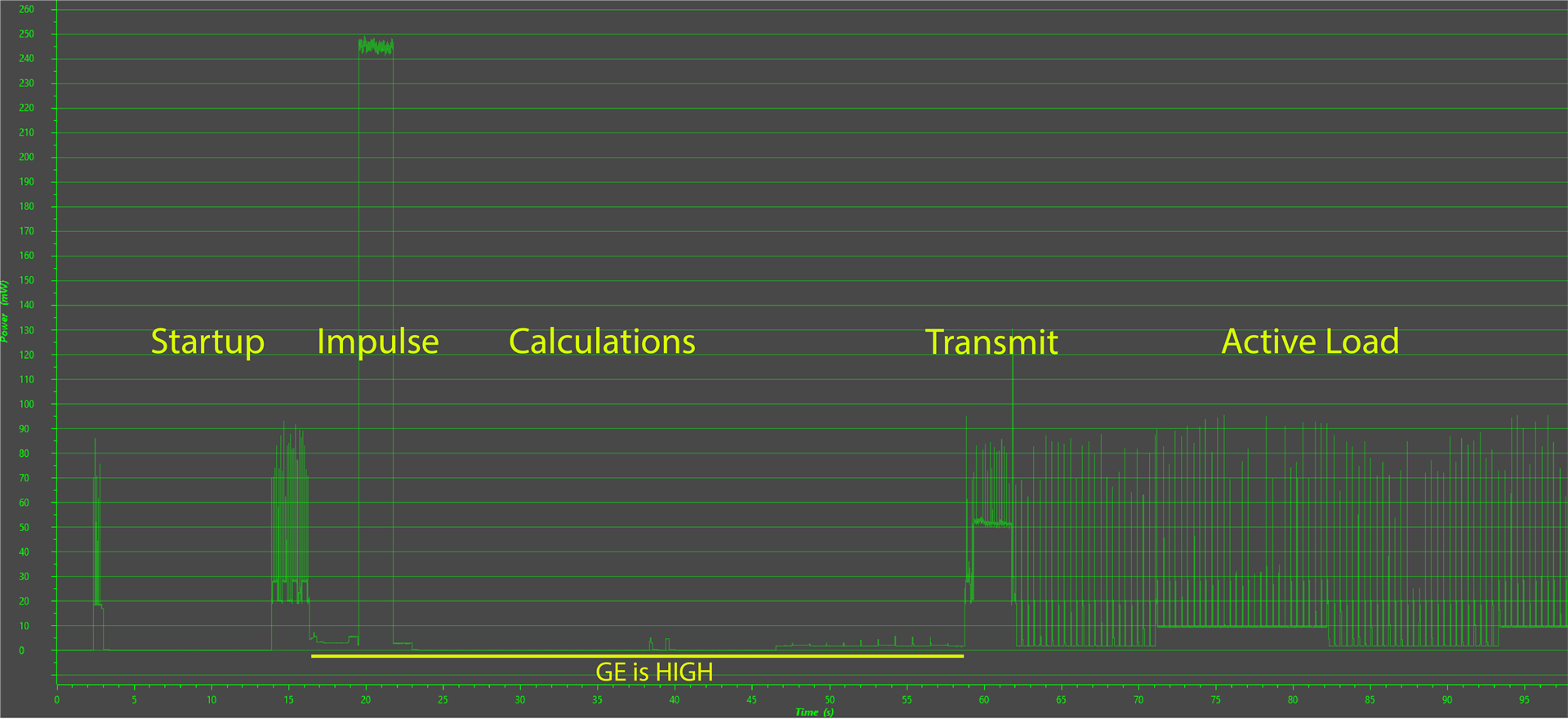

Equipment: the BQ35100 is wired according to the datasheet and controlled by an MCU over I2C. The GE pin and the ALERT output are also handled by the MCU. An XBee module serves as the load; in transmit mode it draws 20 mA, which causes a voltage drop of 80 mV.

Power source: two SAFT LS14500 cells connected in parallel. Voltage 3.6 V, combined capacity 5200 mAh, Li-SOCl2 chemistry.

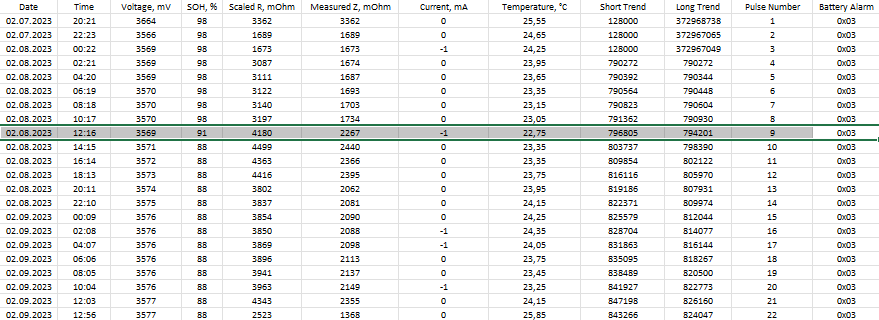

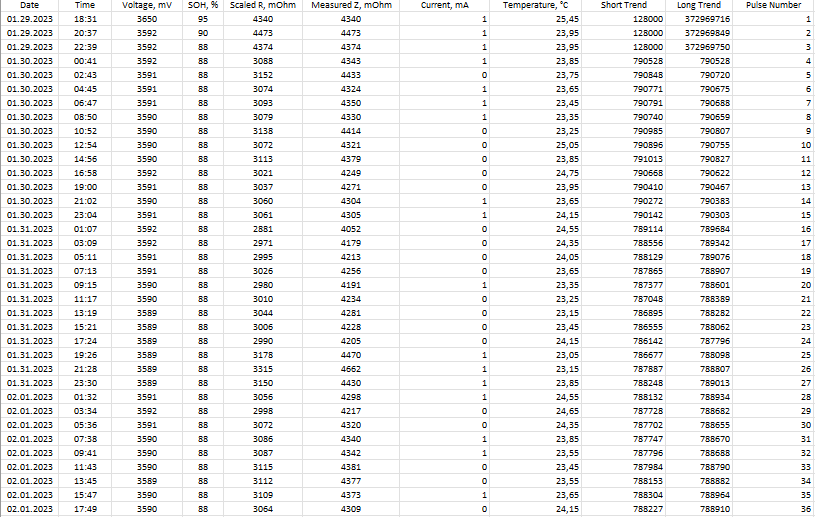

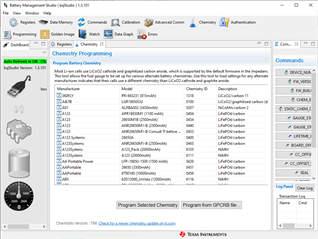

I connected the EV2300 programmer to the BQ35100 over I2C and calibrated the chip's current and voltage in bqStudio, following the SLUUBH7 and SLUA904 manuals. I then set the battery parameters, set Operation Config A to EOS mode, and updated the chemistry ID to 0623 (which updated the Ra table). All parameters can be seen in the memory dump. After that I unplugged the EV2300 and tested a new battery down to full discharge using the MCU.

Measurement algorithm:

1) Enable GE pin.

2) Read battery status.

3) Send GAUGE_START command.

4) Wait for GA bit.

5) Send a command to the XBee that causes a voltage drop of 60-80 mV for 2 seconds.

6) Send GAUGE_STOP command. For the next 15 seconds, consumption is minimal.

7) Wait for ALERT to go low, which signals G_DONE.

8) Read Voltage and SOH.

9) Disable GE pin.
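A minimal sketch of steps 1-9 in C, in case it helps to see the sequencing and timeouts in one place. This is not my actual firmware: the `gauge_hal_t` hooks, the error codes, the timeout values, and the `sim_*` stand-in hardware (with placeholder readings) are all assumptions made for illustration, and no BQ35100 register addresses are implied.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical board hooks: illustrative names, not a TI API. */
typedef struct {
    void (*set_ge)(bool on);                       /* drive the GE pin      */
    bool (*read_battery_status)(uint16_t *status); /* false on bus error    */
    bool (*gauge_start)(void);                     /* send GAUGE_START      */
    bool (*gauge_stop)(void);                      /* send GAUGE_STOP       */
    bool (*ga_bit_set)(void);                      /* poll the GA bit       */
    bool (*alert_is_low)(void);                    /* sample the ALERT pin  */
    void (*run_load_ms)(uint32_t ms);              /* XBee load pulse       */
    void (*delay_ms)(uint32_t ms);
    bool (*read_results)(uint16_t *mv, uint8_t *soh_pct);
} gauge_hal_t;

typedef enum {
    GAUGE_OK, GAUGE_ERR_BUS, GAUGE_ERR_GA_TIMEOUT, GAUGE_ERR_DONE_TIMEOUT
} gauge_result_t;

/* Assumed limits; only LOAD_MS (2 s) comes from the description above. */
enum { GA_TIMEOUT_MS = 1000, DONE_TIMEOUT_MS = 20000, POLL_MS = 10, LOAD_MS = 2000 };

/* Poll cond() every POLL_MS until it is true or timeout_ms has elapsed. */
static bool wait_for(const gauge_hal_t *hal, bool (*cond)(void), uint32_t timeout_ms)
{
    for (uint32_t waited = 0; !cond(); waited += POLL_MS) {
        if (waited >= timeout_ms) return false;
        hal->delay_ms(POLL_MS);
    }
    return true;
}

/* Steps 1-9. GE is always released on exit, even after a failure. */
gauge_result_t gauge_measure(const gauge_hal_t *hal, uint16_t *mv, uint8_t *soh_pct)
{
    assert(hal && mv && soh_pct);
    gauge_result_t rc = GAUGE_ERR_BUS;
    uint16_t status = 0;

    hal->set_ge(true);                                         /* 1 */
    if (!hal->read_battery_status(&status)) goto done;         /* 2 */
    if (!hal->gauge_start()) goto done;                        /* 3 */
    if (!wait_for(hal, hal->ga_bit_set, GA_TIMEOUT_MS)) {      /* 4 */
        rc = GAUGE_ERR_GA_TIMEOUT; goto done;
    }
    hal->run_load_ms(LOAD_MS);                                 /* 5 */
    if (!hal->gauge_stop()) goto done;                         /* 6 */
    if (!wait_for(hal, hal->alert_is_low, DONE_TIMEOUT_MS)) {  /* 7 */
        rc = GAUGE_ERR_DONE_TIMEOUT; goto done;
    }
    if (!hal->read_results(mv, soh_pct)) goto done;            /* 8 */
    rc = GAUGE_OK;
done:
    hal->set_ge(false);                                        /* 9 */
    return rc;
}

/* Simulated hardware (placeholder values) so the flow can run on a PC. */
static bool sim_ge;          /* current GE pin state                 */
static int  sim_alert_polls; /* ALERT reads low after a few polls    */
static void sim_set_ge(bool on) { sim_ge = on; sim_alert_polls = 0; }
static bool sim_status(uint16_t *s) { *s = 0; return sim_ge; }
static bool sim_cmd(void) { return sim_ge; }
static bool sim_ga(void) { return true; }
static bool sim_alert(void) { return ++sim_alert_polls > 3; }
static void sim_wait(uint32_t ms) { (void)ms; }
static bool sim_results(uint16_t *mv, uint8_t *soh)
{
    *mv = 3600; *soh = 100; /* made-up readings, not measured data */
    return sim_ge;
}

static const gauge_hal_t sim_hal = {
    .set_ge = sim_set_ge, .read_battery_status = sim_status,
    .gauge_start = sim_cmd, .gauge_stop = sim_cmd,
    .ga_bit_set = sim_ga, .alert_is_low = sim_alert,
    .run_load_ms = sim_wait, .delay_ms = sim_wait,
    .read_results = sim_results
};
```

Calling `gauge_measure(&sim_hal, &mv, &soh)` walks through the whole sequence against the simulated hooks. On real hardware each hook would wrap the MCU's own I2C and GPIO routines, and distinct timeout codes make it easier to tell a missing GA bit from a missing G_DONE alert.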

Then two functions are used to read Scaled R and Measured Z. Each function consists of:

2.1) Enable GE pin.

2.2) Read battery status.

2.3) Read value.

2.4) Disable GE pin.

(In hindsight, it would have been better to read these parameters right after the main measurement, without toggling the GE pin.)

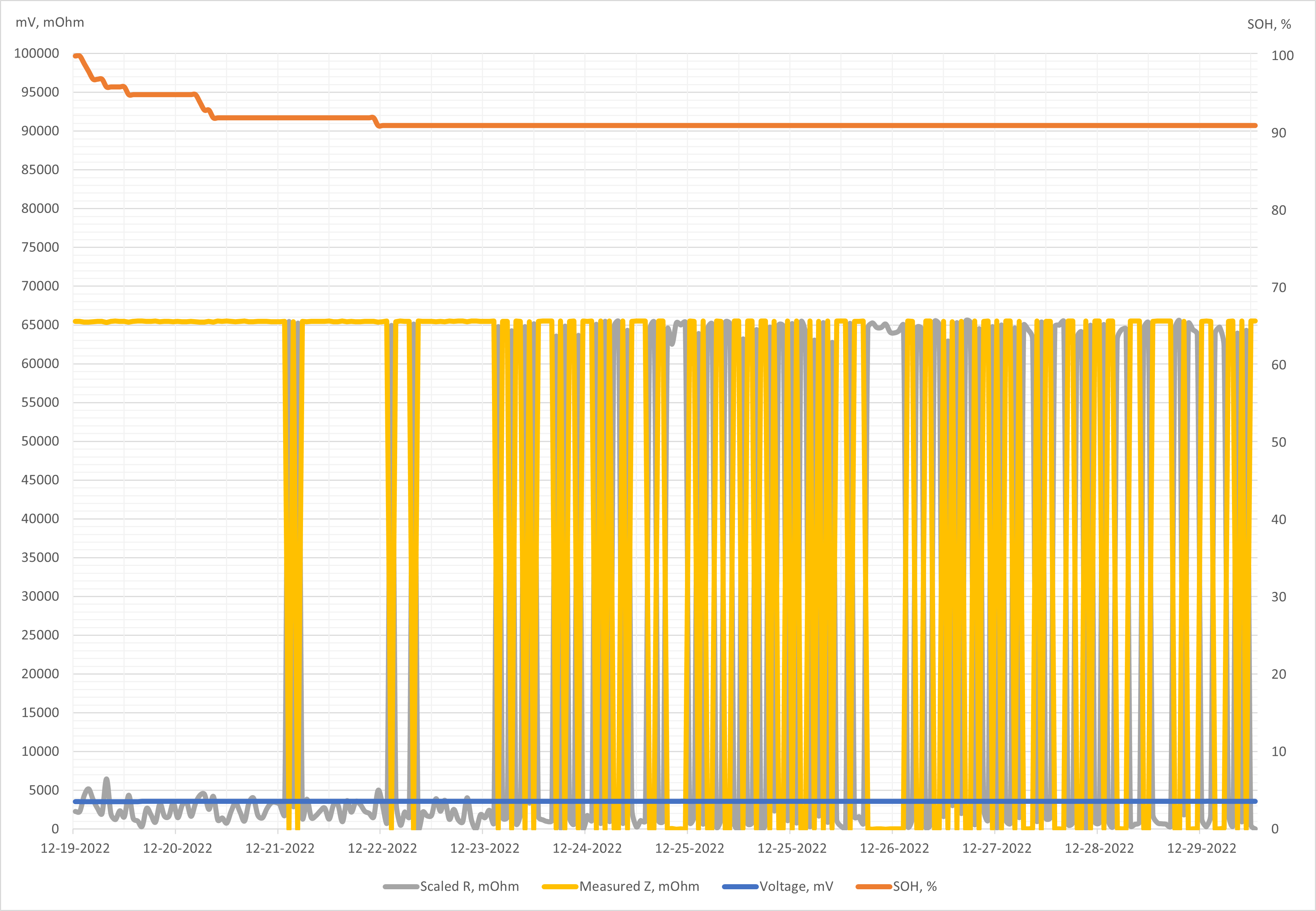

These three functions ran every hour. After each set of measurements, XBee data transfer was switched on, which drew 10 mA. At that rate the battery should have been discharged in about 20 days. However, I stopped the test on day 11 because the Scaled R and Measured Z values had become clearly wrong, and the SOH value never fell below 91%. I took a memory dump after the test, as well as a golden image.
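The discharge estimate can be checked with a quick calculation. The sketch below assumes an ideal, constant 10 mA draw from the full 5200 mAh and ignores self-discharge, passivation, and the short hourly load pulses; the function names are only illustrative.

```c
#include <assert.h>

/* Ideal runtime in days for a constant load current. */
double runtime_days(double capacity_mah, double load_ma)
{
    assert(load_ma > 0.0);
    return capacity_mah / load_ma / 24.0;
}

/* Fraction of nominal capacity drawn after a number of days at constant load. */
double fraction_used(double days, double capacity_mah, double load_ma)
{
    assert(capacity_mah > 0.0);
    return days * 24.0 * load_ma / capacity_mah;
}
```

With these assumptions, `runtime_days(5200, 10)` comes to roughly 21.7 days, and `fraction_used(11, 5200, 10)` comes to roughly 0.51, so about half of the nominal capacity would already have been drawn by day 11. That is the figure to hold against the 91% SOH reading.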

Is there an error in the algorithm? Perhaps the chip settings are incorrect? I would be very grateful for your help in this matter.
