Other Parts Discussed in Thread: GPCCEDV

Hi,

I am struggling to understand what is going wrong with the CEDV gauging on my 78350 near the end of discharge, and therefore how to improve it.

As background, the battery is a 9S LFP pack.

I have run through the learning cycles a few times with different load conditions. The latest calibration set I have used was done with a low rate of 10 A and a high rate of 100 A, and it returns the following:

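| Parameter | Value |
|---|---|
| ProcessingType | 1 |
| NumCellSeries | 9 |
| CellTermV | 2850 |
| LearnSOC% | 7 |
| FitMaxSOC% | 12 |
| FitMinSOC% | 6 |
| ChemType | 4 |
| EMF | 3261 |
| EDVC0 | 121 |
| EDVC1 | 0 |
| EDVR1 | 470 |
| EDVR0 | 2261 |
| EDVT0 | 4820 |
| EDVTC | 11 |
| VOC75 | 29871 |
| VOC50 | 29619 |
| VOC25 | 29185 |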

| File | SOC error (%) | Pass |
|---|---|---|
| roomtemp_lowrate.csv | 0.835848428645379 | 1 |
| roomtemp_highrate.csv | -0.241990214888698 | 1 |
| hightemp_lowrate.csv | -0.314618964659832 | 1 |
| hightemp_highrate.csv | -0.350313002604034 | 1 |
| lowtemp_lowrate.csv | -0.818230169830177 | 1 |
| lowtemp_highrate.csv | 0.87776579492757 | 1 |

Deviations are within recommended range. CEDV parameters are suitable for programming the gauge.

Battery Low % is set to the default of 7%.

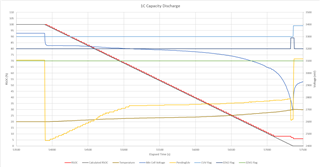

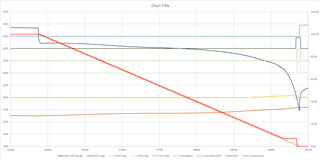

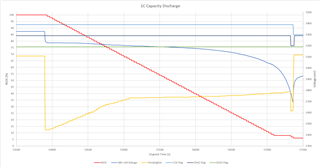

With these parameters programmed, if I do a full-capacity discharge at 54 A at room temperature, the gauge tracks well until it gets towards the end, and then it never drops below 6%.

Looking at it in more detail, the Pending EDV value seems to fluctuate wildly, and the EDV2 flag is only just set before the CUV point is hit (CUV is set to 2700 mV).

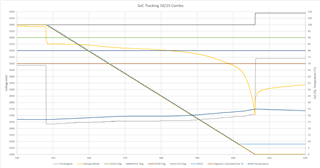

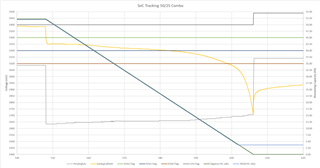

If I take my original room-temperature calibration captures and calculate the cell voltages corresponding to 7%, 3% and 0% SOC, I get 3009, 2940 and 2830 mV from the high rate and 3156, 3037 and 2797 mV from the low rate.
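The voltage-at-SOC lookup described above amounts to a linear interpolation over the logged discharge. This is a minimal sketch, assuming the capture has already been reduced to two lists (charge removed since full, in mAh, strictly increasing, and cell voltage in mV) and that SOC is referenced to the full capacity passed in that run; the function name `voltage_at_soc` and the sample numbers are hypothetical, not taken from GPCCEDV or the actual captures.

```python
from bisect import bisect_left


def voltage_at_soc(discharged_mah, cell_mv, full_capacity_mah, soc_percent):
    """Linearly interpolate cell voltage (mV) at a target SOC from a discharge log.

    discharged_mah: charge removed since full, strictly increasing (hypothetical format).
    cell_mv: cell voltage logged at each of those points.
    SOC is taken as (full - removed) / full, so 0% is full_capacity_mah removed.
    """
    target = full_capacity_mah * (1.0 - soc_percent / 100.0)
    if not discharged_mah[0] <= target <= discharged_mah[-1]:
        raise ValueError("target SOC is outside the logged range")
    i = bisect_left(discharged_mah, target)
    if discharged_mah[i] == target:
        return float(cell_mv[i])
    # Interpolate between the two log points that bracket the target charge.
    x0, x1 = discharged_mah[i - 1], discharged_mah[i]
    y0, y1 = cell_mv[i - 1], cell_mv[i]
    return y0 + (y1 - y0) * (target - x0) / (x1 - x0)


# Made-up log for illustration only.
removed = [0, 1000, 2000, 3000, 4000]
volts = [3400, 3300, 3250, 3100, 2800]
print(voltage_at_soc(removed, volts, 4000, 7))  # approximately 2884 mV
```

The coarse made-up log exaggerates the interpolation error; a real capture sampled every second or so makes the straight-line segments short enough not to matter.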

As my test discharge rate (54 A) lies between the two calibration rates, I would expect the compensated EDV values to sit somewhere between these (i.e. the compensated EDV2 should be somewhere between 3009 and 3156 mV).
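To put a rough number on "somewhere between", a straight-line interpolation in current between the two calibration runs can be sketched as below. It assumes a purely resistive model (loaded voltage falls linearly with current) and that the 3009/2940/2830 mV set is from the 100 A run and the 3156/3037/2797 mV set from the 10 A run, since the higher current should give the lower loaded voltage. The helper `interpolate_by_current` is hypothetical; the gauge's own compensation uses its fitted EDVR0/EDVR1 and temperature terms, not this line.

```python
def interpolate_by_current(i_load, i_low, v_low, i_high, v_high):
    """Estimate loaded cell voltage (mV) at i_load (A), assuming voltage falls
    linearly with current between two calibration discharges."""
    return v_low + (v_high - v_low) * (i_load - i_low) / (i_high - i_low)


# Per-cell values from the room-temperature captures, keyed by SOC (%).
# Assumption: this set is the 100 A run...
high_rate = {7: 3009, 3: 2940, 0: 2830}
# ...and this set is the 10 A run.
low_rate = {7: 3156, 3: 3037, 0: 2797}

for soc in (7, 3, 0):
    v = interpolate_by_current(54.0, 10.0, low_rate[soc], 100.0, high_rate[soc])
    print(f"{soc}%: {v:.0f} mV")
```

Under these assumptions the 54 A estimates come out at about 3084 mV (7%), 2990 mV (3%) and 2813 mV (0%), so a compensated EDV2 in the region of 3084 mV would be the naive expectation.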

You can see on this graph that Pending EDV (yellow) is well outside this range, with the EDV2 point being anywhere between 3100 and 2445 mV.
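To quantify how far Pending EDV strays from the expected band rather than judging it from the plot, a small check over logged samples could look like this. It assumes the Pending EDV readings have been exported as a list of per-cell mV values; `band_excursions` and the sample readings are hypothetical, and the band limits are the 3009 and 3156 mV figures from the room-temperature calibration captures.

```python
def band_excursions(samples_mv, lo_mv, hi_mv):
    """Return (min, max, fraction of samples outside [lo_mv, hi_mv])."""
    if not samples_mv:
        raise ValueError("no samples")
    outside = sum(1 for v in samples_mv if v < lo_mv or v > hi_mv)
    return min(samples_mv), max(samples_mv), outside / len(samples_mv)


# Made-up readings for illustration only, not from the actual log.
print(band_excursions([3100, 2445, 3050, 3200], 3009, 3156))  # (2445, 3200, 0.5)
```

Running this over only the final few percent of the discharge, where EDV2 is being evaluated, would show whether the spread is continuous noise or a few large steps.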

So, any idea what is going on and how this can be improved (other than reverting to fixed EDV)?

Thanks,

Simon